Dynamic systems, represented as a set of ordinary differential equations (ODEs), are commonly used to model behaviors in scientific domains, such as gene regulation (), biological rhythms (), spread of disease (), ecology (), etc. We focus on models specified by a set of ODEs

where the vector

contains the system outputs that evolve over time

t and

θ is the vector of model parameters to be estimated from experimental/observational data. When

f is nonlinear, solving

x(t) given initial conditions

x(0) and

θ generally requires a numerical integration method, such as Runge–Kutta.

Historically, ODEs have mainly been used for conceptual or theoretical understanding rather than data fitting as experimental data were limited. Advances in experimental and data collection techniques have increased the capacity to follow dynamic systems closer to real time. Such data will generally be recorded at discrete times and subject to measurement error. Thus, we assume that we observe y(τ)=x(τ)+ϵ(τ) at a set of observation time points τ with error ϵ governed by noise level σ. Our focus here is inference of θ given y(τ), with emphasis on nonlinear f where specialized methods that exploit a linear structure (e.g., refs. and ), are not generally applicable. We shall present a coherent, statistically principled framework for dynamic system inference with the help of Gaussian processes (GPs). The key to our method is to restrict the GPs on a manifold that satisfies the ODE system: Thus, we name our method MAGI (manifold-constrained Gaussian process inference). Placing a GP on x(t) facilitates inference of θ without numerical integration, and our explicit manifold constraint is the key idea that addresses the conceptual incompatibility between the GP and the specification of the ODE model, as we shall discuss shortly when overviewing our method. We show that the resulting parameter inference is computationally efficient, statistically principled, and effective in a variety of practical scenarios. MAGI particularly works in the cases when some system component(s) is/are unobserved. To the best of our knowledge, none of the current available software packages that do not use numerical integration can analyze systems with unobserved component(s).

Overview of Our Method

Following the Bayesian paradigm, we view the D-dimensional system x(t) to be a realization of the stochastic process X(t)=(X1(t),…,XD(t)) and the model parameters θ a realization of the random variable Θ. In Bayesian statistics, the basis of inference is the posterior distribution, obtained by combining the likelihood function with a chosen prior distribution on the unknown parameters and stochastic processes. Specifically, we impose a general prior distribution π(⋅) on θ and independent GP prior distributions on each component Xd(t) so that Xd(t)∼GP(μd,Kd), t∈[0,T], where Kd:R×R→R is a positive definite covariance kernel for the GP and μd:R→R is the mean function. Then, for any finite set of time points τd, Xd(τd) has a multivariate Gaussian distribution with mean vector μd(τd) and covariance matrix Kd(τd,τd). Denote the observations by y(τ)=(y1(τ1),…,yD(τD)), where τ=(τ1,τ2,…,τD) is the collection of all observation time points, and each component Xd can have its own set of observation times τd=(τd,1,…,τd,Nd). If the dth component is not observed, then Nd=0, and τd=∅. N=N1+⋯+ND is the total number of observations. We note that for the remainder of the paper, the notation t shall refer to time generically, while τ shall refer specifically to the observation time points.

As an illustrative example, consider the dynamic system in ref. that governs the oscillation of Hes1 mRNA (messenger ribonucleic acid) (M) and Hes1 protein (P) levels in cultured cells, where it is postulated that an Hes1-interacting (H) factor contributes to a stable oscillation, a manifestation of biological rhythm (). The ODEs of the three-component system X=(P,M,H) are

where

θ=(a,b,c,d,e,f,g) are the associated parameters. In

Fig. 1, left-most panel, we show noise-contaminated data generated from the system, which closely mimics the experimental setup described in ref. :

P and

M are observed at 15-min intervals for 4 h, but

H is never observed. In addition,

P and

M observations are asynchronous: Starting at time 0, every 15 min we observe

P; starting at 7.5 min, every 15 min we observe

M;

P and

M are never observed at the same time. It can be seen that the mRNA and protein levels exhibit the behavior of regulation via negative feedback.

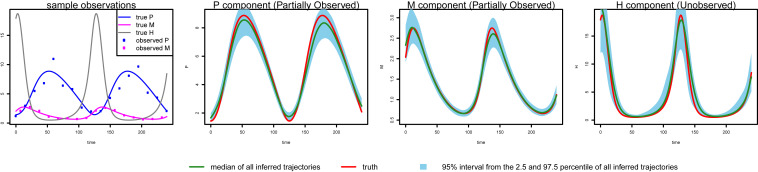

Fig. 1.

Inference by MAGI for Hes1 partially observed asynchronous system on 2,000 simulated datasets. The red curve is the truth. MAGI recovers the system well, without the usage of any numerical solver: The green curve shows the median of the inferred trajectories among the 2,000 simulated datasets, and a 95% interval from the 2.5 and 97.5% of all inferred trajectories is shown via the blue area.

The goal here is to infer the seven parameters of the system: a,b govern the rate of protein synthesis in the presence of the interacting factor; c,d,g are the rates of decomposition; and e,f are inhibition rates. The unobserved H component poses a challenge for most existing methods that do not use numerical integration but is capably handled by MAGI: The P and M panels of Fig. 1 show that our inferred trajectories provide good fits to the observed data, and the H panel shows that the dynamics of the entirely unobserved H component are largely recovered as well. We emphasize that these trajectories are inferred without any use of numerical solvers. We shall return to the Hes1 example in detail in Results.

Intuitively, the GP prior on X(t) facilitates computation as GP provides closed analytical forms for X˙(t) and X(t), which could bypass the need for numerical integration. In particular, with a GP prior on X(t), the conditional distribution of X˙(t) given X(t) is also a GP with its mean function and covariance kernel completely specified. This GP specification for the derivatives x˙(t), however, is inherently incompatible with the ODE model because also completely specifies x˙(t) given x(t) (via the function f). As a key contribution of our method, MAGI addresses this conceptual incompatibility by constraining the GP to satisfy the ODE model in . To do so, we first define a random variable W quantifying the difference between stochastic process X(t) and the ODE structure with a given value of the parameter θ:

W≡0 if and only if ODEs with parameter

θ are satisfied by

X(t). Therefore, ideally the posterior distribution for

X(t) and

θ given the observations

y(τ) and the ODE constraint,

W≡0, is (informally)

While is the ideal posterior, in reality

W is not generally computable. In practice, we approximate

W by finite discretization on the set

I=(t1,t2,…,tn) such that

τ⊂I⊂[0,T] and similarly define

WI as

Note that

WI is the maximum of a finite set, and

WI→W monotonically as

I becomes dense in

[0,T]. Therefore, the practically computable posterior distribution is

which is the joint conditional distribution of

θ and

X(I) together. Thus, effectively, we simultaneously infer both the parameters and the unobserved trajectory

X(I) from the noisy observations

y(τ).

Under Bayes’ rule, we have

where the right-hand side can be decomposed as

The first term (1) can be simplified as P(X(I)=x(I)|Θ=θ)=P(X(I)=x(I)) due to the prior independence of X(I) and Θ; it corresponds to the GP prior on X. The second term (2) corresponds to the noisy observations. The third term (3) can be simplified as

which is the conditional density of

X˙(I) given

X(I) evaluated at

f(x(I),θ,tI). All three terms are multivariate Gaussian: The third term is Gaussian because

X˙(I) given

X(I) has a multivariate Gaussian distribution as long as the kernel

K is twice differentiable.

Therefore, the practically computable posterior distribution simplifies to

where

‖v‖A2=v⊺Av,

|I| is the cardinality of

I,

fd,I x,θ is short for the

dth component of

f(x(I),θ,tI), and the multivariate Gaussian covariance matrix

Cd and the matrix

Kd can be derived as follows for each component

d:

where

′K=∂∂sK(s,t),

K′=∂∂tK(s,t), and

K″=∂2∂s∂tK(s,t).

In practice, we choose the Matern kernel K(s,t)=ϕ121−νΓ(ν)2νlϕ2νBν2νlϕ2, where l=|s−t|, Γ is the Gamma function, Bν is the modified Bessel function of the second kind, and the degree of freedom ν is set to be 2.01 to ensure that the kernel is twice differentiable. K has two hyperparameters ϕ1 and ϕ2. Their meaning and specification are discussed in Materials and Methods.

With the posterior distribution specified in , we use Hamiltonian Monte Carlo (HMC) () to obtain samples of XI and the parameters together. At the completion of HMC sampling, we take the posterior mean of XI as the inferred trajectory and the posterior means of the sampled parameters as the parameter estimates. Throughout the MAGI computation, no numerical integration is ever needed.

Review of Related Work

The problem of dynamic system inference has been studied in the literature, which we now briefly review. We first note that a simple approach to constructing the “ideal” likelihood function is according to p(y(t)|x^(t,θ,x(0)),σ), where x^(t,θ,x(0)) is the numerical solution of the ODE obtained by numerical integration given θ and the initial conditions. This approach suffers from a high computational burden: Numerical integration is required for every θ sampled in an optimization or Markov chain Monte Carlo (MCMC) routine (). Smoothing methods have been useful for eliminating the dependence on numerical ODE solutions, and an innovative penalized likelihood approach () uses a B-spline basis for constructing estimated functions to simultaneously satisfy the ODE system and fit the observed data. While in principle, the method in ref. can handle an unobserved system component, substantive manual input is required as we show in Results, which contrasts with the ready-made solution that MAGI provides.

As an alternative to the penalized likelihood approach, GPs are a natural candidate for fulfilling the smoothing role in a Bayesian paradigm due to their flexibility and analytic tractability (). The use of GPs to approximate the dynamic system and facilitate computation has been previously studied by a number of authors (, 121314–). The basic idea is to specify a joint GP over y,x,x˙ with hyperparameters ϕ and then, provide a factorization of the joint density p(y,x,x˙,θ,ϕ,σ) that is suitable for inference. The main challenge is to find a coherent way to combine information from two distinct sources: the approximation to the system by the GP governed by hyperparameters ϕ and the actual dynamic system equations governed by parameters θ. In refs. and , the factorization proposed is p(y,x,x˙,θ,ϕ,σ)=p(y|x,σ)p(x˙|x,θ,ϕ)p(x|ϕ)p(ϕ)p(θ), where p(y|x,σ) comes from the observation model and p(x|ϕ) comes from the GP prior as in our approach. However, there are significant conceptual difficulties in specifying p(x˙|x,θ,ϕ): On one hand, the distribution of x˙ is completely determined by the GP given x, while on the other hand, x˙ is completely specified by the ODE system x˙=f(x,θ,t); these two are incompatible. Previous authors have attempted to circumvent this incompatibility of the GP and ODE system: Refs. and use a product of experts heuristic by letting p(x˙|x,θ,ϕ)∝p(x˙|x,ϕ)p(x˙|x,θ), where the two distributions in the product come from the GP and a noisy version of the ODE, respectively. In ref. , the authors arrive at the same posterior as refs. and by assuming an alternative graphical model that bypasses the product of experts heuristic; nonetheless, the method requires working with an artificial noisy version of the ODE. In ref. , the authors start with a different factorization: p(y,x,x˙,θ,ϕ,σ)=p(y|x˙,ϕ,σ)p(x˙|x,θ)p(x|ϕ)p(ϕ)p(θ), where p(y|x˙,ϕ) and p(x|ϕ) are given by the GP and p(x˙|x,θ) is a Dirac delta distribution given by the ODE. However, this factorization is incompatible with the observation model p(y|x,σ) as discussed in detail in ref. . There is other related work that uses GPs in an ad hoc partial fashion to aid inference. In ref. , GP regression is used to obtain the means of x and x˙ for embedding within an Approximate Bayesian Computation estimation procedure. In ref. , GP smoothing is used during an initial burn-in phase as a proxy for the likelihood, before switching to the ideal likelihood to obtain final MCMC samples. While empirical results from the aforementioned studies are promising, a principled statistical framework for inference that addresses the previously noted conceptual incompatibility between the GP and ODE specifications is lacking. Our work presents one such principled statistical framework through the explicit manifold constraint. MAGI is therefore distinct from recent GP-based approaches (, ) or any other Bayesian analogs of ref. .

In addition to the conceptual incompatibility, none of the existing methods that do not use numerical integration offer a practical solution for a system with unobserved component(s), which highlights another unique and important contribution of our approach.

Results

We apply MAGI to three systems. We begin with an illustration that demonstrates the effectiveness of MAGI in practical problems with unobserved system component(s). Then, we make comparisons with other current methods on two benchmark systems, which show that our proposed method provides more accurate inference while having much faster run time.

Illustration: Hes1 Model.

The Hes1 model described in the Introduction demonstrates inference on a system with an unobserved component and asynchronous observation times. This section continues the inference of this model. Ref. studied the theoretical oscillation behavior using parameter values a=0.022, b=0.3, c=0.031, d=0.028; e=0.5, f=20, g=0.3, which leads to one oscillation cycle approximately every 2 h. Ref. also set the initial condition at the lowest value of P when the system is in oscillation equilibrium (): P=1.439, M=2.037, H=17.904. The noise level in our simulation is derived from ref. where the SE based on repeated measures is reported to be around 15% of the P (protein) level and M (mRNA) level, so we set the simulation noise to be multiplicative following a log-normal distribution with SD 0.15; throughout this example, we assume the noise level σ is known to be 0.15 from repeated measures reported in ref. . The H component is never observed. Owing to the multiplicative error on the strictly positive system, we apply our method to the log-transformed ODEs, so that the resulting error distributions are Gaussian. To the best of our knowledge, MAGI is the only one that provides a practical and complete solution for handling unobserved component cases like this example.

We generate 2,000 simulated datasets based on the above setup for the Hes1 system. The left-most panel in Fig. 1 shows one example dataset. For each dataset, we use MAGI to infer the trajectories and estimate the parameters. We use the posterior mean of Xt=(P,M,H)t as the inferred trajectories for the system components, which are generated by MAGI without using any numerical solver. Fig. 1 summarizes the inferred trajectories across the 2,000 simulated datasets, showing the median of the inferred trajectories of Xt together with the 95% interval of the inferred trajectories represented by the 2.5 and 97.5% percentiles. The posterior mean of θ=(a,b,c,d,f,e,g) is our estimate of the parameters. Table 1 summarizes the parameter estimates across the 2,000 simulated datasets, by showing their means and SDs. Fig. 1 shows that MAGI recovers the system well, including the completely unobserved H component. Table 1 shows that MAGI also recovers the system parameters well, except for the parameters that only appear in the equation for the unobserved H component, which we will discuss shortly. Together, Fig. 1 and Table 1 demonstrate that MAGI can recover the entire system without any usage of a numerical solver, even in the presence of unobserved component(s).

Parameter inference in the Hes1 partially observed asynchronous system based on 2,000 simulation datasets

| θ | Truth | MAGI | Ref. |

| | Estimate | RMSE | Estimate | RMSE |

| a | 0.022 | 0.021 ± 0.003 | 0.003 | 0.027 ± 0.026 | 0.026 |

| b | 0.3 | 0.329 ± 0.051 | 0.059 | 0.302 ± 0.086 | 0.086 |

| c | 0.031 | 0.035 ± 0.006 | 0.007 | 0.031 ± 0.010 | 0.010 |

| d | 0.028 | 0.029 ± 0.002 | 0.003 | 0.028 ± 0.003 | 0.003 |

| e | 0.5 | 0.552 ± 0.074 | 0.090 | 0.498 ± 0.088 | 0.088 |

| f | 20 | 13.759 ± 3.026 | 6.936 | 604.9 ± 5084.8 | 5,117.0 |

| g | 0.3 | 0.141 ± 0.026 | 0.162 | 1.442 ± 9.452 | 9.519 |

Metrics for assessing the quality of system recovery.

To further assess the quality of the parameter estimates and the system recovery, we consider two metrics. First, as shown in Table 1, we examine the accuracy of the parameter estimates by directly calculating the root mean squared error (RMSE) of the parameter estimates to the true parameter value. We call this measure the parameter RMSE metric. Second, it is possible that a system might be insensitive to some of the parameters; in the extreme case, some parameters may not be fully identifiable given only the observed data and components. In these situations, it is possible that the system trajectories implied by quite distinct parameter values are similar to each other (or even close to the true trajectory). We thus consider an additional trajectory RMSE metric to account for possible parameter insensitivity and measure how well the system components are recovered given the parameter and initial condition estimates. The trajectory RMSE is obtained by treating the numerical ODE solution based on the true parameter value as the ground truth: First, the numerical solver is used to reconstruct the trajectory based on the estimates of the parameter and initial condition (from a given method); then, we calculate the RMSE of this reconstructed trajectory to the true trajectory at the observation time points. We emphasize that the trajectory RMSE metric is only for evaluation purpose to assess (and compare across methods) how well a method recovers the trajectories of the system components and that throughout MAGI, no numerical solver is ever needed.

We summarize the trajectory RMSEs of MAGI in Table 2 for the Hes1 system.

Trajectory RMSEs of the individual components in the Hes1 system, comparing the average trajectory RMSEs of MAGI and ref. over the 2,000 simulated datasets

| Method | P | M | H |

| MAGI | 0.97 | 0.21 | 2.57 |

| Ref. | 1.30 | 0.40 | 59.47 |

We compare MAGI with the benchmark provided by the B spline-based penalization approach of ref. . To the best of our knowledge, among all of the existing methods that do not use numerical integration, ref. is the only one with a software package that can be manually adapted to handle an unobserved component. We note, however, that this package itself is not ready made for this problem: It requires substantial manual input as it does not have default or built-in setup of its hyperparameters for the unobserved component. None of the other benchmark methods, including refs. and , provide software that is equipped to handle an unobserved component. Table 1 compares our estimates against those given by ref. based on the parameter RMSE, which shows that the parameter RMSEs for MAGI are substantially smaller than ref. . Fig. 1 shows that the inferred trajectories from MAGI are very close to the truth. On the contrary, the method in ref. is not able to recover the unobserved component H nor the associated parameters f and g; SI Appendix, Fig. S1 has the plots. Table 2 compares the trajectory RMSE of the two methods. It is seen that the trajectory RMSE of MAGI is substantially smaller than that of ref. . Further implementation details and comparison are provided in SI Appendix.

Finally, we note that MAGI recovers the unobserved component H almost as well as the observed components of P and M, as measured by the trajectory RMSEs. In comparison, for the result of ref. in Table 2, the trajectory RMSE of the unobserved H component is orders of magnitude worse than those of P and M. The numerical results thus illustrate the effectiveness of MAGI in borrowing information from the observed components to infer the unobserved component, which is made possible by explicitly conditioning on the ODE structure. The self-regulating parameter g and inhibition rate parameter f for the unobserved component appear to have high inference variation across the simulated datasets despite the small trajectory RMSEs. This suggests that the system itself could be insensitive to f and g when the H component is unobserved.

Comparison with Previous Methods Based on GPs.

To further assess MAGI, we compare with two methods: adaptive gradient matching (AGM) of ref. and fast Gaussian process-based gradient matching (FGPGM) of ref. , representing the state of the art of inference methods based on GPs. For fair comparison, we use the same benchmark systems, scripts, and software provided by the authors for performance assessment and run the software using the settings recommended by the authors. The benchmark systems include the FitzHugh–Nagumo (FN) equations () and a protein transduction model ().

FN model.

The FN equations are a classic ion channel model that describes spike potentials. The system consists of X=(V,R), where V is the variable defining the voltage of the neuron membrane potential and R is the recovery variable from neuron currents, satisfying the ODE

where

θ=(a,b,c) are the associated parameters. As in refs. and , the true parameters are set to

a=0.2,b=0.2,c=3, and we generate the true trajectories for this model using a numerical solver with initial conditions

V=−1,

R=1.

To compare MAGI with FGPGM of ref. and AGM of ref. , we simulated 100 datasets under the noise setting of σV=σR=0.2 with 41 observations. The noise level is chosen to be on similar magnitude with that of ref. , and the noise level is set to be the same across the two components as the implementation of ref. can only handle equal-variance noise. The number of repetitions (i.e., 100) is set to be the same as ref. due to the high computing time of these alternative methods.

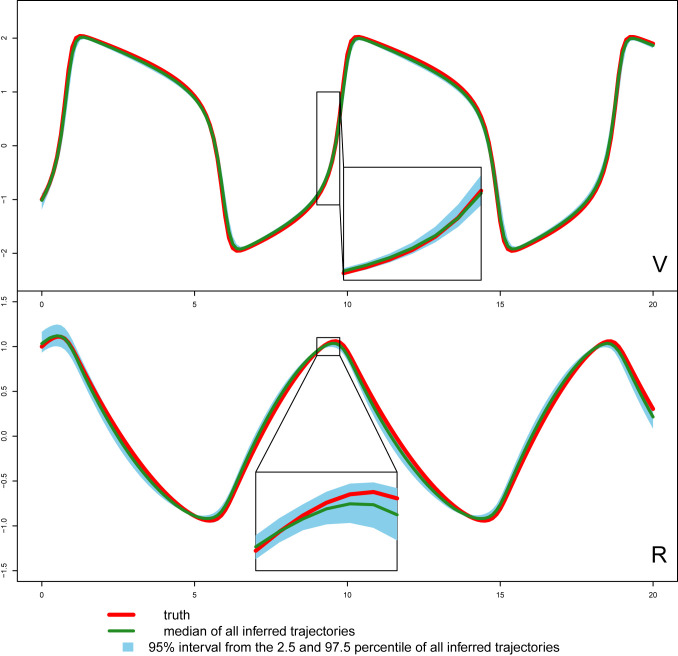

The parameter estimation results from the three methods are summarized in Table 3, where MAGI has the lowest parameter RMSEs among the three. Fig. 2 shows the inferred trajectories obtained by our method: MAGI recovers the system well, and the 95% interval band is so narrow around the truth that we can only see the band clearly after magnification (as shown in Fig. 2, Insets). SI Appendix provides visual comparison of the inferred trajectories of different methods, where MAGI gives the most consistent results across the simulations. Furthermore, to assess how well the methods recover the system components, we calculated the trajectory RMSEs, and the results are summarized in Table 4, where MAGI significantly outperforms the others, reducing the trajectory RMSE over the best alternative method for 60% in V and 25% in R. We note that compared with the true parameter value, all three methods show some bias in the parameter estimates, which is partly due to the GP prior as discussed in ref. , and MAGI appears to have the smallest bias.

Parameter inference in the FN model based on 100 simulated datasets

| θ | MAGI | FGPGM () | AGM () |

| Estimate | RMSE | Estimate | RMSE | Estimate | RMSE |

| a | 0.19 ± 0.02 | 0.02 | 0.22 ± 0.04 | 0.05 | 0.30 ± 0.03 | 0.10 |

| b | 0.35 ± 0.09 | 0.17 | 0.32 ± 0.13 | 0.18 | 0.36 ± 0.06 | 0.17 |

| c | 2.89 ± 0.06 | 0.13 | 2.85 ± 0.15 | 0.21 | 2.04 ± 0.14 | 0.97 |

Fig. 2.

Inferred trajectories by MAGI for each component of the FN system over 100 simulated datasets. The Top is the V component and the Bottom is the R component. The blue shaded area represents the 95% interval. Insets magnify the corresponding segments.

Trajectory RMSEs of each component in the FN system, comparing the average trajectory RMSE of the three methods over 100 simulated datasets

| Method | V | R |

| MAGI | 0.103 | 0.070 |

| FGPGM () | 0.257 | 0.094 |

| AGM () | 1.177 | 0.662 |

For computing cost, the average run time of MAGI for this system over the repetitions is 3 min, which is 145 times faster than FGPGM () and 90 times faster than AGM () on the same processor (we follow the authors’ recommendation for running their methods) (SI Appendix has details).

Protein transduction model.

This protein transduction example is based on systems biology where components S and Sd represent a signaling protein and its degraded form, respectively. In the biochemical reaction, S binds to protein R to form the complex SR, which enables the activation of R into Rpp. X=(S,Sd,R,SR,Rpp) satisfies the ODE

where

θ=(k1,k2,k3,k4,V,Km) are the associated rate parameters.

We follow the same simulation setup as refs. and by taking t=0,1,2,4,5,7,10,15,20,30,40,50,60,80,100 as the observation times, X(0)=(1,0,1,0,0) as the initial values, and θ=(0.07,0.6,0.05,0.3,0.017,0.3) as the true parameter values. Two scenarios for additive observation noise are considered: σ=0.001 (low noise) and σ=0.01 (high noise). Note that the observation times are unequally spaced, with only a sparse number of observations from t=20 to t=100. Further, inference for this system has been noted to be challenging due to the nonidentifiability of the parameters, in particular Km and V (). Therefore, the parameter RMSE is not meaningful for this system, and we focus our comparison on the trajectory RMSE.

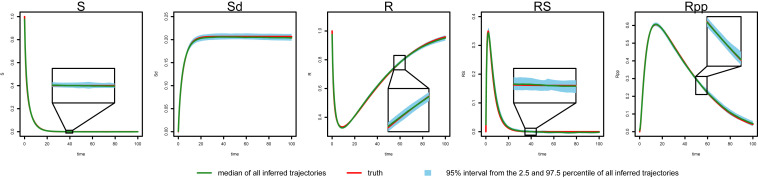

We compare MAGI with FGPGM of ref. and AGM of ref. on 100 simulated datasets for each noise setting (SI Appendix has method and implementation details). We plot the inferred trajectories of MAGI in the high-noise setting in Fig. 3, which closely recover the system. The 95% interval band from MAGI is quite narrow that for most of the inferred components, we need magnifications (as shown in Fig. 3, Insets) to clearly see the 95% band. We then calculated the trajectory RMSEs, and the results are summarized in Table 5 for each system component. In both noise settings, MAGI produces trajectory RMSEs that are uniformly smaller than both FGPGM () and AGM () for all system components. In the low-noise setting, the advantage of MAGI is especially apparent for components S, R, SR, and Rpp, with trajectory RMSEs less than half of the closest comparison method. For the high-noise setting, MAGI reduces trajectory RMSE the most for Sd and Rpp (∼50%). AGM () struggles with this example at both noise settings. To visually compare the trajectory RMSEs in Table 5, plots of the corresponding reconstructed trajectories by different methods at both noise settings are given in SI Appendix.

Fig. 3.

Inferred trajectories by MAGI for each component of the protein transduction system in the high-noise setting. The red line is the truth, and the green line is the median inferred trajectory over 100 simulated datasets. The blue shaded area represents the 95% interval. Insets magnify the corresponding segment.

Trajectory RMSEs of the individual components in the protein transduction system, by comparing the average RMSEs of the three methods over 100 simulated datasets

| Method | S | Sd | R | SR | Rpp |

| Low-noise case, σ=0.001 | | | | | |

| MAGI | 0.0020 | 0.0013 | 0.0040 | 0.0017 | 0.0036 |

| FGPGM () | 0.0049 | 0.0016 | 0.0156 | 0.0036 | 0.0149 |

| AGM () | 0.0476 | 0.2881 | 0.3992 | 0.0826 | 0.2807 |

| High-noise case, σ=0.01 | | | | | |

| MAGI | 0.0122 | 0.0043 | 0.0167 | 0.0135 | 0.0136 |

| FGPGM () | 0.0128 | 0.0089 | 0.0210 | 0.0136 | 0.0309 |

| AGM () | 0.0671 | 0.3125 | 0.4138 | 0.0980 | 0.2973 |

The run time of MAGI for this system averaged over the repetitions is 18 min, which is 12 times faster than FGPGM () and 18 times faster than AGM () on the same processor (we follow the authors’ recommendation for running their methods) (SI Appendix has details).

Discussion

We have presented a methodology for the inference of dynamic systems, using manifold-constrained GPs. A key feature that distinguishes our work from the previous approaches is that it provides a principled statistical framework, firmly grounded on the Bayesian paradigm. Our method also outperformed currently available GP-based approaches in the accuracy of inference on benchmark examples. Furthermore, the computation time for our method is much faster. Our method is robust and able to handle a variety of challenging systems, including unobserved components, asynchronous observations, and parameter nonidentifiability.

A robust software implementation is provided, with user interfaces available for R, MATLAB, and Python, as described in SI Appendix. The user may specify custom ODE systems in any of these languages for inference with our package by following the syntax in the examples that accompany this article. In practice, inference with MAGI using our software can be carried out with relatively few user interventions. The setting of hyperparameters and initial values is fully automatic, although may be overridden by the user.

The main setting that requires some tuning is the number of discretization points in I. In our examples, this was determined by gradually increasing the denseness of the points with short sampler runs, until the results become indistinguishable. Note that further increasing the denseness of I has no ill effect, apart from increasing the computational time. To illustrate the effect of the denseness of I on MAGI inference results, an empirical study is included in SI Appendix, Varying Number of Discretization, where we examined the results of the FN model with the discretization set I taken to be 41, 81, 161, and 321 equally spaced points. The results confirm that our proposal of gradually increasing the denseness of I works well. The inference results improve as we increase I from 41 to 161 points, and at 161 points, the results are stabilized. If we further increase the discretization to 321 points, that doubles the compute time with only a slight gain in accuracy compared with 161 points in terms of trajectory RMSEs. This empirical study also indicates that as WI becomes an increasingly dense approximation of W, an inference limit would be expected. A theoretical study is a natural future direction of investigation.

We also investigated the stability of MAGI when the observation time points are farther apart. This empirical study, based on the FN model with 21 observations, is included in SI Appendix, FN Model with Fewer Observations. The inferred trajectories from the 21 observations are still close to the truth, while the interval bands become wider, which is expected as we have less information in this case. We also found that the denseness of the discretization needs to be increased (to 321 time points in this case) to compensate for the sparser 21 observations.

An inherent feature of the GP approximation is the tendency to favor smoother curves. This limitation has been previously acknowledged (, ). As a consequence, two potential forms of bias can exist. First, estimates derived from the posterior distributions of the parameters may have some statistical bias. Second, the trajectories reconstructed by a numerical solver based on the estimated parameters may differ slightly from the inferred trajectories. MAGI, which is built on a GP framework, does not entirely eliminate these forms of bias. However, as seen in the benchmark systems, the magnitude of our bias in both respects is significantly smaller than the current state of the art in refs. and .

We considered the inference of dynamic systems specified by ODEs in this article. Such deterministic ODE models are often adequate to describe dynamics at the aggregate or population level (). However, when the goal is to describe the behavior of individuals [e.g., individual molecules (, )], models such as stochastic differential equations (SDEs) and continuous-time Markov processes, which explicitly incorporate intrinsic (stochastic) noise, often become the model of choice. Extending our method to the inference of SDEs and continuous-time Markov models is a future direction we plan to investigate. Finally, recent developments in deep learning have shown connections between deep neural networks and GPs (, ). It could thus also be interesting to explore the application of neural networks to model the ODE system outputs x(t) in conjunction with GPs.

Materials and Methods

For notational simplicity, we drop the dimension index d in this section when the meaning is clear.

Algorithm Overview.

We begin by summarizing the computational scheme of MAGI. Overall, we use HMC () to obtain samples of XI and the parameters from their joint posterior distribution. Details of the HMC sampling are included in SI Appendix, Hamiltonian Monte Carlo. At each iteration of HMC, XI and the parameters are updated together with a joint gradient, with leapfrog step sizes automatically tuned during the burn-in period to achieve an acceptance rate between 60 and 90%. At the completion of HMC sampling (and after discarding an appropriate burn-in period for convergence), we take the posterior means of XI as the inferred trajectories and the posterior means of the sampled parameters as the parameter estimates. The techniques we use to temper the posterior and speed up the computations are discussed in Prior Tempering and SI Appendix, Techniques for Computational Efficiency.

Several steps are taken to initialize the HMC sampler. First, we apply a GP fitting procedure to obtain values of ϕ and σ for the observed components; the computed values of the hyperparameters ϕ are subsequently held fixed during the HMC sampling, while the computed value of σ is used as the starting value in the HMC sampler (if σ is known, the GP fitting procedure is used to obtain values of ϕ only). Second, starting values of XI for the observed components are obtained by linearly interpolating between the observation time points. Third, starting values for the remaining quantities—θ and (XI,ϕ) for any unobserved component(s)—are obtained by optimization of the posterior as described below.

Setting Hyperparameters ϕ for Observed Components.

The GP prior Xd(t)∼GP(μd,Kd), t∈[0,T], is on each component Xd(t) separately. The GP Matern kernel K(l)=ϕ121−νΓ(ν)2νlϕ2νBν2νlϕ2 has two hyperparameters that are held fixed during sampling: ϕ1 controls overall variance level of the GP, while ϕ2 controls the bandwidth for how much neighboring points of the GP affect each other.

When the observation noise level σ is unknown, values of (ϕ1,ϕ2,σ) are obtained jointly by maximizing GP fitting without conditioning on any ODE information, namely

where

yI0|ϕ,σ∼N(0,Kϕ+σ2). The index set

I0 is the smallest evenly spaced set such that all observation time points in this component are in

I0 (i.e.,

τ⊆I0). The priors

πΦ1(ϕ1) and

πσ(σ2) for the variance parameter

ϕ1 and

σ are set to be flat. The prior

πΦ2(ϕ2) for the bandwidth parameter

ϕ2 is set to be a Gaussian distribution. 1) The mean

μΦ2 is set to be half of the period corresponding to the frequency that is the weighted average of all of the frequencies in the Fourier transform of

y on

I0 (the values of

y on

I0 are linearly interpolated from the observations at

τ), where the weight on a given frequency is the squared modulus of the Fourier transform with that frequency, and 2) the SD is set such that

T is three SDs away from

μΦ2. This Gaussian prior on

ϕ2 serves to prevent it from being too extreme. In the subsequent sampling of

(θ,Xτ,σ2), the hyperparameters

ϕ are fixed at

ϕ~, while

σ~ gives the starting value of

σ in the HMC sampler.

If σ is known, then values of (ϕ1,ϕ2) are obtained by maximizing

and held fixed at

ϕ~ in the subsequent HMC sampling of

(θ,Xτ). The priors for

ϕ1 and

ϕ2 are the same as previously defined.

Initialization of XI for the Observed Components.

To provide starting values of XI for the HMC sampler, we use the values of Yτ at the observation time points and linearly interpolate the remaining points in I.

Initialization of the Parameter Vector θ When All System Components Are Observed.

To provide starting values of θ for the HMC sampler, we optimize the posterior as a function of θ alone, holding XI and σ unchanged at their starting values, when there is no unobserved component(s). The optimized θ is then used as the starting value for the HMC sampler in this case.

Settings in the Presence of Unobserved System Components: Setting ϕ, Initializing XI for Unobserved Components, and Initializing θ.

Separate treatment is needed for the setting of ϕ and initialization of (θ, XI) for the unobserved component(s). We use an optimization procedure that seeks to maximize the full posterior in as a function of θ together with ϕ and the whole curve of XI for unobserved components while holding the σ, ϕ, and XI for the observed components unchanged at their initial value discussed above. We thereby set ϕ for the unobserved component and the starting values of θ and XI for unobserved components at the optimized value. In the subsequent sampling, the hyperparameters are fixed at the optimized ϕ, while the HMC sampling starts at the θ and the XI obtained by this optimization.

Prior Tempering.

After ϕ is set, we use a tempering scheme to control the influence of the GP prior relative to the likelihood during HMC sampling. Note that can be written as

As the cardinality of

|I| increases with more discretization points, the prior part

pΘ,X(I)|WI(θ,x(I)|WI=0) grows, while the likelihood part

pY(τ)|X(τ)(y(τ)|x(τ)) stays unchanged. Thus, to balance the influence of the prior, we introduce a tempering hyperparameter

β with the corresponding posterior

A useful setting that we recommend is β=D|I|/N, where D is the number of system components, |I| is the number of discretization time points, and N=∑d=1DNd is the total number of observations. This setting aims to balance the likelihood contribution from the observations with the total number of discretization points.

Inference of dynamic systems from noisy and sparse data via manifold-constrained Gaussian processes

Inference of dynamic systems from noisy and sparse data via manifold-constrained Gaussian processes