Competing Interests: The authors have declared that no competing interests exist.

- Altmetric

- 1 Introduction

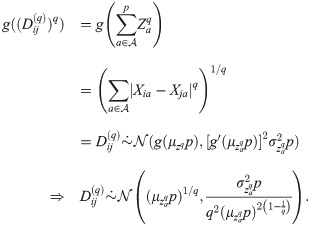

- 2 Limit distribution for Lq on null data

- 3 Lq metric moments for continuous data distributions

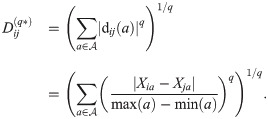

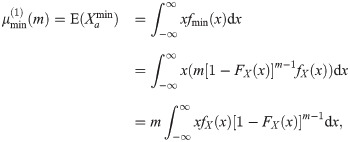

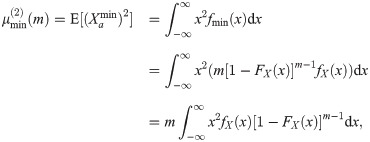

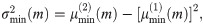

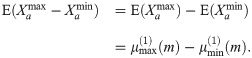

- 4 Moments for max-min normalized Lq metric

- 5 GWAS distance distributions

- 6 Time series correlation-based distance distribution

- 7 Comparison of theoretical and sample moments

- 8 Effects of correlation on distances

- 9 Feature selection with distance distribution-informed nearest neighbors

- 10 Discussion

- Supporting information

The performance of nearest-neighbor feature selection and prediction methods depends on the metric for computing neighborhoods and the distribution properties of the underlying data. Recent work to improve nearest-neighbor feature selection algorithms has focused on new neighborhood estimation methods and distance metrics. However, little attention has been given to the distributional properties of pairwise distances as a function of the metric or data type. Thus, we derive general analytical expressions for the mean and variance of pairwise distances for Lq metrics for normal and uniform random data with p attributes and m instances. The distribution moment formulas and detailed derivations provide a resource for understanding the distance properties for metrics and data types commonly used with nearest-neighbor methods, and the derivations provide the starting point for the following novel results. We use extreme value theory to derive the mean and variance for metrics that are normalized by the range of each attribute (difference of max and min). We derive analytical formulas for a new metric for genetic variants, which are categorical variables that occur in genome-wide association studies (GWAS). The genetic distance distributions account for minor allele frequency and the transition/transversion ratio. We introduce a new metric for resting-state functional MRI data (rs-fMRI) and derive its distance distribution properties. This metric is applicable to correlation-based predictors derived from time-series data. The analytical means and variances are in strong agreement with simulation results. We also use simulations to explore the sensitivity of the expected means and variances in the presence of correlation and interactions in the data. These analytical results and new metrics can be used to inform the optimization of nearest neighbor methods for a broad range of studies, including gene expression, GWAS, and fMRI data.

1 Introduction

Statistical models can deviate from expected behavior depending on whether certain properties of the underlying data are satisfied, such as being normally distributed. The expected behavior of nearest neighbor models is further influenced by the choice of metric, such as Euclidean or Manhattan. For random normal data (), for example, the variance of the pairwise distances of a Manhattan metric is proportional to the number of attributes (p) whereas the variance is constant for a Euclidean metric. Relief methods [1–3] and nearest-neighbor projected-distance regression (NDPR) [4] use nearest neighbors to compute attribute importance scores for feature selection and often use adaptive neighborhoods that rely on the mean and variance of the distance distribution. The ability of this class of methods to identify association effects, like main effects or interaction effects, depends on parameters such as neighborhood radii or number of neighbors k [5, 6]. Thus, knowing the expected pairwise distance values for a given metric and data distribution may improve the performance of these feature selection methods by informing the choice of neighborhood parameters.

For continuous data, the metrics most commonly used in nearest neighbor methods are Lq with q = 1 (Manhattan) or q = 2 (Euclidean). For data from standard normal (

In addition to the novel moment estimates using extreme value theory, we also derive novel asymptotic results for metrics we recently developed for genome-wide association study (GWAS) data [7]. Various metrics have been developed for feature selection and for computing similarity between individuals based on shared genetic variation in GWAS data. We build on the mathematical formalism for continuous data to derive the asymptotic properties of various categorical (genotypic) data metrics for GWAS. We derive asymptotic formulas for the mean and variance for three recently introduced GWAS metrics [7]. These metrics were developed for Relief-based feature selection to account for binary genotype differences (two levels), allelic differences (three levels), and transition/transversion differences (five levels). The mean and variance expressions we derive for these multi-level categorical data types are parameterized by the minor allele frequency and the transition/transversion ratio.

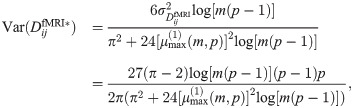

We also introduce a novel metric for correlation data computed from time series, which is motivated by the application of resting-state functional MRI (rs-fMRI) data. We further derive asymptotic estimates for the mean and variance of distance distributions for this new metric. Unlike structural MRI (magnetic resonance imaging) of the brain, which produces a high resolution static image, rs-fMRI produces time-series brain activity. The correlation of this activity between pairs of brain Regions of Interest (ROIs) can be computed from the time series and the pairs used as attributes for machine learning and feature selection [8–11]. An ROI is composed of many smaller brain volumes known as voxels, which may be used as the spatial units, but typically ROIs are used that correspond to larger collections of voxels with known function for emotion or cognition.

For a given subject in an rs-fMRI study, a correlation matrix is computed between ROIs from the ROI time series, resulting in an overall dataset composed of ROI-ROI pairwise correlations for each of the m subjects. Nearest-neighbor based feature selection was applied to rs-fMRI with the private evaporative cooling method [12], where the predictors were pairwise correlations between ROIs. The use of pairwise correlation predictors is a common practice because of convenience for detection of differential connectivity between brain regions that may be of biological importance [13]. However, one may be interested in the importance of attributes at the individual ROI level. The new metric in the current study may be used in NPDR [4] feature selection or other machine learning methods for rs-fMRI correlation matrices to provide attribute importance at the level of individual ROIs. This metric is applicable to general time-series derived correlation data.

To summarize the contributions of this study, we provide multiple resources and novel results. We provide a summary of the asymptotic means and variances of pairwise distances for commonly used metrics and data types. In addition, we provide the mathematical details for deriving these quantities. We derive novel analytical results for range-normalized metrics using extreme value theory. We derive novel analytical results for new metrics for GWAS data. Most asymptotic analysis is for continuous data, but GWAS data is categorical, which requires slightly different approaches. We introduce a novel metric for correlation data derived from rs-fMRI time series, and we derive the metric’s analytical means and variances. We test the accuracy of analytical formulas for means and variances under various simulated conditions, including correlation.

In Section 2, we introduce preliminary notation and apply the Central Limit Theorem (CLT) and the Delta Method to derive asymptotics for pairwise distances. In Section 3, we present general derivations for continuously distributed data sets with m instances and p attributes. Using our more general results, we then consider the special cases of standard normal (

2 Limit distribution for Lq on null data

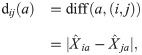

For continuously distributed data, nearest-neighbor feature selection algorithms most commonly define distance between instances (

2.1 Nearest-neighbor projected-distance regression

Like other nearest-neighbor feature selection algorithms, the performance of NPDR depends on appropriate choice of neighborhood optimization criteria. The size of neighborhoods must be chosen appropriately for optimal detection of important statistical effects. It has been shown using simulations that neighborhood size should be as large as possible to optimally detect main effects, whereas smaller neighborhoods are necessary to detecting interactions [6]. NPDR allows for any neighborhood algorithm to be used, such as fixed or adaptive k, and fixed or adaptive radius. Especially in the case of radius methods, one needs some sense of central tendency with respect to pairwise distances between a given target instance and its neighbors. Similar to the radius problem, for fixed-k neighborhoods we need to choose k so that the average distance within neighborhoods is not too large or too small with respect to the empirical average pairwise distance between pairs of instances. In order for the appropriate choice of neighborhood size to be made, we need to know the central tendency and scale of the distance distribution generated on our data.

Although we do not use NPDR in the current study, it is an important motivation for derivations herein, so we briefly describe how NPDR computes importance scores for classification problems. In the case of dichotomous outcomes, NPDR estimates regression coefficients of the following model

All derivations in the following sections are applicable to nearest-neighbor distance-based methods in general, which includes not only NPDR, but also Relief-based algorithms. Each of these methods uses a distance metric (Eq 1) to compute neighbors for each instance

We proceed in the following section by applying the Classical Central Limit Theorem and the Delta Method to derive the limit distribution of pairwise distances on any data distribution that is induced by the standard distance metric (Eq 1). We assume independent samples in order to derive closed-form moment estimates and to show that distances are asymptotically normal. In real data, it is obviously not the case that samples or attributes will be independent; however, the normality assumption for distances is approximately satisfied in a large number of cases. For example, it has been shown using 100 real gene expression data sets from microarrays, that approximately 80% of the data sets are either approximately normal or log-normal in distribution [14]. We generated Manhattan distances (Eq 1, q = 1) on 99 of the same 100 gene expression data sets after applying a pre-processing pipeline. We excluded GSE67376 because this data included only a single sample. Before generating distance matrices, we transformed the data using quantile normalization, removed genes with high coefficient of variation, and standardized samples to have zero mean and unit variance.

We computed densities for each distance matrix, as well as quantile-quantile plots to visually assess normality (S26-S124 Figs in S1 File). The estimated densities and quantile-quantile plots indicate that most of the gene expression data sets yield approximately normally distributed distances between instances. Another example involves real resting-state fMRI data from a study of mood and anxiety disorders [15], where the data was generated both from a spherical ROI parcellation [16] and a graph theoretic parcellation [17]. The data consists of correlation matrices between ROI time series with respect to each parcellation and each subject. Each subject correlation matrix, excluding the diagonal entries, was vectorized and combined into a single matrix containing all subject ROI correlations. We then applied a Fisher r-to-z transformation and standardized samples to be zero mean and unit variance. The output of this process was two data matrices corresponding to each parcellation, respectively. Analogous to the gene expression microarray data, we computed Manhattan distance matrices for each of the two resting-state fMRI data sets. We generated quantile-quantile and density plots for each matrix (S125 and S126 Figs in S1 File). Both sets of pairwise distances were approximately normal.

2.2 Asymptotic normality of pairwise distances

Suppose that

It is clear that |Xia − Xja|q = |dij(a)|q is another random variable, so we let

Furthermore, the collection

Consider the smooth function g(z) = z1/q, which is continuously differentiable for z > 0. Assuming that

Therefore, the distance between two fixed, distinct instances i and j (Eq 1) is asymptotically normal. In particular, when q = 2, the distribution of

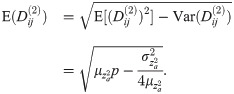

The distribution of pairwise distances convergences quickly to a Gaussian for Euclidean (q = 2) and Manhattan (q = 1) metrics as the number of attributes p increases (Fig 1). We compute the distance between all pairs of instances in simulated datasets of uniformly distributed random data. We simulate data with fixed m = 100 instances, and, by varying the number of attributes (p = 10, 100, 10000), we observe rapid convergence to Gaussian. For p as low as 10 attributes, Gaussian is a good approximation. The number of attributes in bioinformatics data is typically quite large, at least on the order of 103. The Shapiro-Wilk statistic approaches 1 more rapidly for the Euclidean than Manhattan, which may indicate more rapid convergence in the case of Euclidean. This may be partly due to Euclidean’s use of the square root, which is a common transformation of data in statistics.

Convergence to Gaussian for Manhattan and Euclidean distances for simulated standard uniform data with m = 100 instances and p = 10, 100, and 10000 attributes.

Convergence to Gaussian occurs rapidly with increasing p, and Gaussian is a good approximation for p as low as 10 attributes. The number of attributes in bioinformatics data is typically much larger, at least on the order of 103. The Euclidean metric has stronger convergence to normal than Manhattan. P values from Shapiro-Wilk test, where the null hypothesis is a Gaussian distribution.

To show asymptotic normality of distances, we did not specify whether the data distribution

For distance based learning methods, all pairwise distances are used to determine relative importances for attributes. The collection of all distances above the diagonal in an m × m distance matrix does not satisfy the independence assumption used in the previous derivations. This is because of the redundancy that is inherent to the distance matrix calculation. However, this collection is still asymptotically normal with mean and variance approximately equal to those we have previously given (Eq 8). In the next section, we assume actual data distributions in order to define more specific general formulas for standard Lq and max-min normalized Lq metrics. We also derive asymptotic moments for a new discrete metric in GWAS data and a new metric for time series correlation-based data, such as, resting-state fMRI.

3 Lq metric moments for continuous data distributions

In this section, we derive general formulas for asymptotic means and variances of the Lq distance (Eq 1) for standard normal and standard uniform data. With our general formulas for continuous data, we compute moments associated with Manhattan (L1) and Euclidean (L2) metrics. In the subsequent section, we combine the asymptotic analysis of this section with extreme value theory (EVT) to derive mean and variance formulas for the more complicated max-min normalized version of the Lq distance, where the magnitude difference (Eq 2) is divided by the range of each attribute a.

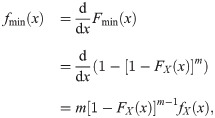

3.1 Distribution of |dij(a)|q = |Xia − Xja|q

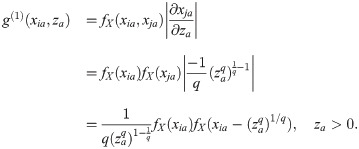

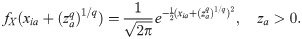

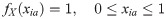

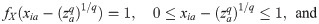

Suppose that

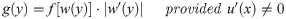

Theorem 3.1 Let f(x) be the value of the probability density of the continuous random variable X at x. If the function given by y = u(x) is differentiable and either increasing or decreasing for all values within the range of X for which f(x)≠0, then, for these values of x, the equation y = u(x) can be uniquely solved for x to give x = w(y), and for the corresponding values of y the probability density of Y = u(X) is given by

Elsewhere, g(y) = 0.

We have the following cases that result from solving for Xja in the equation given by

Suppose that

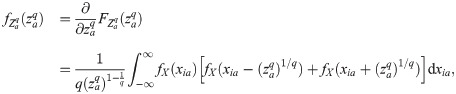

The density function

Suppose that

The density function

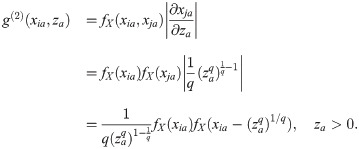

Let

Then it follows from fundamental rules of probability that

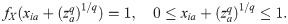

It follows directly from the previous result (Eq 16) that the density function of the random variable

Using the previous result (Eq 17), we can compute the mean and variance of the random variable

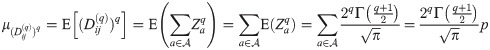

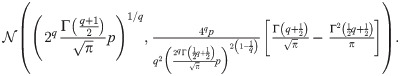

It follows immediately from the mean (Eq 18) and variance (Eq 19) and the Classical Central Limit Theorem (CCLT) that

Applying the convergence result we derived previously (Eq 8), the distribution of

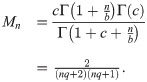

3.1.1 Standard normal data

If

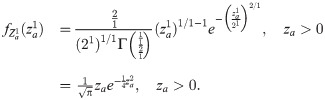

Substituting these marginal densities (Eqs 22–24) into the general density function for

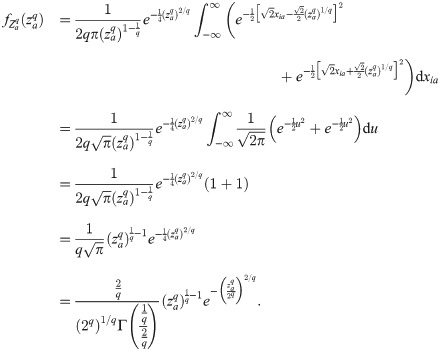

The density function given previously (Eq 25) is a Generalized Gamma density with parameters

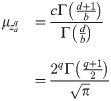

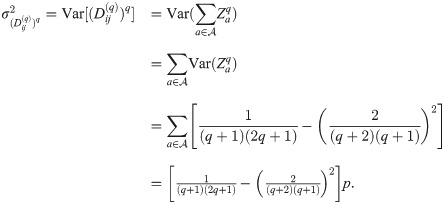

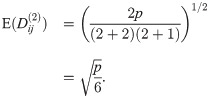

By linearity of the expected value and variance operators under the iid assumption, the mean (Eq 26) and variance (Eq 27) of the random variable

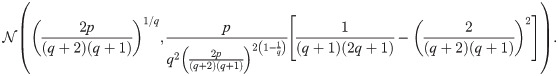

Therefore, the asymptotic distribution of

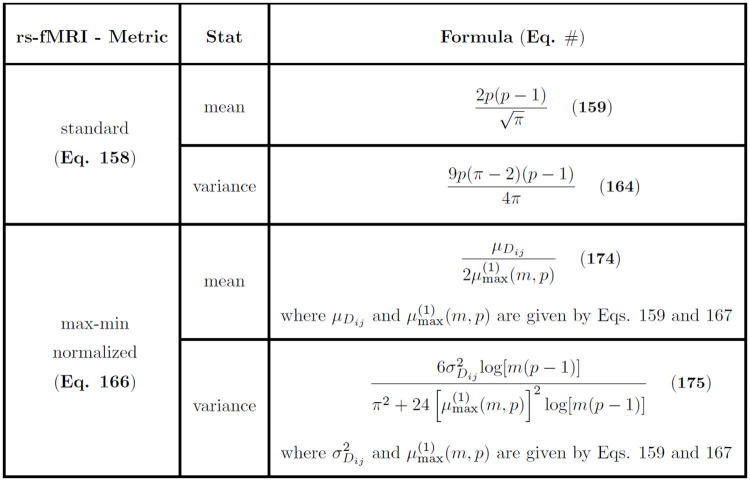

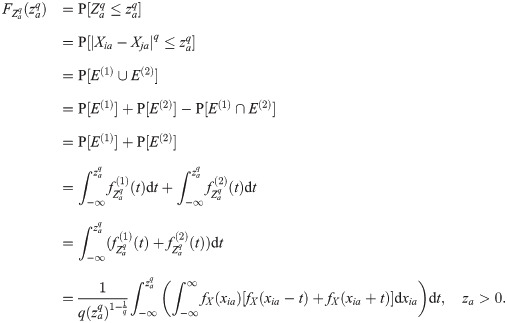

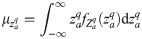

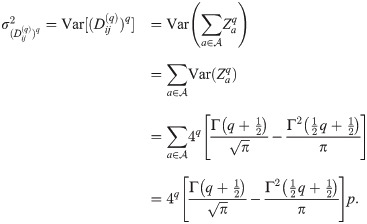

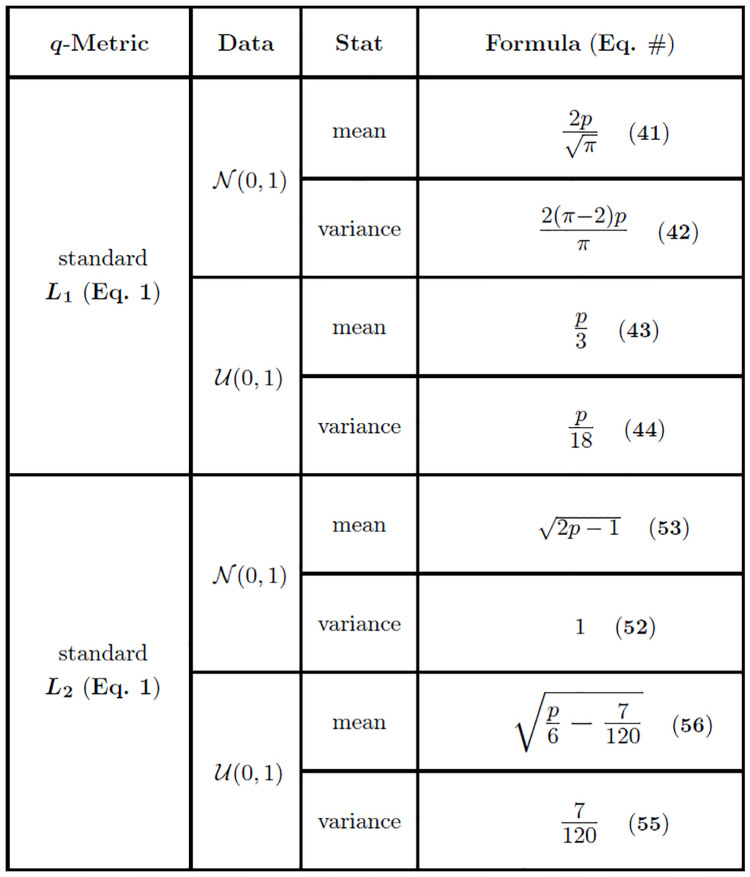

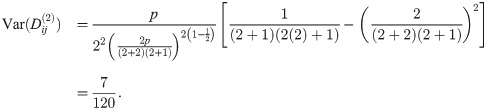

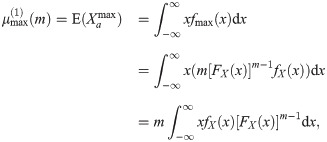

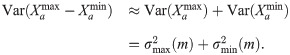

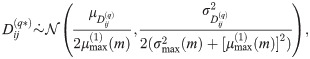

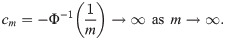

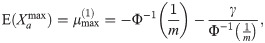

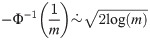

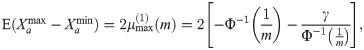

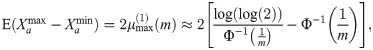

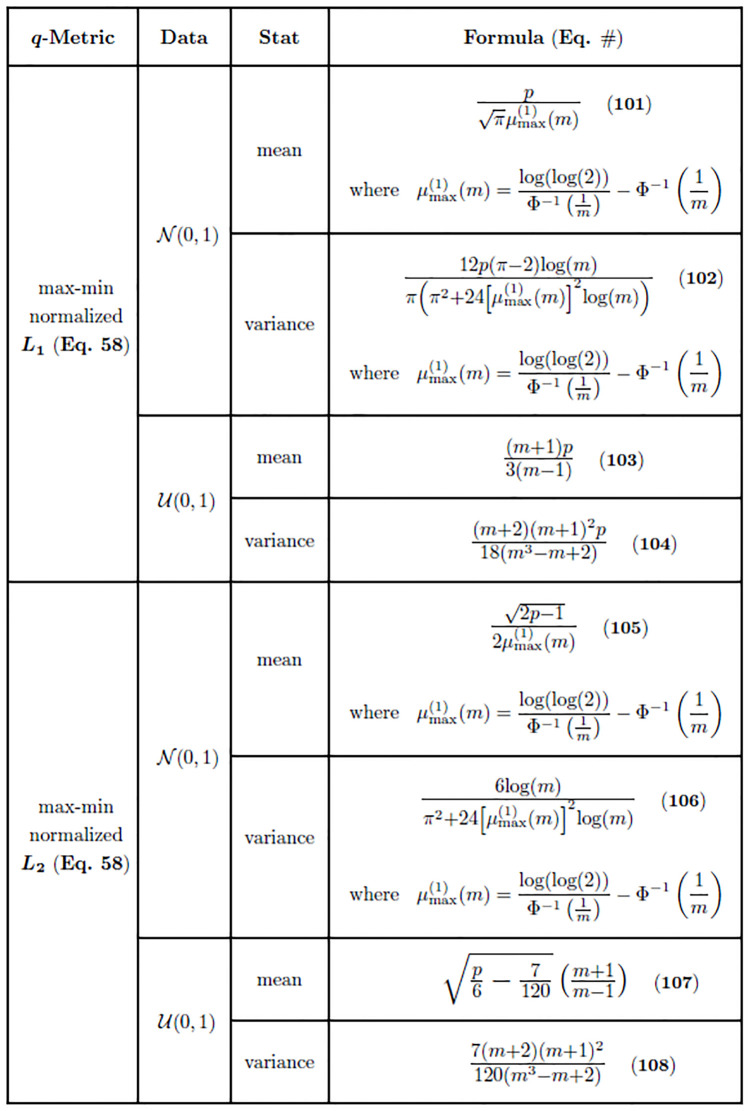

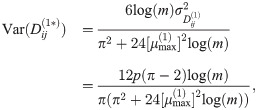

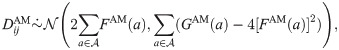

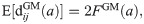

As a useful reference, we tabulate the moment estimates (Eq 30) for the Lq metric on standard normal and uniform data (Fig 2). The derivations for standard uniform data are given in the next subsection. The table is organized by data type (normal or uniform), type of statistic (mean or variance), and corresponding asymptotic formula.

Summary of distance distribution derivations for standard normal (

Asymptotic estimates are given for both standard (Eq 1) and max-min normalized (Eq 58) q-metrics. These estimates are relevant for all

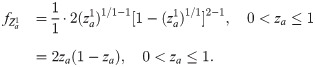

3.1.2 Standard uniform data

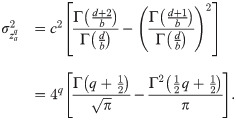

If

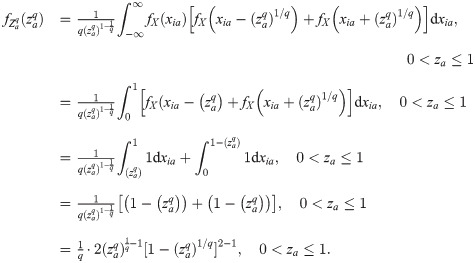

Substituting these marginal densities (Eqs 31–33) into the more general density function for

The previous density (Eq 34) is a Kumaraswamy density with parameters

Using this MGF (Eq 35), the mean and variance of

By linearity of the expected value and variance operators under the iid assumption, the mean (Eq 36) and variance (Eq 37) of the random variable

Therefore, the asymptotic distribution of

As previously noted, we tabulate the moment estimates (Eq 40) for the Lq metric on standard uniform data along with standard normal data (Fig 2). The summary is organized by data type (normal or uniform), type of statistic (mean or variance), and corresponding asymptotic formula. In the next subsections, we show the asymptotic moments of the distance distribution for standard normal and standard uniform data for the special case of Manhattan (q = 1) and Euclidean (q = 2) metrics. These are the most commonly applied metrics in the context of nearest-neighbor feature selection, so they are of particular interest.

3.2 Manhattan (L1)

With our general formulas for the asymptotic mean and variance (Eqs 30 and 40) for any value of

3.2.1 Standard normal data

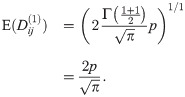

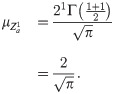

Substituting q = 1 into the asymptotic formula for the mean Lq distance (Eq 30), we have the following for expected L1 distance between two independently sample instances

We see in the formula for the expected Manhattan distance (Eq 41) that

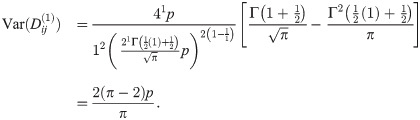

Substituting q = 1 into the formula for the asymptotic variance of

Similar to the mean (Eq 41), the limiting variance of

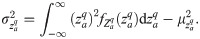

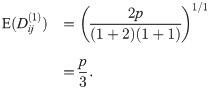

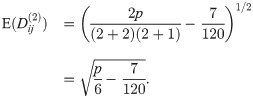

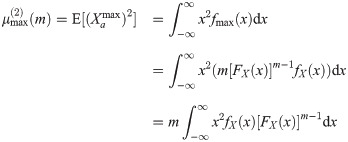

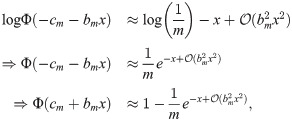

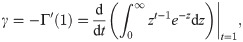

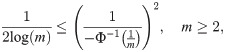

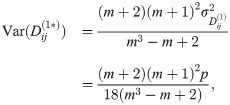

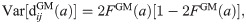

Asymptotic estimates of means and variances for the standard L1 and L2 (q = 1 and q = 2 in Fig 2) distance distributions.

Estimates for both standard normal (

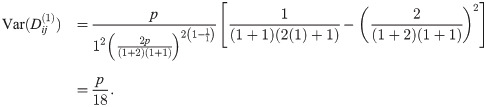

3.2.2 Standard uniform data

Substituting q = 1 into the asymptotic formula of the mean (Eq 40), we have the following for the expected L1 distance between two independently sampled instances

Once again, we see that the mean of

Substituting q = 1 into the formula of the asymptotic variance of

As in the case of the L1 metric on standard normal data, we have a variance (Eq 44) that grows on the order of p. The distances between points in high-dimensional uniform data become more widely dispersed with this metric. The summary of moment estimates given in this section (Eqs 43 and 44) is organized by metric, data type, statistic (mean or variance), and asymptotic formula (Fig 3).

3.2.3 Distribution of one-dimensional projection of pairwise distance onto an attribute

In nearest-neighbor distance-based feature selection like NPDR and Relief-based algorithms, the one-dimensional projection of the pairwise distance onto an attribute (Eq 2) is particularly fundamental to feature quality for association with an outcome. For instance, this distance projection is the predictor used to determine beta coefficients in NPDR. In particular, understanding distributional properties of the projected distances is necessary for defining pseudo P values for NPDR. In this section, we summarize the exact distribution of the one-dimensional projected distance onto an attribute

In previous sections, we derived the exact density function (Eq 17) and moments (Eqs 18 and 19) for the distribution of

Assuming data is standard normal, we substitute q = 1 into the density function of

The mean corresponding to this Generalized Gamma density is computed by substituting q = 1 into the formula for the mean of

Substituting q = 1 into Eq 27 for the variance, we have the following

These last few results (Eqs 45–47) provide us with the distribution for NPDR predictors when the data is from the standard normal distribution. We show density curves for q = 1, 2, …, 5 for the one-dimensional projection for standard normal data (S22 A Fig in S1 File).

If we have standard uniform data, we substitute q = 1 into the density function of

The mean corresponding to this Kumaraswamy density is computed by substituting q = 1 into the formula for the mean of

Substituting q = 1 into the formula for the variance of

In the event that the data distribution is standard uniform, the density function (Eq 48), the mean (Eq 49), and the variance (Eq 50) sufficiently define the distribution for NPDR predictors. As in the case of NPDR predictors for standard normal data, we show density curves for q = 1, 2, …, 5 for the NPDR predictor distribution for standard uniform data (S22 B Fig in S1 File).

The means (Eqs 46 and 49) and variances (Eqs.47 and 50) come from the exact distribution of pairwise distances with respect to a single attribute

3.3 Euclidean (L2)

Moment estimates for the Euclidean metric are obtained by substituting q = 2 into the asymptotic moment formulas for standard normal data (Eq 30) and standard uniform data (Eq 40). As in the case of the Manhattan metric in the previous sections, we initially proceed by deriving Euclidean distance moments in standard normal data.

3.3.1 Standard normal data

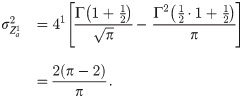

Substituting q = 2 into the asymptotic formula of the mean (Eq 30), we have the following for expected L2 distance between two independently sampled instances

In the case of L2 on standard normal data, we see that the mean of

Substituting q = 2 into the formula for the asymptotic variance of

Surprisingly, the asymptotic variance (Eq 52) is just 1. Regardless of data dimensions m and p, the variance of Euclidean distances on standard normal data tends to 1. Therefore, most instances are contained within a ball of radius 1 about the mean in high feature dimension p. This means that the Euclidean distance distribution on standard normal data is simply a horizontal shift to the right of the standard normal distribution.

For the case in which the number of attributes p is small, we have an improved estimate of the mean (Eq 9). The lower dimensional estimate of the mean is given by

For high dimensional data sets like gene expression [20, 21], which typically contain thousands of genes (or features), it is clear that the magnitude of p will be sufficient to use the standard asymptotic estimate (Eq 51) since

3.3.2 Standard uniform data

Substituting q = 2 into the asymptotic formula of the mean (Eq 40), we have the following for expected L2 distance between two independently sampled instances

As in the case of standard normal data, the expected value of

Substituting q = 2 into the formula for the asymptotic variance of

Once again, the variance of Euclidean distance surprisingly approaches a constant.

For the case in which the number of attributes p is small, we have an improved estimate of the mean (Eq 9). The lower dimensional estimate of the mean is given by

We summarize the moment estimates given in this section for standard Lq metrics (Eqs 55 and 56) organized by metric, data type, statistic (mean or variance), and asymptotic formula (Fig 3). In the next section, we extend these results for the standard Lq metric to derive asymptotics for the attribute range-normalized (max-min) Lq metric used frequently in Relief-based algorithms [1, 3] for scoring attributes. These derivations use extreme value theory to handle the maximum and minimum attributes for standard normal and standard uniform data.

4 Moments for max-min normalized Lq metric

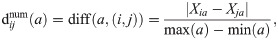

In this section, we derive formulas for asymptopic means and variances of a special Lq metric that is used in Relief-based feature selection methods. In this metric, the difference between pairs of subjects for a given attribute is normalized by the difference between the maximum and minimum of the attribute. For Relief-based methods [1, 3], the standard numeric difference metric (diff) is given by

This normalization leads to Relief attribute scores that are constrained to the interval [−1, 1]. The derivations in this section will invoke extreme value theory (EVT) because of the use of attribute extrema in the metric.

4.1 Distribution of max-min normalized Lq metric

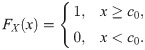

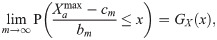

We observe empirically that Gaussian convergence applies to the max-min normalized Lq metric in the case of continuous data. We show this behavior for the special cases of standard uniform (S1 Fig in S1 File) and standard normal (S3 Fig in S1 File). In order to determine moments of asymptotic max-min normalized distance (Eq 57) distributions, we will first derive the asymptotic extreme value distributions of the attribute maximum and minimum. Although the exact distribution of the maximum or minimum requires an assumption about the data distribution, the Fisher-Tippett-Gnedenko Theorem is an important result that allows one to generally categorize the extreme value distribution for a collection of independent and identically distributed random variables into one of three distributional families. This theorem does not, however, tell us the exact distribution of the maximum that we require in order to determine asymptotic results for the max-min normalized distance (Eq 58). We mention this theorem simply to provide some background on convergence of extreme values. Before stating the theorem, we first need the following definition

Definition 4.1

A distribution

Theorem 4.1 (Fisher-Tippett-Gnedenko)

Let

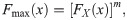

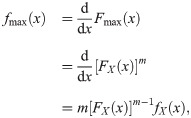

The three distribution families given in Theorem 4.1 are actually special cases of the Generalized Extreme Value Distribution. In the context of extreme values, Theorem 4.1 is analogous to the Central Limit Theorem for the distribution of sample mean. Although we will not explicitly invoke this theorem, it does tell us something very important about the asymptotic behavior of sample extremes under certain necessary conditions. For illustration of this general phenomenon of sample extremes, we derive the distribution of the maximum for standard normal data to show that the limiting distribution is in the Gumbel family, which is a known result. In the case of standard uniform data, we will derive the distribution of the maximum and minimum directly. Regardless of data type, the distribution of the sample maximum can be derived as follows

Using more precise notation, the distribution function of the sample maximum in standard normal data is

Differentiating the distribution function (Eq 60) gives us the following density function for the distribution of the maximum

The distribution of the sample minimum,

With more precise notation, we have the following expression for the distribution function of the minimum

Differentiating the distribution function (Eq 63) gives us the following density function for the distribution of sample minimum

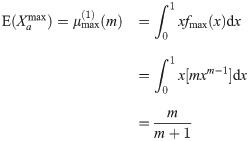

Given the densities of the distribution of sample maximum and minimum, we can easily compute the raw moments and variance. The first moment about the origin of the distribution of sample maximum is given by the following

The second raw moment of the distribution of sample maximum is derived similarly as follows

Using the first (Eq 65) and second (Eq 66) raw moments of the distribution of sample maximum, the variance is given by

Moving on to the distribution of sample minimum, the first raw moment is given by the following

Similarly, the second raw moment of the distribution of sample minimum is given by the following

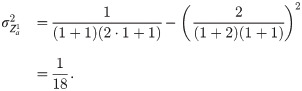

Using the first (Eq 68) and second (Eq 69) raw moments of the distribution of sample minimum, the variance is given by

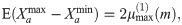

Using the expected attribute maximum (Eq 65) and minimum (Eq 68) for sample size m, the following expected attribute range results from linearity of the expectation operator

For a data distribution whose density is an even function, the expected attribute range (Eq 71) can be simplified to the following expression

For large samples (m ≫ 1) from an exponential type distribution that has infinite support and all moments, the covariance between the sample maximum and minimum is approximately zero [22]. In this case, the variance of the attribute range of a sample of size m is given by the following

Under the assumption of zero skewness, infinite support and even density function, sufficiently large sample size m, and distribution of an exponential type for all moments, the variance of attribute range (Eq 73) simplifies to the following

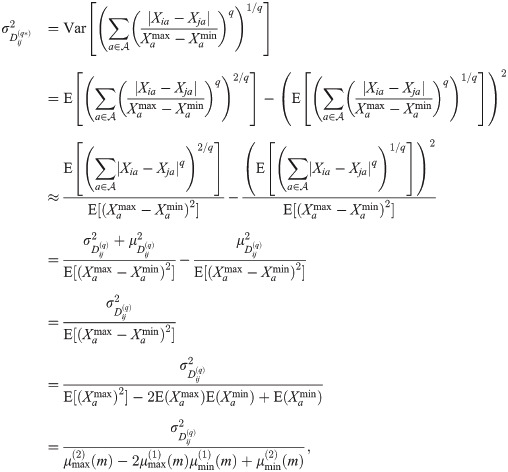

Let

The variance of the max-min normalized distance (Eq 58) distribution is given by the following

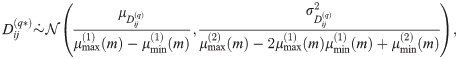

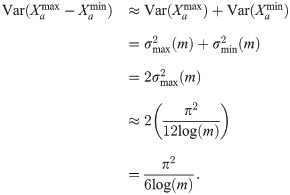

With the mean (Eq 75) and variance (Eq 76) of the max-min normalized distance (Eq 58), we have the following generalized estimate for the asymptotic distribution of the max-min normalized distance distribution

For data with zero skewness, infinite support, and even density function, the expected sample maximum is the additive inverse of the expected sample minimum. This allows us to express the expected max-min normalized pairwise distance (Eq 75) exclusively in terms of the expected sample maximum. This result is given by the following

A similar substitution gives us the following expression for the variance of the max-min normalized distance distribution

Therefore, the asymptotic distribution of the max-min normalized distance distribution (Eq 77) becomes

We have now derived asymptotic estimates of the moments of the max-min normalized Lq distance metric (Eq 58) for any continuous data distribution. In the next two sections, we examine the max-min normalized Lq distance on standard normal and standard uniform data. As in previous sections in which we analyzed the standard Lq metric (Eq 1), we will use the more general results for the max-min Lq metric to derive asymptotic estimates for normalized Manhattan (q = 1) and Euclidean (q = 2).

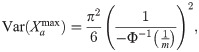

4.1.1 Standard normal data

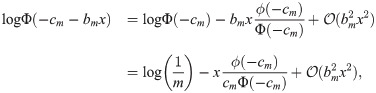

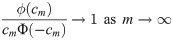

The standard normal distribution has zero skewness, even density function, infinite support, and all moments. This implies that the corresponding mean and variance of the distribution of sample range can be expressed exclusively in terms of the sample maximum. Given the nature of the density function of the sample maximum for sample size m, the integration required to determine the moments (Eqs 65 and 66) is not possible. These moments can either be approximated numerically or we can use extreme value theory to determine the form of the asymptotic distribution of the sample maximum. Using the latter method, we will show that the asymptotic distribution of the sample maximum for standard normal data is in the Gumbel family. Let

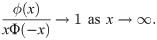

In order to simplify the right-hand side of this expansion (Eq 81), we will use the Mills Ratio Bounds [23] given by the following

The inequalities given above (Eq 82) show that

This further implies that

This gives us the following approximation of the right-hand side of the expansion (Eq 81) given previously

Using the approximation of expansion given previously (Eq 83), we now derive the limit distribution for the sample maximum in standard normal data as

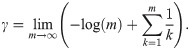

Perhaps a more convenient definition of the Euler-Mascheroni constant is simply

The median of the distribution of the maximum for standard normal data is given by

Finally, the variance of the asymptotic distribution of the sample maximum is given by

For typical sample sizes m in high-dimensional spaces, the variance estimate (Eq 87) exceeds the variance of the sample maximum significantly. Using the fact that

Therefore, the mean of the range of m iid standard normal random variables is given by

It is well known that the sample extremes from the standard normal distribution are approximately uncorrelated for large sample size m [22]. This implies that we can approximate the variance of the range of m iid standard normal random variables with the following result

For the purpose of approximating the mean and variance of the max-min normalized distance distribution, we observe empirically that the formula for the median of the distribution of the attribute maximum (Eq 86) yields more accurate results. More precisely, the approximation of the expected maximum (Eq 85) overestimates the sample maximum slightly. The formula for the median of the sample maximum (Eq 86) provides a more accurate estimate of this sample extreme. Therefore, the following estimate for the mean of the attribute range will be used instead

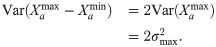

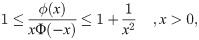

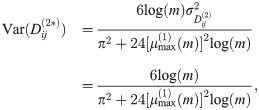

We have already determined the mean and variance (Eq 30) for the Lq metric (Eq 1) on standard normal data. Using the expected value of the sample maximum (Eq 91), the variance of the sample maximum (Eq 90), and the general formulas for the mean and variance of the max-min normalized distance distribution (Eq 80), this leads us to the following asymptotic estimate for the distribution of the max-min normalized distances for standard normal data

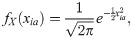

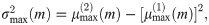

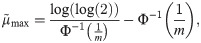

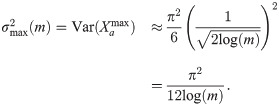

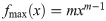

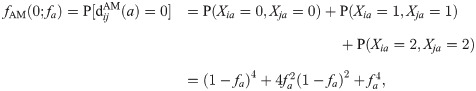

Asymptotic estimates of means and variances for the max-min normalized L1 and L2 distance distributions commonly used in Relief-based algorithms.

Estimates for both standard normal (

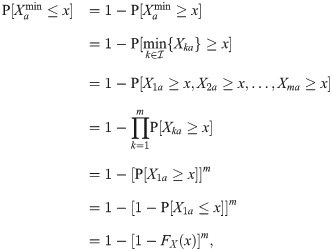

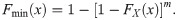

4.1.2 Standard uniform data

Standard uniform data does not have an even density function. Due to the simplicity of the density function, however, we can derive the distribution of the maximum and minimum of a sample of size m explicitly. Using the general forms of the distribution functions of the maximum (Eq 60) and minimum (Eq 63), we have the following distribution functions for standard uniform data

Using the general forms of the density functions of the maximum (Eq 61) and minimum (Eq 64), we have the following density functions for standard uniform data

Then the expected maximum and minimum are computed through straightforward integration as follows

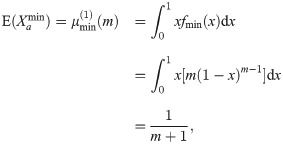

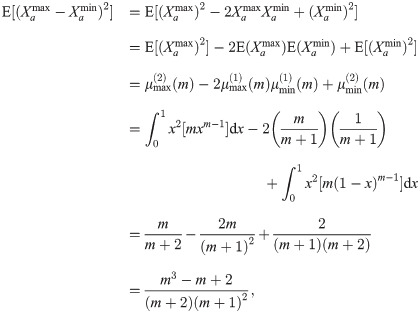

We can compute the second moment about the origin of the sample range as follows

Using the general asymptotic distribution of max-min normalized distances for any data type (Eq 77) and the mean and variance (Eq 40) of the standard Lq distance metric (Eq 1), we have the following asymptotic estimate for the max-min normalized distance distribution for standard uniform data

4.2 Range-Normalized Manhattan (q = 1)

Using the general asymptotic results for mean and variance of max-min normalized distances in standard normal and standard uniform data (Eqs 92 and 100) for any value of

4.2.1 Standard normal data

Substituting q = 1 into the asymptotic formula for the expected max-min normalized distance (Eq 92), we derive the expected normalized Manhattan distance in standard normal data as follows

Similarly, the variance of

4.2.2 Standard uniform data

Substituting q = 1 into the asymptotic formula for the expected max-min pairwise distance (Eq 100), we derive the expected normalized Manhattan distance in standard uniform data as

Similarly, the variance of

4.3 Range-Normalized Euclidean (q = 2)

Analogous to the previous section, we use the asymptotic moment estimates for the max-min normalized metric (D(q*), Eq 58) for standard normal (Eq 92) and standard uniform (Eq 100) data but specific to a range-normalized Euclidean metric (q = 2).

4.3.1 Standard normal data

Substituting q = 2 into the asymptotic formula for the expected max-min normalized pairwise distance (Eq 92), we derive the expected normalized Euclidean distance in standard normal data as

Similarly, the variance of

4.3.2 Standard uniform data

Substituting q = 2 into the asymptotic formula for the expected max-min normalized pairwise distance (Eq 100), we derive the expected normalized Euclidean distance in standard uniform data as

Similarly, the variance of

We summarize moment estimates in figures (Figs 2–4) that contain all of our asymptotic results for both standard and max-min normalized Lq metrics in each data type we have considered. This includes our most general results for any combination of sample size m, number of attributes p, type of metric Lq, and data type (Fig 2). From these more general derivations, we show the results of the standard L1 and L2 metrics for any combination of sample size m, number of attributes p, and data type (Fig 3). Our last set of summarized results show asymptotics for the max-min normalized L1 and L2 metrics for any combination of sample size m, number of attributes p, and data type (Fig 4). For both standard and max-min normalized L2 metrics (Figs 3 and 4), the low-dimensional improved estimates of sample means (Eqs 53 and 56) are used because they perform well at both low and high attribute dimension p.

In the next section, we make a transition into discrete GWAS data. We will discuss some commonly known metrics and then a relatively new metric, which will lead us into novel asymptotic results for this data type.

5 GWAS distance distributions

Genome-wide association study (GWAS) data consists of single nucleotide polymorphisms (SNPs), which are inherited nucleotide changes at loci along the DNA. Each SNP has two possible nucleotide alleles: the minor allele, which is the less frequent nucleotide in the population, and the common allele. The attribute/feature corresponding to each SNP is typically represented as a three-state genotype: homozygous for the minor allele, heterozygous or homozygous for the common allele. Feature selection in GWAS is typically concerned with finding main effect or interacting SNPs that are associated with disease susceptibility [25]. The similarity or distance between individuals in the SNP space is routinely calculated in GWAS for principal component analysis but is also calculated for nearest-neighbor feature selection.

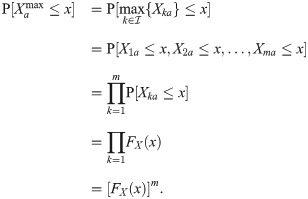

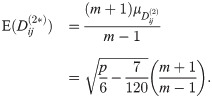

For our asymptotic analysis formalism, consider a GWAS data set with the following encoding based on minor allele frequency

For random GWAS data sets, we can think Xia as the number of successes in two Bernoulli trials. That is,

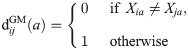

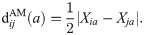

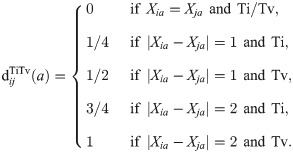

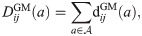

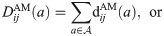

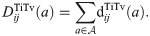

Two commonly known types of distance metrics for GWAS data are the Genotype Mismatch (GM) and Allele Mismatch (AM) metrics. The GM and AM metrics are defined by

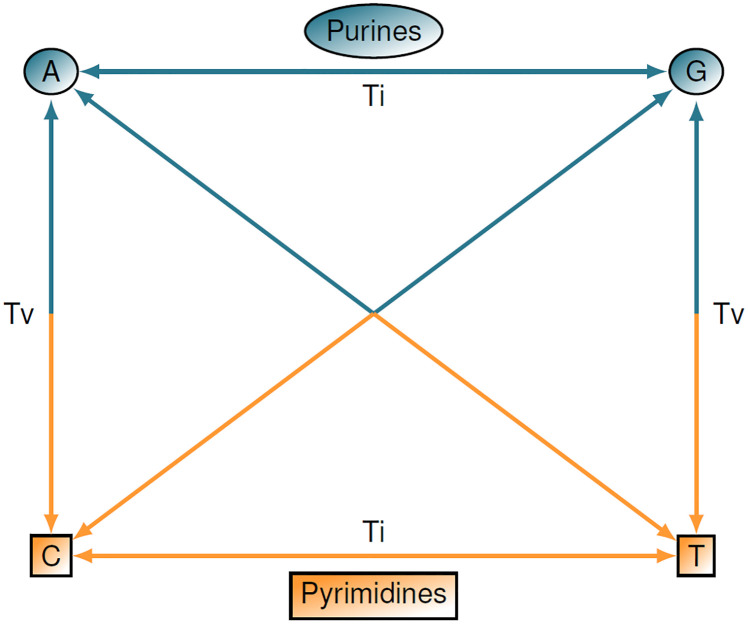

More informative metrics may include differences at the nucleotide level for each allele by considering differences in the rates of transition and transversion mutations (Fig 5). One such discrete metric that accounts for transitions (Ti) and transversions (Tv) was introduced in [7] and can be written as

Purines (A and G) and pyrimidines (C and T) are shown.

Transitions occur when a mutation involves purine-to-purine or pyrimidine-to-pyrimidine insertion. Transversions occur when a purine-to-pyrimidine or pyrimidine-to-purine insertion happens, which is a more extreme case. There are visibly more possibilities for transversions to occur than there are transitions, but there are about twice as many transitions in real data.

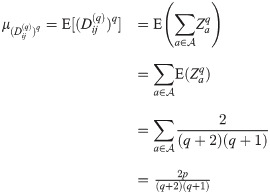

With these GWAS distance metrics, we then compute the pairwise distance between two instances

Assuming that all data entries Xia are independent and identically distributed, we have already shown that the distribution of pairwise distances is asymptotically normal regardless of data distribution and value of q. Therefore, it follows that the distance distributions induced by each of the GWAS metrics (Eqs 110–112) are asymptotically normal. We illustrate Gaussian convergence in the case of GM (S4 Fig in S1 File), AM (S5 Fig in S1 File), and TiTv (S6 Fig in S1 File). With this Gaussian limiting behavior, we will proceed by deriving the mean and variance for each distance distribution induced by these three GWAS metrics.

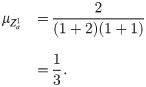

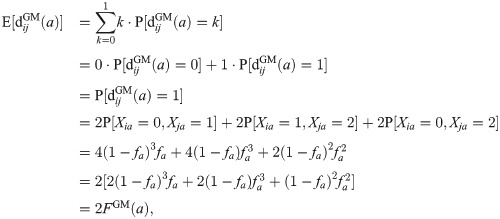

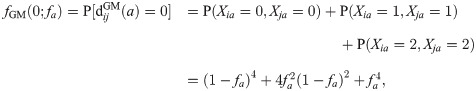

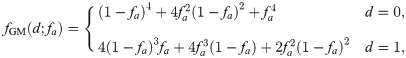

5.1 GM distance distribution

The simplest distance metric in nearest-neighbor feature selection in GWAS data is the genotype-mismatch (GM) distance metric (Eq 113). The GM attribute diff (Eq 110) indicates only whether two genotypes are the same or not. There are many ways two genotypes could differ, but this metric does not record this information. We will now derive the moments for the GM distance (Eq 113), which are sufficient for defining its corresponding asymptotic distribution.

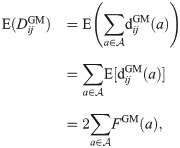

The expected value of the GM attribute diff metric (Eq 110) is given by the following

Then the expected pairwise GM distance between instances

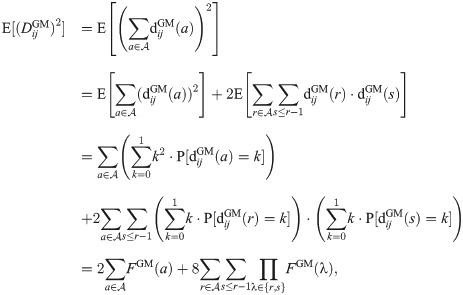

The second moment about the origin for the GM distance is computed as follows

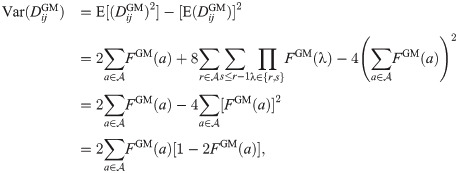

Using the first (Eq 117) and second (Eq 118) raw moments of the GM distance, the variance is given by

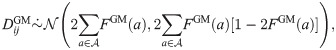

With the mean and variance estimates (Eqs 117 and 119), the asymptotic GM distance distribution is given by the following

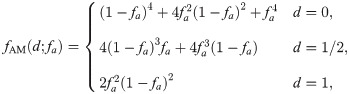

5.2 AM distance distribution

As we have mentioned previously, the AM attribute diff metric (Eq 111) is slightly more dynamic than the GM metric because the AM metric accounts for differences between the alleles of two genotypes. In this section, we derive moments of the AM distance metric (Eq 114) that adequately define its corresponding asymptotic distribution.

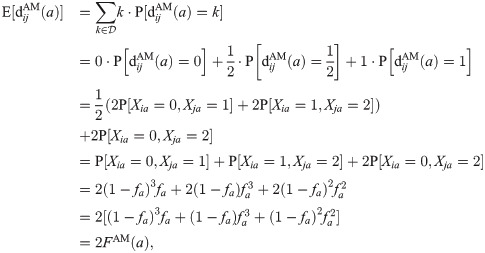

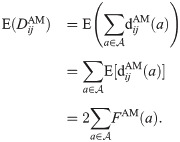

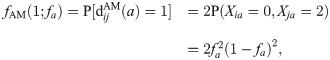

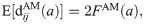

The expected value of the AM attribute diff metric (Eq 111) is given by the following

Using the expected AM attribute diff (Eq 121), the expected pairwise AM distance (Eq 114) between instances

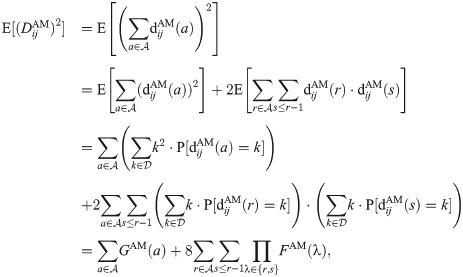

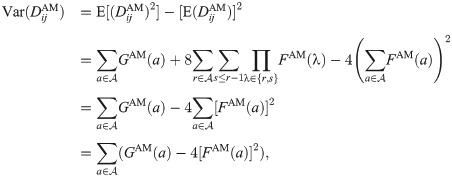

The second moment about the origin for the AM distance is computed as follows

Using the first (Eq 122) and second (Eq 123) raw moments of the asymptotic AM distance distribution, the variance is given by

With the mean (Eq 122) and variance (Eq 124) estimates of AM distances, the asymptotic AM distance distribution is given by the following

This concludes our analysis of the AM metric in GWAS data when the independence assumption holds for minor allele probabilities fa and binomial samples

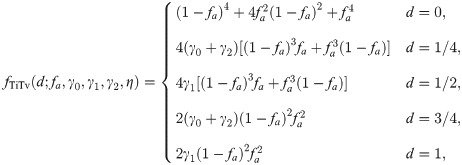

5.3 TiTv distance distribution

The TiTv metric allows for one to account for both genotype mismatch, allele mismatch, transition, and transversion. However, this added dimension of information requires knowledge of the nucleotide makeup at a particular locus. A sufficient condition to compute the TiTv metric between instances

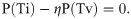

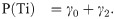

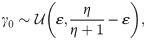

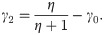

This additional encoding is always given in a particular GWAS data set, which leads us to consider the probabilities of PuPu, PuPy, and PyPy. These will be necessary to determine asymptotics for the TiTv distance metric. Let γ0, γ1, and γ2 denote the probabilities of PuPu, PuPy, and PyPy, respectively, for the p loci of data matrix X. In real data, there are approximately twice as many transitions as there are transversions. That is, the probability of a transition P(Ti) is approximately twice the probability of transversion P(Tv). It is likely that any particular data set will not satisfy this criterion exactly. In this general case, we have P(Ti) being equal to some multiple η times P(Tv). In order to enforce this general constraint in simulated data, we define the following set of equalities

The sum-to-one constraint (Eq 126) is natural in this context because there are only three possible genotype encodings at a particular locus, which are PuPu, PuPy, and PyPy. Solving the Ti/Tv ratio constraint (Eq 127) for η gives

Using this PuPu, PuPy, and PyPy encoding, the probability of a transversion occurring at any fixed locus a is given by the following

Using the sum-to-one constraint (Eqs 126) and the probability of transversion (Eq 127), the probability of a transition occuring at locus a is computed as follows

Also using the sum-to-one constraint (Eq 126) and the Ti/Tv ratio constraint (Eq 127), it is clear that we have

Then it immediately follows that we have

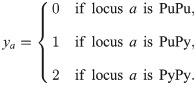

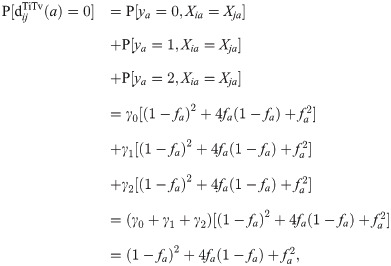

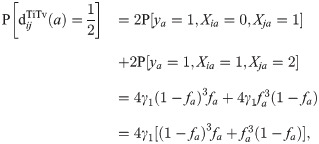

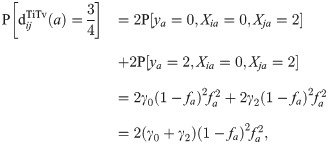

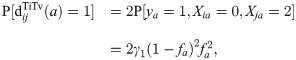

However, we can derive the mean and variance of the distance distribution induced by the TiTv metric without specifying any relationship between γ0, γ1, and γ2. We proceed by computing

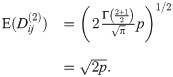

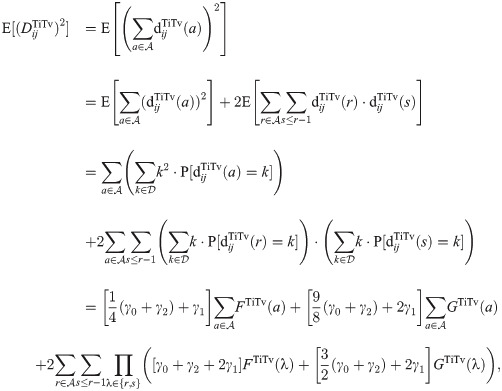

We derive

We derive

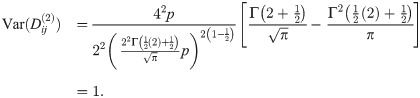

We derive

We derive

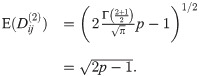

We derive

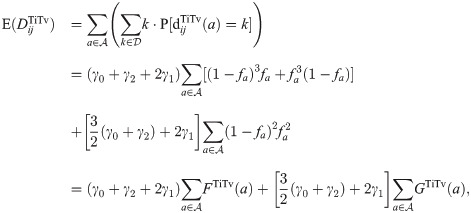

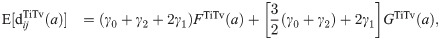

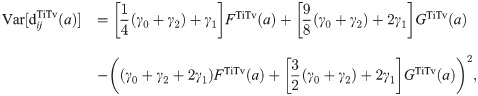

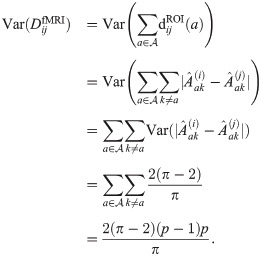

Using the TiTv diff probabilities (Eqs 133–137), we compute the expected TiTv distance between instances

The second moment about the origin for the TiTv distance is computed as follows

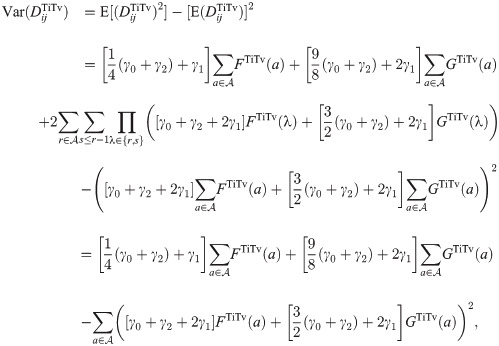

Using the first (Eq 138) and second (Eq 139) raw moments of the TiTv distance, the variance is given by

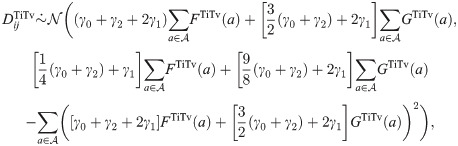

With the mean (Eq 138) and variance (Eq 140) estimates, the asymptotic TiTv distance distribution is given by the following

Given upper and lower bounds l and u, respectively, of the success probability sampling interval, the average success probability (or average MAF) is computed as follows

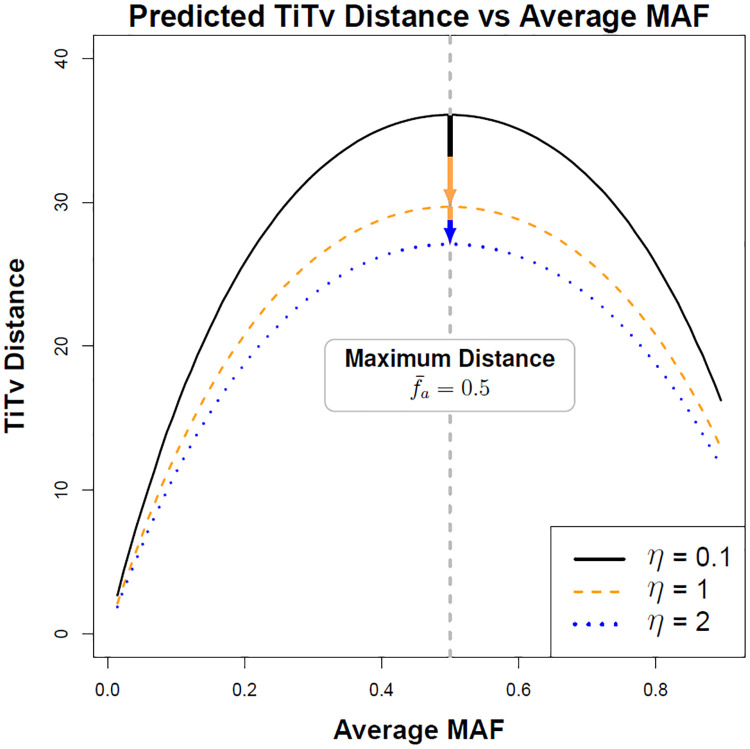

The maximum TiTv distance occurs at

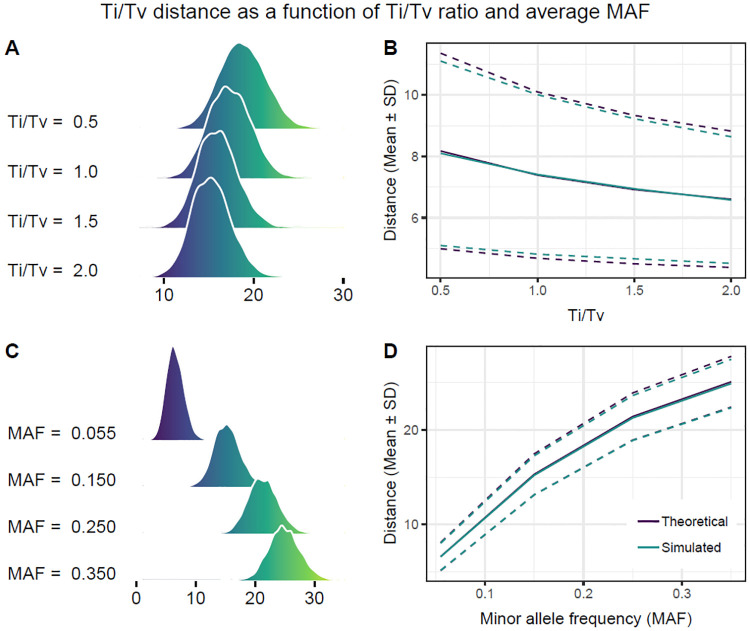

Predicted average TiTv distance as a function of average minor allele frequency

Success probabilities fa are drawn from a sliding window interval from 0.01 to 0.9 in increments of about 0.009 and m = p = 100. For η = 0.1, where η is the Ti/Tv ratio given by Eq 126, Tv is ten times more likely than Ti and results in larger distance. Increasing to η = 1, Tv and Ti are equally likely and the distance is lower. In line with real data for η = 2, Tv is half as likely as Ti so the distances are relatively small.

We also compared theoretical and sample moments as a function of η = Ti/Tv and

Density curves and moments of TiTv distance as a function of average MAF

We fix m = p = 100 for all simulated TiTv distances. (A) For fixed

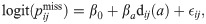

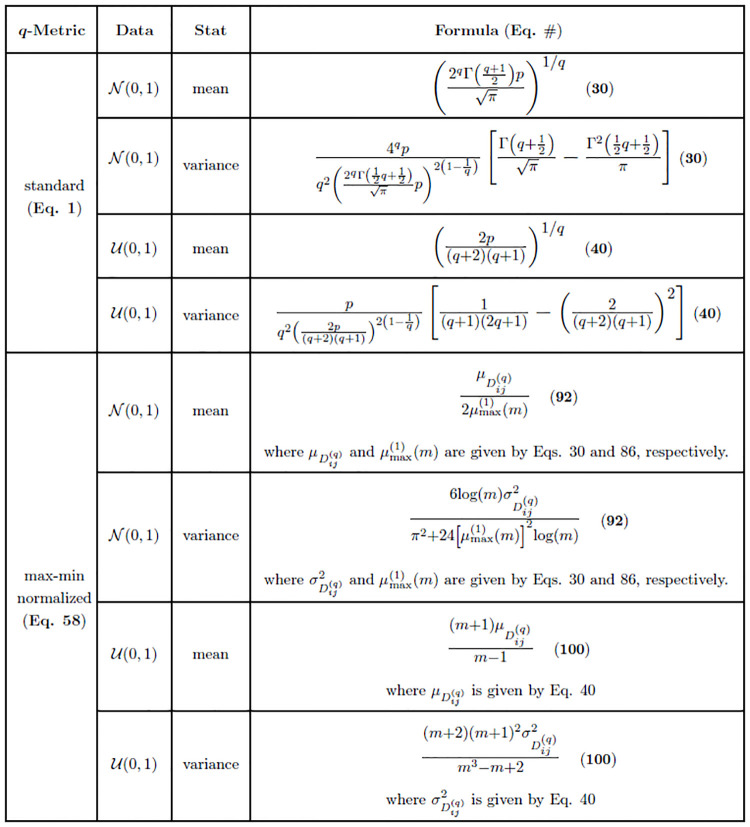

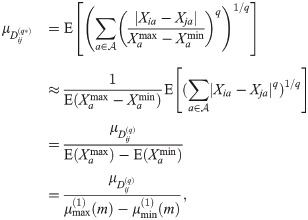

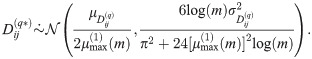

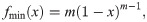

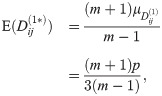

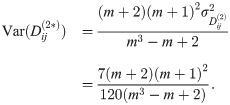

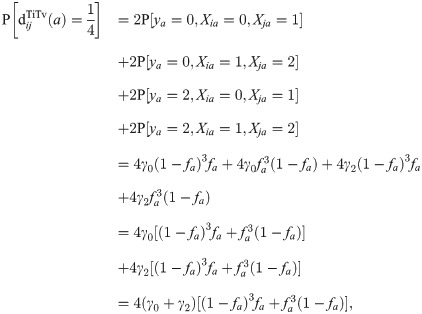

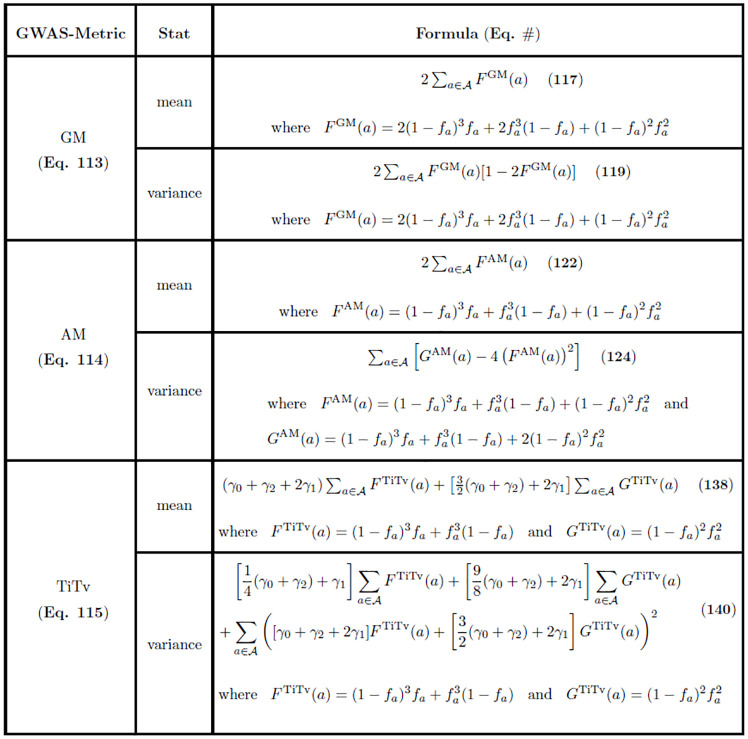

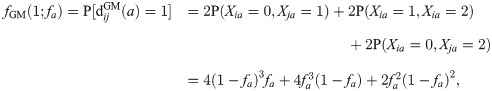

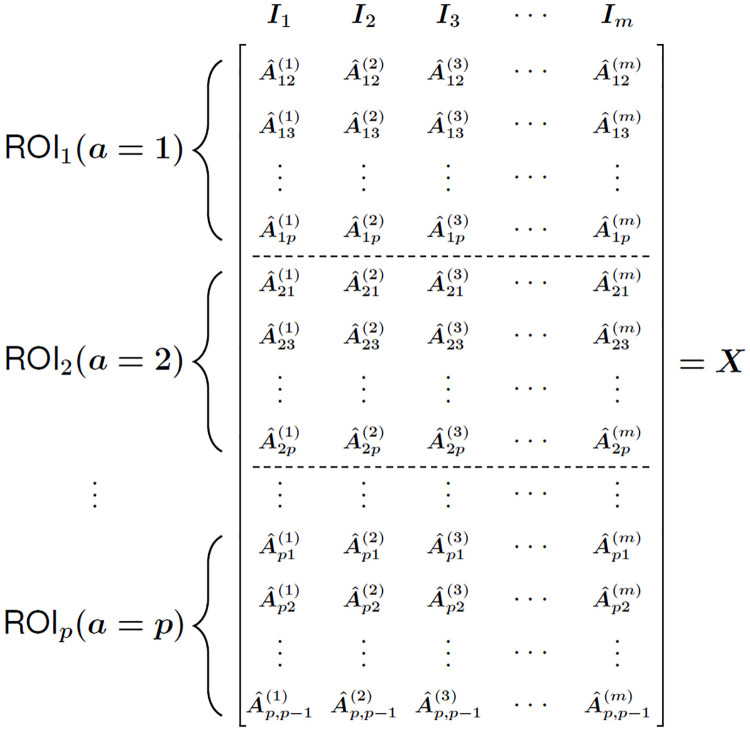

We summarize our moment estimates for GWAS distance metrics (Eqs 113–115) (Fig 8) organized by metric, statistic (mean or variance), and asymptotic formula. Next we consider the important case of distributions of GWAS distances projected onto a single attribute (Eqs 110–112).

Asymptotic estimates of means and variances of genotype mismatch (GM) (Eq 113), allele mismatch (AM) (Eq 114), and transition-transversion (TiTv) (Eq 115) distance metrics in GWAS data (p ≫ 1).

GWAS data

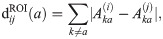

5.4 Distribution of one-dimensional projection of GWAS distance onto a SNP

We previously derived the exact distribution of the one-dimensional projected distance onto an attribute in continuous data (Section 3.2.3), which is used as the predictor in NPDR to calculate relative attribute importance in the form of standardized beta coefficients. GWAS data and the metrics we have considered are discrete. Therefore, we derive the density function for each diff metric (Eqs 110–112), which also serves as the probability distribution for each metric, respectively.

The support of the GM metric (Eq 110) is simply {0, 1}, so we derive the probability,

Similarly, the probability that the GM diff is equal to 1 is derived as follows

This leads us to the probability distribution of the GM diff metric, which is the distribution of the one-dimensional GM distance projected onto a single SNP. This distribution is given by

The mean and variance of this GM diff distribution can easily be derived using this newly determined density function (Eq 145). The average GM diff is given by the following

The variance of the GM diff metric is given by

The support of the AM metric (Eq 111) is {0, 1/2, 1}. Beginning with the probability of the AM diff being equal to 0, we have the following probability

The probability of the AM diff metric being equal to 1/2 is computed similarly as follows

Finally, the probability of the AM diff metric being equal to 1 is given by the following

As in the case of the GM diff metric, we now have the probability distribution of the AM diff metric. This also serves as the distribution of the one-dimensional AM distance projected onto a single SNP, and is given by the following

The mean and variance of this AM diff distribution is derived using the corresponding density function (Eq 151). The average AM diff is given by

The variance of the AM diff metric is given by

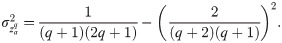

For the TiTv diff metric (Eq 112), the support is {0, 1/4, 1/2, 3/4, 1}. We have already derived the probability that the TiTv diff assumes each of the values of its support (Eqs 133–137). Therefore, we have the following distribution of the TiTv diff metric

The mean and variance of this TiTv diff distribution is derived using the corresponding density function (Eq 154). The average TiTv diff is given by

The variance of the TiTv diff metric is given by

These novel distribution results for the projection of pairwise GWAS distances onto a single genetic variant, as well as results for the full space of p variants, can inform NPDR and other nearest-neighbor distance-based feature selection algorithms. We show density curves for GM (S23 Fig in S1 File), AM (S24 Fig in S1 File), and TiTv (S25 Fig in S1 File) for each possible support value. Next we introduce our new diff metric and distribution results for time-series derived correlation-based data, with a particular application to resting-state fMRI.

6 Time series correlation-based distance distribution

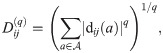

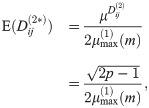

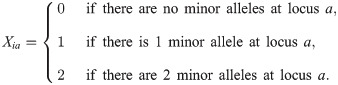

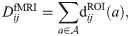

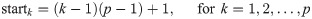

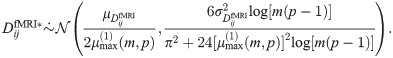

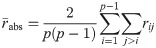

In this section, we introduce a new metric and projected distance for correlation data, and we derive its asymptotic properties. For this type of data, each of the m subjects has a correlation matrix A(p×p) between pairs of attributes from the set

In rs-fMRI feature selection applications, a common approach is to use the correlation between ROIs as the attribute. However, our goal is to allow the individual ROIs to be the attributes of interest (a) even though the data is correlation. Thus, we propose the following attribute projection (diff)

In order for comparisons between different correlations to be possible, we first perform a Fisher r-to-z transform on the correlations. This transformation makes the data approximately normally distributed with stabilized variance across different samples. After this transformation, we then load all of the transformed correlations into a p(p − 1) × m matrix X (Fig 9). Each column of X represents a single instance (or subject) in rs-fMRI data. Contrary to a typical p × m data set, each row does not represent a single attribute. Rather, each attribute (or ROI) is represented by p − 1 consecutive rows. The first p − 1 rows represent ROI1, the next p − 1 rows represent ROI2, and so on until the last p − 1 rows that represent ROIp. For a given column of X, we exclude pairwise correlations between an ROI and itself. Therefore, the matrix does not contain

Organization based on brain regions of interest (ROIs) of resting-state fMRI correlation dataset consisting of transformed correlation matrices for m subjects.

Each column corresponds to an instance (or subject) Ij and each subset of rows corresponds to the correlations for an ROI attribute (p sets). The notation

These indices allow us to extract just the rows necessary to compute the rs-fMRI diff for a fixed ROI.

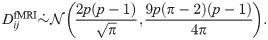

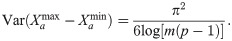

We further transform the data matrix X by standardizing so that each of the m columns has zero mean and unit variance. Therefore, the data in matrix X are approximately standard normal. Since we assume independent samples, the standard rs-fMRI distance is asymptotically normal. Gaussian limiting behavior is illustrated in the form of histograms as shown previously (S7 Fig in S1 File). Recall that the mean (Eq 41) and variance (Eq 42) of the Manhattan (L1) distance distribution for standard normal data are

The expected pairwise rs-fMRI distance (Eq 159) grows on the order of p(p − 1), which is the total number of transformed pairwise correlations in each column of X (Fig 9). This is similar to the case of a typical m × p data matrix in which the data is standard normal and Manhattan distances are computed between instances.

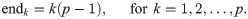

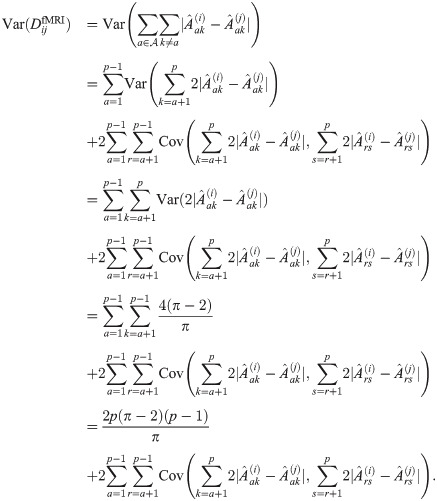

We first derive the variance of the rs-fMRI distance by making an independence assumption with respect to the magnitude differences

Similar to the case of an m × p data matrix containing standard normal data, we have an rs-fMRI distance variance that grows on the order of p(p − 1), which is the total number of pairwise associations in a column of data matrix X (Fig 9). Therefore, the expected rs-fMRI distance (Eq 159) and the variance of the rs-fMRI distance (Eq 160) increase on the same order.

The independence assumption used to derive the variance of our rs-fMRI distance metric (Eq 160) is not satisfied because a single value of the diff (Eq 157) includes the same fixed ROI, a, for each term in the sum for all k ≠ a. Therefore, the linear application of the variance operator we have previously employed does not account for the additional cross-covariance that exists. However, we have seen empirically that the theoretical variance of the distance we computed for the rs-fMRI distance metric (Eq 160) still reasonably approximates the sample variance, there is a slight discrepancy between our theoretical rs-fMRI distance metric variance (Eq 160) and the sample variance. More precisely, the formula we have given for the variance (Eq 160) consistently underestimates the sample variance of the rs-fMRI distance. To adjust for this discrepancy, we determine a corrected formula by assuming that there is dependence between the terms of the rs-fMRI diff and estimate the cross-covariance between rs-fMRI diffs of different pairs of ROIs.

We begin the derivation of our corrected formula by writing the variance as a two-part sum, where the first term in the sum involves the variance of the magnitude difference

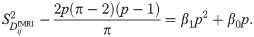

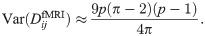

In order to have a formula in terms of the number of ROIs p only, we estimate the double sum on the right-hand side of the equation of rs-fMRI distance variance (Eq 161). Through simulation, it can be seen that the difference between the actual sample variance

The coefficient estimates found through least squares fitting are β1 = −β0 ≈ 0.08. These estimates allow us to arrive at a functional form for the double sum in the right-hand side of the rs-fMRI distance variance equation (Eq 161) that is proportional to

Therefore, the variance of the rs-fMRI distances is approximated well by the following

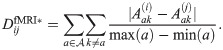

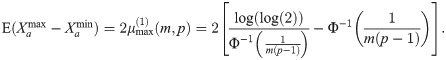

With the mean (Eq 159) and variance (Eq 164) estimates, we have the following asymptotic distribution for rs-fMRI distances

6.1 Max-min normalized time series correlation-based distance distribution

Previously (Section 4) we determined the asymptotic distribution of the sample maximum of size m from a standard normal distribution. We can naturally extend these results to our transformed rs-fMRI data because X (Fig 9) is approximately standard normal. Furthermore, we have previously mentioned that the max-min normalized Lq metric yields approximately normal distances with the iid assumption. We show a similar result for max-min normalized rs-fMRI distances (S8 Fig in S1 File). We proceed with the definition of the max-min normalized rs-fMRI pairwise distance.

Consider the max-min normalized rs-fMRI distance given by the following equation

Assuming that the data X has been r-to-z transformed and standardized, we can easily compute the expected attribute range and variance of the attribute range. The expected maximum of a given attribute in data matrix X is estimated by the following

The variance can be esimated with the following

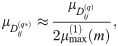

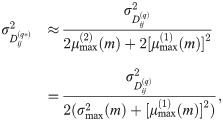

Let

6.2 One-dimensional projection of rs-fMRI distance onto a single ROI

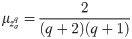

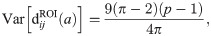

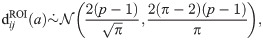

Just as in previous sections (Sections. 3.2.3 and 5.4), we now derive the distribution of our rs-fMRI diff metric (Eq 157). Unlike what we have seen in previous sections, we do not derive the exact distribution for this diff metric. We have determined empirically that the rs-fMRI diff is approximately normal. Although the rs-fMRI diff is a sum of p − 1 magnitude differences, the Classical Central Limit Theorem does not apply because of the dependencies that exist between the terms of the sum. Examination of histograms and quantile-quantile plots of simulated values of the rs-fMRI diff easily indicate that the normality assumption is safe. Therefore, we derive the mean and variance of the approximately normal distribution of the rs-fMRI diff. As we have seen previously, this normality assumption is reasonable even for small values of p.

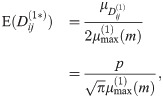

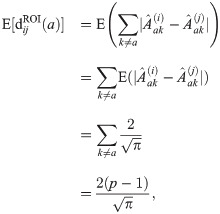

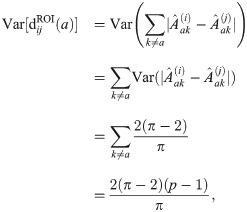

The mean of the rs-fMRI diff is derived by fixing a single ROI a and considering all pairwise associations with other ROIs

Considering the variance of the rs-fMRI diff metric, we have two estimates. The first estimate uses the variance operator in a linear fashion, while the second will simply be a direct implication of the corrected formula of the variance of rs-fMRI pairwise distances (Eq 164). Our first estimate is derived as follows

Using the corrected rs-fMRI distance variance formula (Eq 164), our second estimate of the rs-fMRI diff variance is given directly by the following

Empirically, the first estimate (Eq 171) of the variance of our rs-fMRI diff is closer to the sample variance than the second estimate (Eq 172). This is due to fact that we are considering only a fixed ROI

6.3 Normalized Manhattan (q = 1) for rs-fMRI

Substituting the non-normalized mean (Eq 159) into the equation for the mean of the max-min normalized rs-fMRI metric (Eq 169), we have the following

Similarly, the variance of

We summarize the moment estimates for the rs-fMRI metrics for correlation-based data derived from time series (Fig 10). We organize this summary by standard and attribute range-normalized rs-fMRI distance metric, statistic (mean or variance), and asymptotic formula.

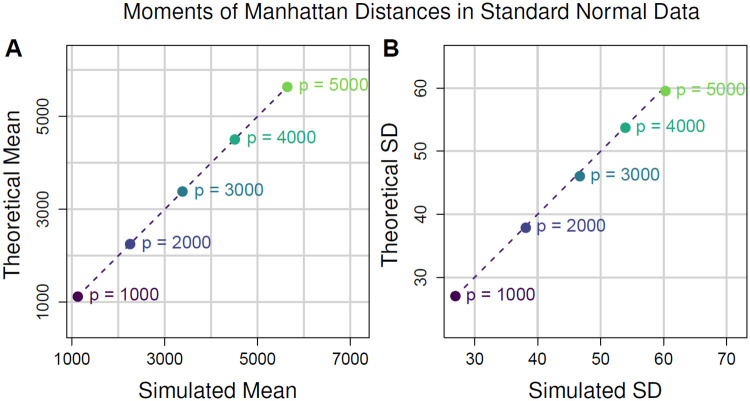

7 Comparison of theoretical and sample moments

We compare our analytical asymptotic estimates of sample moments for distributions of pairwise distances in high attribute dimension by generating random data for various dimensions m and p (Fig 11). We fix m = 100 samples and compute Manhattan (Eq 1) distance matrices from standard normal data for p = 1000, 2000, 3000, 4000, and 5000 attributes. For each value of p, we generate 20 random datasets and compute the mean and standard deviation of pairwise distances. We then average these 20 simulated means and standard deviations. For comparison, we compute the theoretical moments (Eqs 41 and 42) for each value of p and fixed m = 100 from the theoretical formulas. Scatter plots of theoretical versus simulated mean (Fig 11A) and theoretical versus simulated standard deviation (Fig 11B) indicate that our theoretical asymptotic formulas for sample moments are reliable for both large and relatively small numbers of attributes. For other combinations of data type, distance metric, sample size m, and number of attributes p, we find similar agreement between theoretical formulas and simulated moments (S9-S21 Figs in S1 File).

Comparison of theoretical and sample moments of Manhattan (Eq 1) distances in standard normal data.

(A) Scatter plot of theoretical vs simulated mean Manhattan distance (Eq 41). Each point represents a different number of attributes p. For each value of p we fixed m = 100 and generated 20 distance matrices from standard normal data and computed the average simulated pairwise distance from the 20 iterations. The corresponding theoretical mean was then computed for each value of p for comparison. The dashed line represents the identity (or y = x) line for reference. (B) Scatter plot of theoretical vs simulated standard deviation of Manhattan (Eq 1) distance (Eq 42). These standard deviations come from the same random distance matrices for which mean distance was computed for A. Both theoretical mean and standard deviation approximate the simulated moments quite well.

8 Effects of correlation on distances

All of the derivations presented in previous sections were for the cases where there is no correlation between instances or between attributes. We assumed that any pair (Xia, Xja) of data points for instances i and j and fixed attribute a were independent and identically distributed. This was assumed in order to determine asymptotic estimates in null data. That is, data with no main effects, interaction effects, or pairwise correlations between attributes. Within this simplified context, our asymptotic formulas for distributional moments are reliable. However, in real data are numerous statistical effects that impact distance distributional properties. That being said, we have shown that for Manhattan distances generated on real gene expression microarray data (S26-S124 Figs in S1 File) and distances generated with our new metric (Eq 158) on real rs-fMRI data (S125-S126 Figs in S1 File) that the normality assumption is approximately satisfied in many cases. In simulated data, we find that deviation from normality is caused primarily by large magnitude pairwise correlation between attributes. Pairwise attribute correlation can be the result of main effects, where attributes have different within-group means. On the other hand, there could be an underlying interaction network in which there are strong associations between attributes. If attributes are differentially correlated between phenotype groups, then interactions exist that change the distance distribution. In the following few sections, we consider particular cases of the Lq metric for continuous and discrete data under the effects of pairwise attribute correlation.

8.1 Continuous data

Without loss of generality, suppose we have X(m×p) where

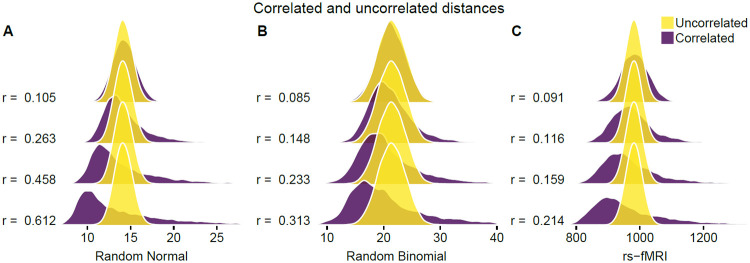

Distance densities from uncorrelated vs correlated bioinformatics data.

(A) Euclidean distance densities for random normal data with and without correlation. Correlated data was created by multiplying random normal data by upper-triangular Cholesky factor from randomly generated correlation matrix. We created correlated data for average absolute pairwise correlation (Eq 176)

In order to introduce a controlled level of correlation between attributes, we created correlation matrices based on a random graph with specified connection probability, where attributes correspond to the vertices in each graph. We assigned high correlations to connected attributes from the random graph and low correlations to all non-connections. Using the upper-triangular Cholesky factor U for uncorrelated data matrix X, we computed the following product to create correlated data matrix Xcorr

The new data matrix Xcorr has approximately the same correlation structure as the randomly generated correlation matrix created from a random graph.

8.2 GWAS data

Analogous to the previous section, we explore the effects of pairwise attribute correlation in the context of GWAS data. Without loss of generality, we let m = p = 100 and consider only the TiTv metric (Eq 115). To create correlated GWAS data, we first generated standard normal data with random correlation structure, just as in the previous section. We then applied the standard normal cumulative distribution function (CDF) to this correlated data in order transform the correlated standard normal variates into uniform data with preserved correlation structure. We then subsequently applied the inverse binomial CDF to the correlated uniform data with random success probabilities

8.3 Time-series derived correlation-based datasets

For our correlation data-based metric (Eq 158), we consider additional effects of correlation between features. Without loss of generality, we let m = 100 and p = 30. We show an illustration of the effects of correlated features in this context (Fig 12C). Based on the density estimates, it appears that correlation between features introduces positive skewness at low values of

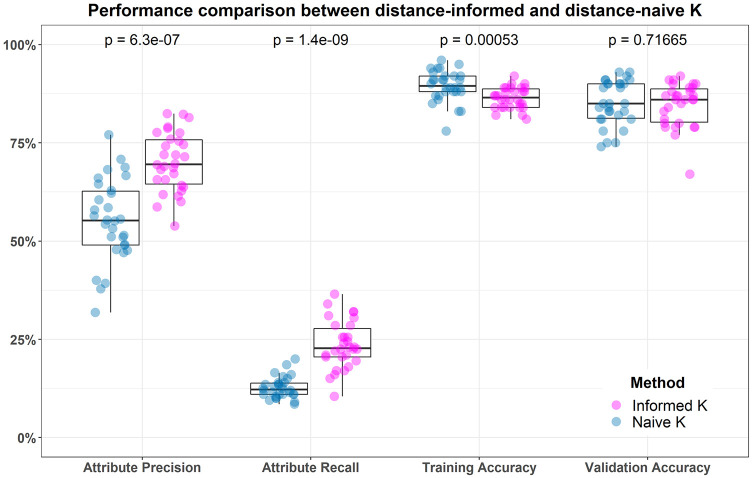

9 Feature selection with distance distribution-informed nearest neighbors

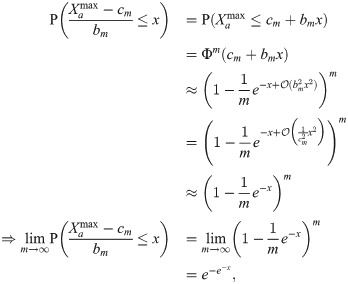

Our derivation of asymptotic moments of distance distributions has been motivated by the need to improve performance of feature selection in nearest-neighbor algorithms. The choice of k or a neighborhood radius can have a large impact on selected features [5]. Historically, the general rule-of-thumb for fixed k was k = 10. However, this rule-of-thumb does not adapt to properties of the data, such as sample size m or number of features p. As we have shown for random data with uncorrelated attributes, mean distance or standard deviation of sample distances increases in direct proportion to some function of p. As a result, the rule-of-thumb can be out of step with the average distance between neighbors in a real data set. Parameterizing the neighborhood sizes by the expected moments of the distance distribution, under the assumption of independent data and uncorrelated features, can improve upon naive neighborhood approaches.

The adaptive radius method MultiSURF outperformed fixed k methods for detecting interaction effects in simulated data [1]. In another simulation study, it was shown that MultiSURF performed relatively well in detecting both interaction effects and main effects [5]. The MultiSURF approach gives each target instance i its own tailored neighborhood radius Ri (Eq 178) as a function of the average pairwise distance to the target instance i (

The study in Ref. [5] showed that fixed k = ⌊m/6⌋ = 16 empirically gave approximately the same neighborhood size as MultiSURF on average, but the fixed k = ⌊m/6⌋ = 16 method modestly improved the detection of main effects and performed approximately the same for interaction effects in 100 replicated simulations. Furthermore, in Ref. [4], the approximation of k = ⌊m/6⌋ to the average MultiSURF radius (Eq 178) neighborhood order, although very accurate for m = 100, was more precisely shown to be

We compare the performance of Relief nearest-neighbor feature selection with data-informed kα = 1/2 and the rule-of-thumb k = 10 (Fig 13). We use consensus features nested cross-validation (cnCV) to perform feature and model selection while avoiding overfitting [28]. The cnCV approach has been shown to select fewer false positive features on average across all simulation replicates than standard nested cross-validation while simultaneously maintaining a low false negative rate for functional features. Our application of cnCV (cncv https://github.com/insilico/cncv) uses the Relief nearest-neighbor method for feature selection and random forest for classification, which was parameterized by ntree = 1000 trees and mtry = pf/3 randomly selected features at each node split. The value pf is the total features in a given training fold.

Simulation comparison between rule-of-thumb naive k = 10 and distance-distribution informed kα = 1/2.

Precision and recall for the functional features are significantly improved using informed k versus naive k = 10. The training and validation classification accuracy are similar for the two values of k with slightly less overfitting for informed-k.

For the comparison, we simulate data with an underlying interaction network, where interacting features have no main effects [29], and then we add main effect features. Each simulated data set has p = 1000 attributes, where 100 are functional, and m = 100 instances (50 cases and 50 controls). For statistical comparison, we create 30 replicate simulations, and each simulated data set is split into a training and a validation set for indepedent assessment.

The distance distribution informed-kα = 1/2 shows a statistically significantly advantage over naive k = 10 for feature selection performance (left two plots of Fig 13). The training and validation accuracy are very similar and very high for both types of k. The training accuracy is slightly higher for naive k = 10, but there is more of a drop in its validation accuracy, which suggests possible overfitting. The validation accuracy for informed kα = 1/2 is closer to its training accuracy, which suggests that its training accuracy is a better estimation of the true accuracy.

10 Discussion

Nearest-neighbor distance-based feature selection is a class of methods that are relatively simple to implement, and they perform well at detecting interaction effects in high dimensional data. Theoretical analysis of the limiting behavior of distance distributions for various data types and dimensions may lead to improved hyperparameter estimates of these feature selection methods. Furthermore, these theoretical results may help guide the choice of distance metric for a given dataset. Most often, distance-based feature selection methods use the Lq metric (Eq 1) with q = 1 or q = 2. However, these two realizations of the Lq metric have considerably different behavior for the mean and variance of their respective limiting distributions. For instance, the expected distance for L1 and L2 for standard normal data is proportional to p (Eq 41 and Fig 3) and

These results can inform the choice of L1 or L2 depending on context. For instance, distances become harder to distinguish from one another in high dimensions, which is one of the curses of dimensionality. In the case of L2, the asymptotic distribution (

We derived distance asymptotics for some of the most commonly used metrics in nearest-neighbor distance-based feature selection, as well as two new metrics for GWAS (Eq 115) and a new metric for time-series correlation-based data (Eqs 158 and 166) like resting-state fMRI. These are novel results that show the behavior of distances in random data. We also extended the asymptotic results of the standard Lq metrics to derive new estimates of the mean and variance of the attribute range-normalized Lq (max-min) distance for standard normal (Eq 92) and standard uniform (Eq 100) data using extreme value theory. Our derivations provide an important reference for those using nearest-neighbor feature selection or classification methods in common bioinformatics data. In particular, the range-normalized asymptotic results apply directly to Relief-based algorithms that use the range of each attribute to constrain its score to be within [−1, 1].

We derived the asymptotic mean and variance of the recently developed transition-transversion (TiTv) metric (Eq 112) for nearest-neighbor feature selection in GWAS data [30]. Our novel asymptotic estimates for the TiTv metric, as well as for the GM (Eq 110) and AM (Eq 111) metrics, provide an important reference to aid in neighborhood parameter selection for GWAS. We also showed how the Ti/TV ratio η (Eq 127) and minor allele frequency (or success probability) fa affect these discrete distances. For the GM and AM metrics, the distance is solely determined by the minor allele frequencies because the genotype encoding is not taken into account. We showed how both minor allele frequency and Ti/Tv ratio uniquely affects the TiTv distance (Fig 7A and 7C). Because transversions are more disruptive forms of mutation than transitions, this additional dimension of information is important to consider, which is why we have provided asymptotic results for this metric.

We developed a new nearest-neighbor metric for time-series correlation-based data, motivated in part by feature selection for resting-state fMRI studies. The new metric (Eq 157) allows us to use regions of interest (ROIs) as attributes. Previously Relief-based methods would only compute the importance of ROI-ROI pairs based on differential correlation, but this new metric allows one to compute the individual contribution of each ROI. Nearest-neighbor feature selection would be a useful tool for case-control studies to determine important ROIs due to interactions and to help elucidate the network structure of the brain as it relates to the phenotype of interest. With our new rs-fMRI metric (Eq 158), we can apply NPDR or any other nearest neighbor feature selection algorithm to determine the importance of individual ROIs in classifying important phenotypes (e.g., major depressive disorder versus healthy controls).

In addition to asymptotic Lq distance distributions, we also provided the exact distributions for the one-dimensional projection of the Lq distance onto individual attributes (Sections. 3.2.3, 5.4, and 6.2). These distributions are important for all nearest-neighbor distance-based feature selection algorithms, such as Relief or NPDR, because the Lq distance is a function of the one-dimensional attribute projection (diff). In particular, these projected distance distributions are important for improving inference for predictors in NPDR, which are one-dimensional attribute projections.

Deviations from Gaussian for the distribution of the pairwise distances could be an indication of interaction or other statistical effects in the data. We explored Gaussianity of Manhattan distances in real gene expression microarrays (S26-S124 Figs in S1 File) and rs-fMRI data (S125, S126 Figs in S1 File). In most of the cases, we found distances are approximately normally distributed after standardizing samples to be zero mean and unit variance. One implication of this is that we can roughly predict how many neighbors to expect within a fixed radius about a given target instance. In the cases where the distribution deviates from Gaussian, an important future goal is to understand how the expected moments are modified. This will help us identify fixed-k neighborhoods for NPDR feature selection that avoid the potentially high variability of radius-based neighborhood sizes and increase the power to detect important statistical effects. Another future direction is to apply the asymptotic techniques to derive means and variances for other new metrics such as set-theoretic distance measures [31, 32].

In addition to interaction effects, correlation between attributes and instances can cause significant deviations from the asymptotic variances derived in this work, which assumed independence between variables. To illustrate this deviation, we showed how strong correlations lead to positive skewness in the distance distribution of random normal, binomial, and rs-fMRI data (Fig 12A, 12B and 12C). Pairwise correlation between attributes causes very little change to the average distance, so our mean asymptotic results for uncorrelated data also are good approximations when attributes are not independent. In contrast, the sample variance of distances deviates from the uncorrelated case substantially as the average absolute pairwise attribute correlation increases (Eq 176). For fixed or adaptive-radius neighborhood methods, this deviation can increase the probability of including neighbors for a given instance and may reduce the power to detect interactions. A future goal is to derive formulas for the variance of metrics that adjust for correlation in the data. The increased variance for distances with correlated data may inform the choice of metric and optimization of neighborhoods in nearest-neighbor feature selection.

References

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

Theoretical properties of distance distributions and novel metrics for nearest-neighbor feature selection

Theoretical properties of distance distributions and novel metrics for nearest-neighbor feature selection