Competing Interests: A.R. acknowledges funding from Equinor, Vestas, Vattenfall, the industrial partners of the Norwegian Research Council. There are no patents, products in development or marketed products to declare. This does not alter our adherence to PLOS ONE policies on sharing data and materials.

- Altmetric

Hybrid physics-machine learning models are increasingly being used in simulations of transport processes. Many complex multiphysics systems relevant to scientific and engineering applications include multiple spatiotemporal scales and comprise a multifidelity problem sharing an interface between various formulations or heterogeneous computational entities. To this end, we present a robust hybrid analysis and modeling approach combining a physics-based full order model (FOM) and a data-driven reduced order model (ROM) to form the building blocks of an integrated approach among mixed fidelity descriptions toward predictive digital twin technologies. At the interface, we introduce a long short-term memory network to bridge these high and low-fidelity models in various forms of interfacial error correction or prolongation. The proposed interface learning approaches are tested as a new way to address ROM-FOM coupling problems solving nonlinear advection-diffusion flow situations with a bifidelity setup that captures the essence of a broad class of transport processes.

Introduction

Numerical simulations are the workhorse for the design, testing, and implementation of scientific infrastructure and engineering applications. While immense advances in computational mathematics and scientific computing have come to fruition, such simulations usually suffer a curse of dimensionality limiting turnaround. The field of multifidelity computing, therefore, aims to address this computational challenge by exploiting the relationship between high-fidelity and low-fidelity models. One such multifidelity approach becomes crucial, especially for multi-query applications, such as optimization, inference, and uncertainty quantification, that require multiple model evaluations in an outer-workflow loop. To this end, sampling-based approaches have been often introduced to leverage information from many evaluations of inexpensive low-fidelity models fused by only a few carefully selected high-fidelity computations. An excellent review of the state-of-the-art multifidelity approaches for outer-loop contexts can be found in [1].

In this paper, we focus on a different type of multifidelity formulation targeting domain decomposition type problems that consist of multiple zones with different characteristics as well as multiphysics systems where different levels of solvers are devoted to coupled physical phenomena. A key aspect of the zonal multifidelity approach is its ability to handle intrinsic heterogeneous physical properties, varying geometries, and underlying governing dynamics. This heterogeneity can be mild as in aerospace applications with spatially varying parameters. However, in media where there is a permittivity such as in electrostatics or porous media, this might be more pronounced. For example, fluid flow in rock often follows Darcy’s law, whereas flow in a fracture is modeled as Poiseuille flow. Moreover, a related process in subsurface flows might include a high fidelity approach around wells (that drive the flow) and a low-fidelity model for subdomains in the interior [2, 3]. This discussion can also be extended to an active flow control problem to elucidate the concept of the zonal multifidelity approach that we tackle in this work. In general, boundary-layer control poses a grand challenge in many aerospace applications including lift enhancement, noise mitigation, transition delay, and drag reduction. Among many other actuator technologies, blooming jets [4–6] and sweeping jets [7–9] offer new prospective solutions in improving the aerodynamics efficiency and performance of the future air vehicle systems. The size of these actuators is usually orders of magnitude smaller than the length scales of the entire computational domain (e.g., an aircraft wing or tail). Including the full representations of each controller’s internal flow dynamics in a comprehensive numerical analysis of the entire system is an extremely daunting approach [10]. Meanwhile, the effective flow physics of these actuators can often be accurately characterized by a latent reduced order space due to the existence of strong coherent structures such as quasi-periodic or time-periodic shedding, pulsation, or jet actuation. Therefore, in practice, those flow actuators can be modeled by considering a reduced order surrogate coupled and tied to the global simulation of the whole wing or tail [11, 12].

The above examples illustrate that different levels of models and descriptions can be devoted to different zones and components of the problem in order to allocate computational resources more effectively and economically. This might be the case for many other coupled multiphysics systems, such as geometric multiscale [13–18] and heterogeneous multiscale [19, 20] problems, fluid-structure interactions [21], and industrial scale applications [22–24]. Since various zones and/or physics in these systems are connected through interfaces, data sharing, and consistent interface treatment among respective models are inevitable. Likewise, multirate and locally adaptive stepping methods can yield a mismatch at the space-time interface, and simple interpolation or extrapolation might lead to solution divergence or instabilities [25]. Moreover, even if we are interested in simulating just one portion of the domain corresponding to some specific dynamics, we still need to specify the physically consistent interface conditions. Running a high fidelity solver everywhere only to provide the flow state at the interface seems to be unreasonable. Therefore, we consider formulating an interface modeling approach to facilitate the development of efficient and reliable multifidelity computing. This should serve and advance the applicability of the emerging digital twin technologies in many sectors [26]. However, just like any technology, it comes with its own needs and challenges [27–32]. In practice, two modeling paradigms are in order.

Physics-based modeling: This approach involves careful observation of a physical phenomenon of interest, development of its partial understanding, expression of the understanding in the form of mathematical equations, and ultimately, solution of these equations. Due to the partial understanding and numerous assumptions along the steps from observation to the solution of the equations, a large portion of the essential governing physics might be, intentionally or unintentionally, ignored. The applicability of high fidelity simulators with minimal assumptions has so far been limited to the offline design phase only. Despite this significant drawback, what makes these models attractive are sound foundations from first principles, interpretability, generalizability, and existence of robust theories for the analysis of stability and uncertainty. However, most of these models are generally computationally expensive, do not adapt to new scenarios automatically, and can be susceptible to numerical instabilities.

Data-driven modeling: With the abundant supply of big data, open-source cutting edge and easy-to-use machine learning libraries, cheap computational infrastructure, and high quality, readily available training resources, data-driven modeling has become very popular. Compared to the physics-based modeling approach, these models thrive on the assumption that data is a manifestation of both known and unknown physics and hence when trained with an ample amount of data, the data-driven models might learn the full physics on their own. This approach, involving in particular deep learning, has started achieving human-level performance in several tasks that were until recently considered impossible for computers. Notable among these are image classification [33], dimensionality reduction [34], medical treatment [35], smart agriculture [36], physical sciences [37–39] and beyond. Some of the advantages of these models are online learning capability, computational efficiency for inference, accuracy even for very challenging problems as far as the training, validation and test data are prepared properly. However, due to their data-hungry and black-box nature, poor generalizability, inherent bias and lack of robust theory for the analysis of model stability, their acceptability in high stake applications like digital twin and autonomous systems is fairly limited. In fact, the numerous vulnerabilities of deep neural networks have been exposed beyond doubt in several recent works [40–42].

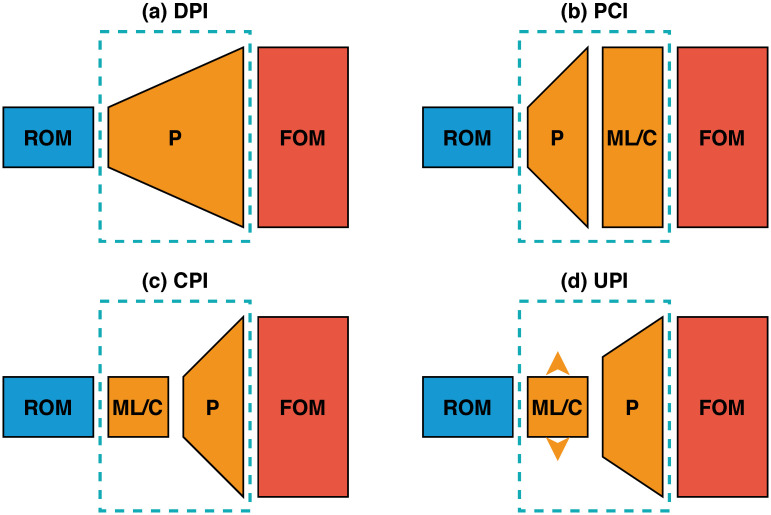

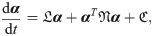

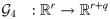

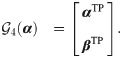

In this work, we put forth a hybrid analysis and modeling (HAM) framework as a new paradigm in modeling and simulations by promoting the strengths and mitigating the weaknesses of physics-driven and data-driven modeling approaches. Our HAM approach combines the generalizability, interpretability, robust foundation and understanding of physics-based modeling with the accuracy, computational efficiency, and automatic pattern-identification capabilities of advanced data-driven modeling technologies. In the context of multifidelity computing, we advocate and explore the utilization of statistical inference to bridge low-fidelity and high-fidelity descriptions. In particular, we adopt the long short-term memory (LSTM) neural network to match the reduced order model (ROM) and full order model (FOM) solutions at their intersect. To form the building blocks of our HAM approach for coupling ROM and FOM descriptions, we introduce an array of interface modeling paradigms as depicted in Fig 1 and described next.

The proposed multifidelity concepts toward hybrid ROM/FOM coupling.

Dashed blocks refer to the interface learning approaches introduced in the present work: (a) Direct Prolongation Interface (DPI), (b) Prolongation followed by a machine learning Correction Interface (PCI), (c) machine learning Correction followed by a Prolongation Interface (CPI), and (d) Uplifted Prolongation Interface (UPI) where the latent space is enhanced through machine learning before we apply the prolongation operator.

The Direct Prolongation Interface (DPI) approach utilizes standard projection based ROMs, where the system’s state at the interface is obtained by the reconstruction of a Galerkin projection ROM solution. However, traditional Galerkin ROM often yields an inaccurate solution in case of systems with strong nonlinearity. Therefore, we utilize machine learning to correct and augment ROM solution in a hybrid framework, as follows.

In the Prolongation followed by machine learning Correction Interface (PCI) methodology, an LSTM is used to rectify the field reconstruction from Galerkin ROM at the interface by learning the correction in the higher dimensional space. Although this seems to be a straightforward implementation, it might amount to learning a high dimensional correction vector, especially for two- and three-dimensional domains.

To mitigate the potential computational challenges dealing with excessively large input/output vectors, a machine learning Correction followed by Prolongation Interface (CPI) approach can be employed to provide a closure effect to remedy the instabilities and inaccuracies of Galerkin ROM due to modal truncation.

For CPI, the LSTM learns the correction terms in ROM space, defined by the number of modes in ROM approximation. As a result, the reconstruction quality will eventually be limited by the Galerkin ROM dimension. Therefore, the Uplifted Prolongation Interface (UPI) framework not only corrects the Galerkin ROM solution, but also expands the ROM subspace to enhance the reconstruction quality. Our primary motivation in this paper is to describe and test these four interface modeling approaches to tackle ROM/FOM coupling problems and show how we can elucidate these multifidelity mechanisms within the HAM framework.

ROM-FOM coupling framework

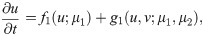

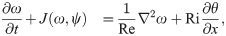

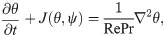

In order to demonstrate the performance of the introduced HAM approaches for ROM-FOM coupling, we consider a coupled system as follows,

Reduced order model

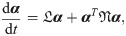

Introducing a spatial discretization to Eq (1), it can be rewritten in a semi-discrete continuous-time as follows,

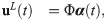

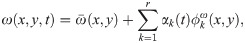

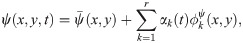

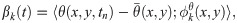

We exploit the advances and developments of ROM techniques to build surrogate models to economically resolve portions of domain and/or physics. The ROM solution can thus be used to infer the flow conditions at the interface so that a FOM solver can be efficiently employed for the sub-domains of interest. The standard Galerkin ansatz is applied for the dynamics of u as

In the following, we formulate the four methodologies outlined in Fig 1 to match the ROM solution for α with the FOM solution at the interface. For all cases, a ROM representation is adopted for u, which can be economically solved to compute an estimate of the interface flow condition to feed the FOM solver of v. For example, in multi-component systems like that depicted in Fig 2, the ROM solution at the interface is regarded as a boundary condition for the FOM.

DPI: Direct Prolongation Interface. The objective of the DPI approach is to recover the flow variables at the interface from the ROM solution (i.e., the time integration of Eq (6)). In other words, we seek to learn a mapping

Since the ROM approximation is built upon the assumption of representing the flow within a low order subspace, the approximation given by Eq (4) basically introduces a projection error. This error can be significant for complex systems, where the flow dynamics are characterized by a wide spectrum while only few modes are considered to minimize the computational burden of solving the ROM. Moreover, the nonlinear interactions as well as the modal truncation coupled with the Galerkin projection methodology usually cause Eq (6) to yield erroneous predictions of the coefficients α(t). Therefore, the solution from the DPI approach is potentially inaccurate [46]. Consequently, the reconstruction

PCI: Prolongation followed by Correction Interface. The PCI framework aims to correct the mapping

We highlight that the PCI approach can be feasible for one-dimensional (1D) problems (where the interface can be just a single point). However, for higher dimensional systems, the sizes of input and output vectors grow rapidly (unless a too coarse mesh is adopted). For such cases, PCI becomes prohibitive, and the learning and correction should be performed in a reduced latent space instead.

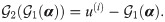

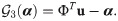

CPI: Correction followed by Prolongation Interface. The CPI methodology works by introducing the correction in the latent subspace, rather than the FOM space. This is especially crucial for 2D and 3D configurations. In particular, the CPI aims at curing the deviation in modal coefficients predicted from solving the Galerkin ROM equations, known as closure error. Due to the modal cut-off in ROM approximation, Eq (6) does not necessarily capture the true projected trajectory of α(t). Therefore, we introduce an LSTM mapping

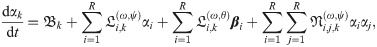

UPI: Uplifted Prolongation Interface. Although the CPI methodology cures the closure error and provides a stabilized solution, it does not address projection error. Unless a large number of modes are resolved, the projection error can be significant, especially for problems with discontinuities and shocks. To deal with those situations, an uplifting ROM has been proposed [46], where both closure and projection errors are taken care of. For closure, similar to CPI, the Galerkin ROM predictions are tuned to match their true projection values. In addition, following Galerkin ROM solution, the ROM subspace is expanded to capture some of the smaller scales missing in the initial subspace as follows,

Therefore, a complementary model for β has to be constructed so that the uplifting approach can be employed. To accomplish this, a mapping from the first r modal coefficients to the next q modes is assumed to exist. Nonlinear Galerkin projection has been pursued to express this mapping as

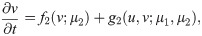

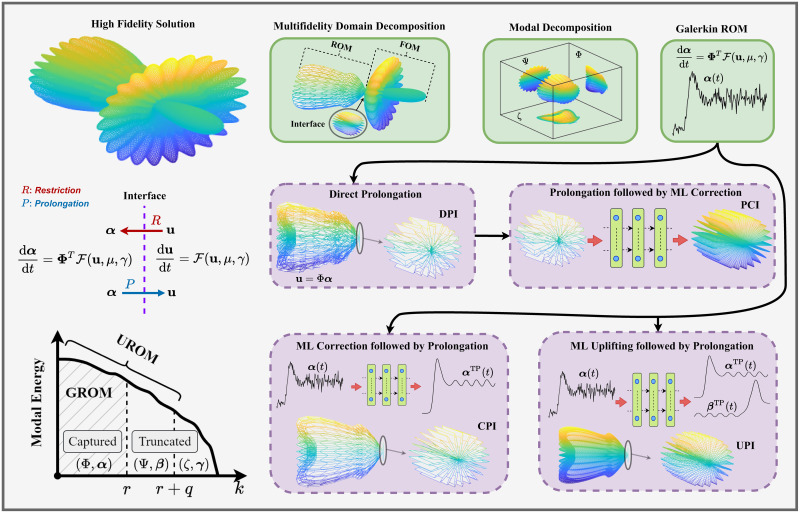

Schematic illustration of the methodologies introduced to utilize ROM to economically provide sound interface conditions in a multifidelity domain decomposition problems.

Galerkin ROM yields inaccurate predictions (represented by rough curve), and direct prolongation of these results might be not efficient. PCI adds a correction effect to the prolonged solution in FOM space. Instead, CPI and UPI introduce the correction at ROM level before prolongation. UPI adds an extra superresolution effect to augment solution quality.

The ROM-FOM coupling philosophy as well as the introduced interface learning approaches are summarized in the cartoon shown in Fig 2. These methodologies are also applicable to a wide range of computational problems with multifidelity domain decomposition. The depicted system is assumed to be fully characterized by three mutually orthogonal sets of basis functions, namely Φ, Ψ, and ζ as below

On the other hand, CPI treats the Galerkin ROM deficiencies at the ROM level. In particular, it introduces a closure effect to better predict α. This closure simply compensates the effects of truncated modes (i.e., Ψ and ζ) onto the dynamics of Φ. This yields a better estimate of α, as illustrated by the smooth curve in Fig 2. We note that in CPI, the effects of Ψ and ζ are only considered to improve the prediction of α. However, their contributions to the solution manifold reconstruction are not included, resulting in a substantial reconstruction error (projection error). To deal with this caveat, UROM seeks to add a superresolution enhancement by incorporating the Ψ set of basis into the reconstruction step by learning the dynamics of the corresponding β coordinates. This is represented by a higher resolution (denser) reconstruction in UPI case, compared to CPI, and DPI. Note that the PCI is still showing the highest resolution (the densest reconstruction) as it nudges the prediction at the interface to its FOM counterpart (i.e., including all Φ, Ψ, and ζ).

Demonstrations

We demonstrate the ROM-FOM coupling methodologies using two examples of varying complexities. In the first one, we describe a fluid flow scenario over a bizonal domain with heterogeneous physical properties using the one-dimensional Burgers problem. For this case, we shall see that the interface between different sub-domains is defined by a single point (i.e., d = 1). Second, we consider the Marsigli flow problem represented by the two-dimensional Boussinesq equations to demonstrate the ROM-FOM coupling for multiphysics systems. In particular, a ROM solver is devoted for the mass and momentum transport equations while a FOM is reserved for the energy transport.

The one-dimensional Burgers problem

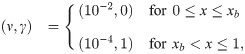

In order to represent a zonal multifidelity simulation, we consider the following one dimensional (1D) viscous Burgers problem,

The discretized domain is divided into a left zone with

For data generation, we solve the full order model representing the 1D Burgers equation (Eq (16)) over the entire domain using a spatial mesh resolution of 4096 and time step of 2.5 × 10−6. For initial condition, we consider a square-like wave defined as,

For ROM construction, we consider the truncated solution snapshots for the left part of the domain (i.e., 0 ≤ x ≤ 0.75) for t ∈ [0, 1]. For POD basis generation, we use only 200 snapshots distributed evenly from t = 0 to t = 1, to reduce the computational cost of solving the corresponding eigenvalue problem. Once ROM is constructed, it is integrated in time with a time step of 2.5 × 10−4 to match the time step in the right part of the domain (to be handled via a FOM solver). During the deployment phase of the coupled system, the ROM feeds the FOM solver with the boundary condition at xb. On the other hand, the effects of the interface on the ROM dynamics are considered during the offline stage of data generation and basis construction.

We utilize labelled data at the time interval of t ∈ [0, 1] for the training and validation of the LSTM neural networks. In particular, four fifths of the collected data set are randomly selected for training while the remaining one fifth is reserved for validation purposes (to avoid overfitting). We highlight that numerical experiments reveal that the performance in our case is not significantly sensitive to the neural network hyperparameters. Thus, we manually tune the hyperparameters during the training and validation stages by repeating the previous procedure multiple times while varying the seeds for the random number generator and using averaged loss values for assessment. We adopt an LSTM architecture of 2 layers with 20 cells in each layer. In the meantime, we note that automated hyperparameter search tools can be utilized for optimized architectures. Testing of the proposed schemes is performed for t ∈ [0, 2], corresponding to a temporal extrapolation behavior with respect to the training interval.

Since the coupling between ROM and FOM is represented by the physical interface at xb, we notice that the Θ in the DPI map (i.e.,

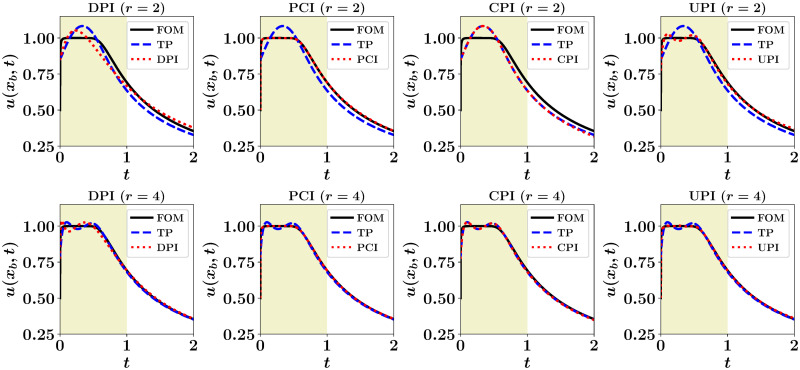

Velocity at the interface obtained by considering ROM in the left part of the domain, with r = 2 (top) and r = 4 (bottom).

FOM solution corresponds to solving the governing equation over the entire domain, while TP denotes the projection of the FOM solution in the left zone onto the corresponding POD subspace.

It can be seen that DPI results, especially for r = 2, are not very accurate due to the mutual effects of modal truncation and model nonlinearity in Galerkin ROM. On the other hand, the PCI solution gives almost perfect match with FOM. As the PCI approach nudges the prolonged ROM solution to its FOM counterpart, it gives even higher accuracy than TP. That is TP is limited by the maximum quality that can be obtained using a rank-r approximation. For CPI, since the LSTM introduces a closure effect, it steers the ROM results to match the TP solution. Finally, the UPI recovers some of the smaller scales (truncated modes) so it yields better reconstruction than TP since it spans a larger subspace. For r = 2, UPI uplifts the solution to a rank-4 approximation, while for r = 4, it is uplifted to a rank-8 approximation.

For quantitative assessment, we document the ℓ2 norm of the deviation of the predicted velocity at the interface compared to the FOM solution in Table 1. Results are reported for r ∈ {2, 4, 8}. We see that the error in the CPI case almost matches that of TP, while PCI gives the highest accuracy since it is trained to learn the correction with respect to the FOM solution. Also, CPI results are significantly close to TP, illustrating the closure effect introduced by CPI to account for the effect of modal truncation on ROM dynamics. Another interesting observation is that UPI quality at a given value of r is equivalent to TP with twice that value. This indicates that UPI is able to give a superresolution effect up to 2r (since we select q = r). Also, we notice that at r = 2, DPI yields lower ℓ2 norm than TP. This is because TP solution is obtained by the projection of the FOM solution onto the POD subspace generated using data at t ∈ [0, 1]. So, this subspace is optimal only for t ∈ [0, 1], while testing is performed up to t = 2. Therefore, TP solution no longer represents the best rank-r approximation beyond t = 1.

| Setup | r = 2 | r = 4 | r = 8 |

|---|---|---|---|

| TP | 4.56 | 1.25 | 0.21 |

| DPI | 3.67 | 1.46 | 0.44 |

| PCI | 0.23 | 0.07 | 0.03 |

| CPI | 4.57 | 1.27 | 0.23 |

| UPI | 1.28 | 0.24 | 0.11 |

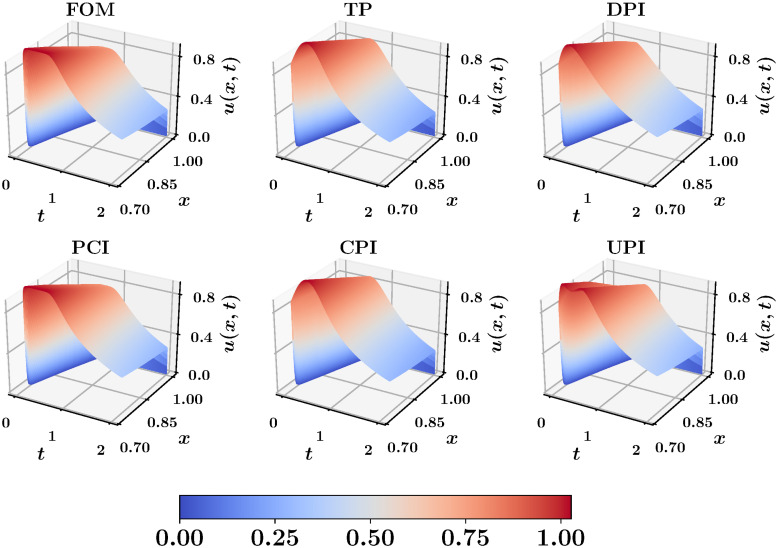

Finally, we investigate the coupling efficiency by solving the right part (i.e., 0.75 ≤ x ≤ 1) using a high fidelity FOM solver applied only onto this subdomain. This is fed with a boundary condition u(0.75, t) from the low-fidelity interface learning approaches described before. Fig 4 shows the spatiotemporal velocity profile with r = 2, compared to the FOM predictions. Again, we observe that the solution with PCI boundary is similar to this FOM solution. Also, CPI matches the TP results, but they both smooth-out the surface peak because of the low rank limitations. Although it seems that the accuracy of UPI cannot exceed that of CPI with r + q assuming optimal performance for both CPI and UPI, there is a computational side in this comparison. The CPI with r + q modes implies the solution of a Galerkin ROM with a dimension of r + q, while the UPI requires the solution of a Galerkin ROM with r. We have seen that for fluid flows with quadratic nonlinearity, the computational cost of solving a Galerkin ROM scales cubically with the number of modes. Thus, the implementation of CPI with r + q with q = r is about 8 times more costly than UPI with r + q. At this point, we highlight that the selection of r and q is highly dependent on the problem in hand, the corresponding decay of POD eigenvalues, and the level of accuracy sought. A compromise between computational cost of solving a Galerkin ROM with r and the corresponding stability, the amount of information captured by r and r + q modes, and reliability of UPI with r + q is always in place.

Spatio-temporal velocity profile obtained from applying high fidelity (FOM) solver onto the right subdomain (0.75 ≤ x ≤ 1), fed with interface boundary from a low-fidelity (ROM) solution with r = 2.

The two-dimensional Boussinesq problem

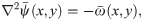

Boussinesq equations represent a simple approach for modeling geophysical waves such as oceanic and atmospheric circulations induced by temperature differences [49] as well as other situations, like isothermal flows with density stratification. In the Boussinesq approximation, variations of all fluid properties other than the density are neglected completely. Moreover, the density dependence is ignored in all terms except for gravitational force (giving rise to buoyancy effects). As a result, the continuity equation can be adopted in its constant density form, and the momentum equation can be simplified significantly. The dimensionless form of the 2D incompressible Boussinesq equations in vorticity-streamfunction formulation can be represented by the following two coupled transport equations [50, 51],

We utilize the 2D Boussinesq equation to illustrate the ROM-FOM coupling for multiphysics situations. In particular, we suppose that we are more interested in the temperature field predictions. Thus, we dedicate a FOM solver for Eq (24) However, we see that the solution of this equation requires evaluating the streamfunction field at each time step. The kinematic relationship between vorticity and streamfunction is given by the following Poisson equation,

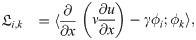

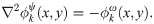

Thus, the Galerkin ROM of Eq (23) can be written as

We notice that the ROM defined by Eq (30) equipped with Eq (27) can be adopted to approximate the instantaneous streamfunction field, which is required to solve Eq (24) for the temperature in FOM space. On the other hand, the solution of the FOM (i.e., Eq (24)) along with Eq (31) feeds the ROM solver with β values. This constitutes a two-way ROM-FOM coupling problem, in contrast to the one-way coupling in the aforementioned 1D Burgers example. We also highlight that the computational cost of the projection step (i.e., Eq (31)) is minimal compared to the solution of Eq (24).

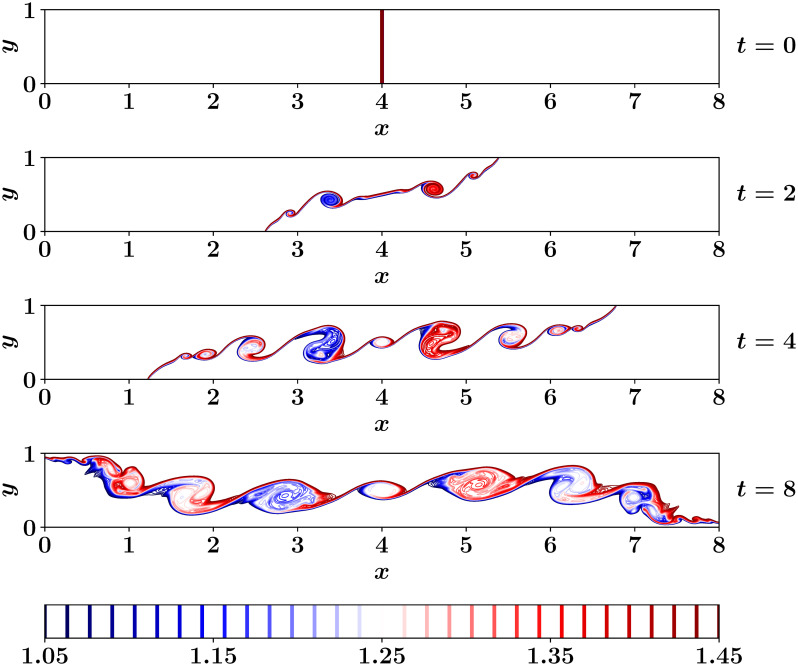

For demonstration, we consider a strong-shear flow exhibiting the Kelvin-Helmholtz instability, known as Marsigli flow or lock-exchange problem. The physical process in this flow problem explains how differences in temperature/density can cause currents to form in the ocean, seas and natural straits. For example, Marsigli discovered that the Bosporus currents are a consequence of the different water densities in the Black and Mediterranean seas [52]. Basically, when fluids of two different densities meet, the higher density fluid slides below the lower density one. This is one of the primary mechanisms by which ocean currents are formed [53].

The problem is defined by two fluids of different temperatures, in a rectangular domain (x, y) ∈ [0, 8] × [0, 1] with a a vertical barrier dividing the domain at x = 4, keeping the temperature, θ, of the left half at 1.5 and temperature of the right half at 1. Initially, the flow is at rest (i.e., ω(x, y, 0) = ψ(x, y, 0) = 0), with uniform temperatures at the right and left regions (i.e., θ(x, y, 0) = 1.5 ∀ x ∈ [0, 4] and θ(x, y, 0) = 1 ∀ x ∈ (4, 8]). Free slip boundary conditions are assumed for flow field, and adiabatic boundary conditions are prescribed for temperature field. Reynolds number of Re = 104, Richardson number of Ri = 4, and Prandtl number of Pr = 1 are set in Eqs (23) and (24). A Cartesian grid of 4096 × 512, and a timestep of Δt = 5 × 10−4 are used for the FOM simulations. Standard second-order central finite difference schemes are adopted for the discretization of linear terms while the second order Arakawa scheme [54] is used to compute the Jacobian term. The evolution of the temperature field is shown in Fig 5 at t = 0, 2, 4, 8. At time zero, the barrier is removed instantaneously triggering the lock-exchange problem, where the higher density fluid (on the right) slides below the lower density fluid (on the left) causing an undercurrent flow moving from right to left. Conversely, an upper current flow moves from left to right, causing a strong shear layer between the counter-current flows. As a result, vortex sheets are produced, exhibiting the Kelvin-Helmholtz instability.

Temperature field at different time instances for 2D Boussinesq problem using 4096 × 512 grid and Δt = 0.0005.

Considering the dimensionality of this problem, we emphasize that the PCI approach becomes highly unfeasible. For example, with the current mesh resolution (i.e., 4096 × 512), the dimension of the state space of the prolonged interface is ≈ 2 × 106. Building and training of LSTMs with an input and output vector sizes of two millions become prohibitive. Even the training of convolutional neural network with such high resolutions (which are typical in fluid flow simulations) requires excessive computational resources. Therefore, we present results for ROM-FOM coupling using approaches that operate in ROM space (i.e., DPI, CPI and UPI). We note that 800 time snapshots are 454 stored for POD basis construction. For the Galerkin ROM solver, r = 8 modes are retained. We also utilize the dataset of the stored 800 snapshots for LSTM training and validation (80% randomly selected for training and the rest for validation, similar to the previous example). A two-layer LSTM with 20 cell in each layer constitutes our LSTM architecture. During the testing phase, the trained neural networks are deployed at each and every timestep. This corresponds to the application of the presented approached 16000 times.

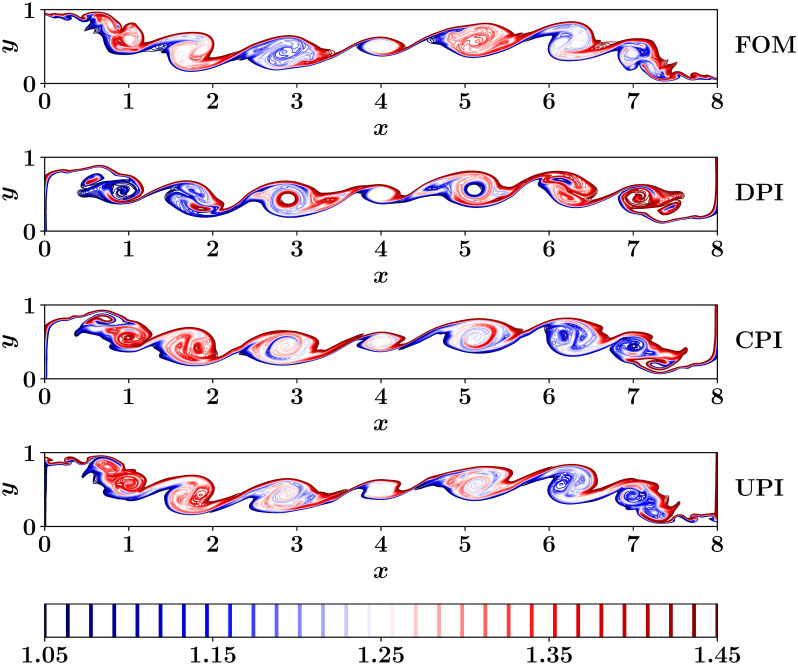

Fig 6 shows the predictions of the temperature field at final time (i.e., t = 8) computed from DPI, CPI, and UPI approaches compared to the FOM field. We emphasize that the ROM-FOM coupling results correspond to the solution of the vorticity equation with a ROM solver, which feeds the FOM solver with streamfunction to solve the temperature equation only as opposed to the FOM results which comes from the solution of both the 2D Boussinesq equations using a FOM simulation. Although the CPI results are better than those of DPI, we can observe that the fine details of the flow field are not accurately captured. That is 8 modes are not sufficient to sufficiently represent the flow field. This is a common problem for convection-dominated flows which exhibit slow decay in the POD eigenvalues and the generated global basis functions suffer from modal deformation [55]. On the other hand, the implementation of the UPI approach with r = 8 and q = 8 recovers an increased amount of the fine flow structures that are not well-represented by the first r = 8 modes.

Final temperature fields as obtained from different ROM-FOM coupling approached, compared to the FOM solution.

We note that the PCI becomes infeasible for higher dimensional systems.

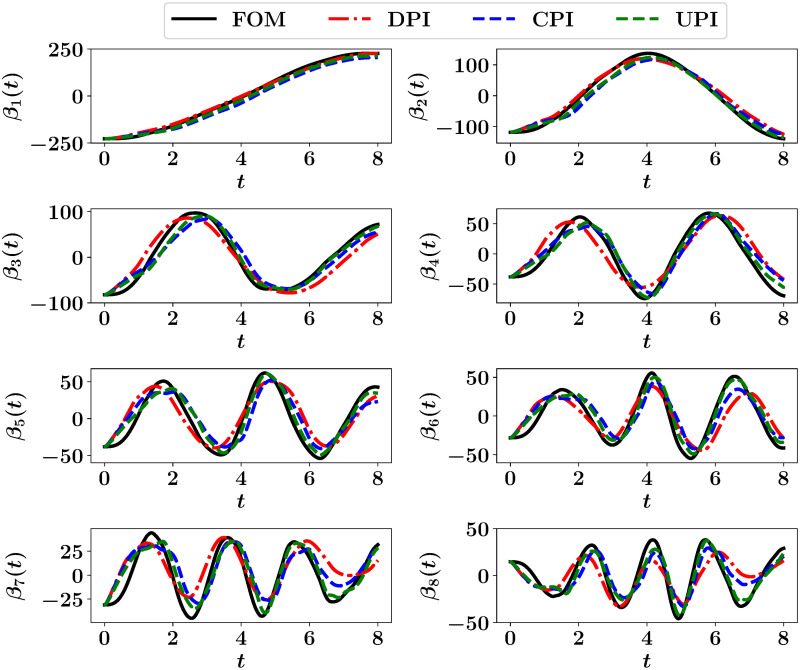

Although the ROM is built for the vorticity equation only, the basis functions of the temperature fields should be generated as well to carry-out the coupling from FOM to ROM. Moreover, in order to illustrate the temporal variation of the coupling quality, we project the resolved temperature fields at different times onto their POD basis. This is depicted in Fig 7, showing the effect of different approaches on the resulting predictions of temperature fields. The FOM trajectory corresponds to the solution of both the 2D Boussinesq equations in FOM space, then projecting the obtained fields on the basis functions of θ (see Eq (31)). For the rest, the streamfunction fields are obtained from ROM predictions and fed into FOM solver to compute the temperature fields.

Projection of the predicted temperature fields at different times from FOM, DPI, CPI and UPI onto the POD basis function.

Conclusions

We provide an interface learning approach via ROM-FOM coupling for multifidelity simulation environments. This learning paradigm is built with a hybrid analysis and modeling (HAM) framework to enhance the ROM approximation of interface conditions. A demonstration with a bizonal 1D Burgers problem is considered to assess the performance of the introduced learning schemes for multi-component systems. For 1D problems, we find that a prolongation followed by a machine learning correction interface (PCI) yields very good predictions. However, this might be unfeasible for 2D and 3D cases, where a correction in ROM subspace is essential. For such, a machine learning correction in ROM space followed by a prolongation interface (CPI) can produce sufficient accuracy. For complex systems where the projection error is significantly large, an uplifted prolongation interface (UPI) methodology can be adopted to recover some of the truncated scales. This is further illustrated using the lock-exchange problem governed by the 2D Boussinesq problem, where the ROM and FOM solvers address the vorticity and temperature equations, respectively. The coupling from ROM to FOM is represented by the 2D streamfunction fields reconstructed from the ROM solver, saving the run time for Possion equation, which is the most demanding part of an incompressible flow solver. Owing to the relative simplicity, robustness and ease of these interface learning methods, we expect a growing number of applications in a large variety of interfacial problems in science and engineering. Of particular interest, this ROM-FOM coupling could be a viable method for developing next generation digital twin technologies.

Acknowledgements

O.S. gratefully acknowledges the U.S. DOE Early Career Research Program support. A.R. acknowledges support from Operational Control for Wind Power Plants (OPWIND) project and its industries partners (Equinor, Vestas, Vattenfall).

References

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

Multifidelity computing for coupling full and reduced order models

Multifidelity computing for coupling full and reduced order models