Competing Interests: For a portion of time during this study, GWT received salary from a commercial company: Google. This does not alter our adherence to PLOS ONE policies on sharing data and materials. Specifically, during this time GWT worked on this study in his capacity as a professor at the University of Guelph. This appointment was ongoing during his sabbatical at Google.

- Altmetric

Reasoning about graphs evolving over time is a challenging concept in many domains, such as bioinformatics, physics, and social networks. We consider a common case in which edges can be short term interactions (e.g., messaging) or long term structural connections (e.g., friendship). In practice, long term edges are often specified by humans. Human-specified edges can be both expensive to produce and suboptimal for the downstream task. To alleviate these issues, we propose a model based on temporal point processes and variational autoencoders that learns to infer temporal attention between nodes by observing node communication. As temporal attention drives between-node feature propagation, using the dynamics of node interactions to learn this key component provides more flexibility while simultaneously avoiding issues associated with human-specified edges. We also propose a bilinear transformation layer for pairs of node features instead of concatenation, typically used in prior work, and demonstrate its superior performance in all cases. In experiments on two datasets in the dynamic link prediction task, our model often outperforms the baseline model that requires a human-specified graph. Moreover, our learned attention is semantically interpretable and infers connections similar to actual graphs.

Introduction

Graph structured data arise from fields as diverse as social network analysis, epidemiology, finance, and physics, among others [1–4]. A graph is comprised of a set of N nodes,

The focus of GNNs thus far has been on static graphs [1]— graphs with a fixed adjacency matrix A. However, a key component of network analysis is often to predict the state of a graph as A evolves over time. For example, knowledge of the evolution of person-to-person interactions during an epidemic facilitates analysis of how a disease spreads [11], and can be expressed in terms of the links between people in a dynamic graph. Other applications include predicting friendship, locations of players or some interaction between them in team sports, such as soccer [12, 13].

In many dynamic graph applications, edges can be: 1) short term (and usually frequent) interactions, such as direct contacts, messaging, passes in sports, or 2) long term intrinsic connections, such as sibling connections, affiliations, and formations. In practice, these long term edges are often specified by humans, and can be suboptimal and expensive to obtain. The performance of methods such as DyRep [14] rely heavily on the quality of long term edge information.

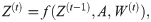

To alleviate this limitation, we take a different approach and infer long term structure jointly with the target task. The inferred structure is modelled as temporal attention between edges (Fig 1). We use DyRep [14] as a backbone model and Neural Relational Inference (NRI) [15] to learn attention.

A simple example illustrating the goal of our work, which is to learn temporal attention between nodes by observing the dynamics of events.

The learned attention is then used to make better future predictions. Dotted edges denote attention values yet to be updated.

We also propose a bilinear transformation layer for pairs of node features instead of concatenation as typically employed in prior work, including DyRep and NRI. On two dynamic graph datasets, Social Evolution [16] and GitHub (https://www.gharchive.org/), we achieve strong performance on the task of dynamic link prediction, and show interpretability of learned temporal attention sliced at particular time steps.

1 Related work

Prior work [13, 15, 17–21] addressing the problem of learning representations of entire dynamic graphs has tended to develop methods that are very specific to the application, with only a few shared ideas. This is primarily due to the difficulty of learning from temporal data in general and temporal graph-structured data in particular, which remains an open problem [3] that we address in this work.

RNN-based methods. Given an evolving graph

Temporal knowledge graphs. The problem of inferring missing links in a dynamic graph is analogous to the problem of inferring connections in a temporal knowledge graph (TKG). In a TKG, nodes and labelled edges represent entities and the relationships between them, respectively [23]. Each edge between two nodes encodes a real-world fact that can be represented as a tuple, such as: (Alice, Liked, Chopin, 2019). In this example, Alice and Chopin are the nodes, the fact that Alice liked Chopin is the relationship, and 2019 is the timestamp associated with that fact. It may be that Alice no longer likes Chopin, as tastes change over time. To allow these timestamps to inform link prediction, previous work has provided a score for the entire tuple together [23], or by extending static embedding methods to incorporate the time step separately [24–27]. These methods tend to focus on learning embeddings of particular relationships, instead of learning the entire TKG.

Dynamic link prediction. There are several recent papers that have explored dynamic link prediction. In DynGem [28], graph autoencoders are updated in each time step. The skip-gram model in [29] incorporates temporal random walks. Temporal attention is revisited in DySAT [30], whereas [31] learn the next graph in a sequence based on a reconstruction error term. DynamicTriad [32] uses a triad closure to learn temporal node embeddings. LSTMs are featured in both GC-LSTM [33] and DyGGNN [34], and both involve graph neural networks, although only the latter uses whole-graph encoding.

DyRep [14] is a method that has been proposed for learning from dynamic graphs based on temporal point processes [35]. This method:

supports two time scales of graph evolution (i.e. long-term and short-term edges);

operates in continuous time;

scales well to large graphs; and

is data-driven due to employing a GNN similar to (1).

These key advantages make DyRep favorable, compared to other methods discussed above.

Another interesting approach to the modeling of dynamic graphs is based on the neural Hawkes processes [36]. However, it is limited to a few event types, which was addressed only recently in [37]. This approach is similar to DyRep, since it also relies on the temporal point processes and uses a neural network to learn dynamic representation. In [14], DyRep was shown to outperform a particular version of the Hawkes processes, which further supports our choice of using DyRep as the baseline. We leave a detailed comparison between a more recent version of the Hawkes processes [37] and DyRep for future work.

Finally, closely related to our work, there are a few applications where graph

2 Background: DyRep

Here we describe relevant details of the DyRep model. A complete description can be found in [14]. DyRep is a representation framework for dynamic graphs evolving according to two elementary processes:

k = 0: Long-term association, in which nodes and edges are added or removed from the graph affecting the evolving adjacency matrix

k = 1: Communication, in which nodes communicate over a short time period, whether or not there is an edge between them.

For example, in a social network, association may be represented as one person adding another as a friend. A communication event may be an SMS message between two friends (an association edge exists) or an SMS message between people who are not friends yet (an association edge does not exist). These two processes interact to fully describe information transfer between nodes. Formally, an event is a tuple ot = (u, v, τ, k) of type k ∈ {0, 1} between nodes u and v at continuous time point τ with time index t. For example, the tuple o1 = (1, 3, 9:10AM, 1) would indicate that node u = 1 communicates with node v = 3 at time point t = 1 corresponding to τ = 9:10 in the morning.

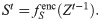

2.1 Node update

Each event between any pair of nodes u, v triggers an update of node embeddings

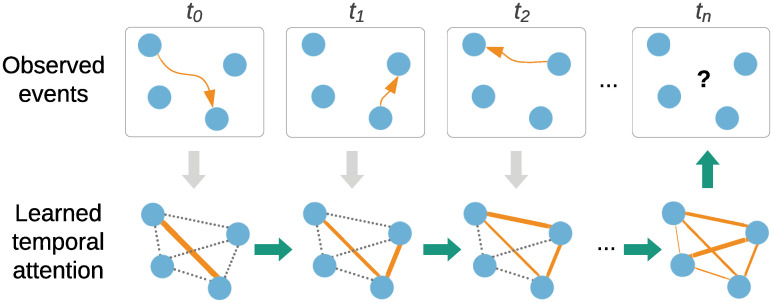

A recursive update of node embeddingsz and temporal attention S.

An event between nodes u = 1 and v = 3 creates a temporary edge that allows the information to flow (in pink arrows) from neighbors of node u to node v. Orange edges denote updated attention values. Normalization of attention values to sum to one can affect attention of neighbors of node v. Dotted edges denote absent edges (or edges with small values).

Attention-based term

We highlight the importance of the first term, which is affected by temporal attention St−1 between node u and all its one-hop neighbors

The other two terms in (2) include a recurrent update of node v’s features and a function of the waiting time between the current event and the previous event involving node v.

2.2 Attention update

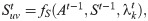

In DyRep, attention St between nodes u, v depends on three terms: 1) long term associations At−1; 2) attention St−1 at the previous time step; and 3) conditional intensity

Conditional intensity λ

Conditional intensity

In DyRep, λ is mainly used to compute the loss and optimize the model, so that its value between nodes involved in the events should be high, whereas between other nodes it should be low.

But λ also affects attention values using a hard-coded algorithm, which makes several assumptions (see Algorithm 1 in [14]). In particular, in the case of a communication event (k = 1) between nodes u and v, attention St is only updated if an association exists between them (

In this paper, we extend the DyRep model in two ways. First, we examine the benefits of learned attention instead of the DyRep’s algorithm in (4) by using a variational autoencoder, more specifically its encoder part, from NRI [15]. This permits learning of a sparse representation of the interactions between nodes instead of using a hard-coded function fS and known adjacency matrix A (Section 3.1). Second, both the original DyRep work [14] and [15] use concatenation to make predictions for a pair of nodes (see (5) above and (6) in [15]), which only captures relatively simple relationships. We are interested in the effect of allowing a more expressive relationship to drive graph dynamics, and specifically to drive temporal attention (Sections 3.1 and 3.2). We demonstrate the utility of our model by applying it to the task of dynamic link prediction on two graph datasets (Section 4).

3 Latent dynamic graph model

In [15], Neural Relational Inference (NRI) was proposed, showing that in some settings, models that use a learned representation, in which the human-specified graph structure is discarded, can outperform models that use the explicit graph. A learned sparse graph representation can retain the most salient features, i.e., only those connections that are necessary for the downstream task, whereas the human-specified graph may have redundant connections.

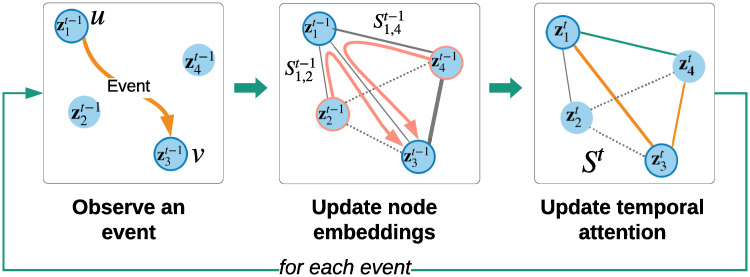

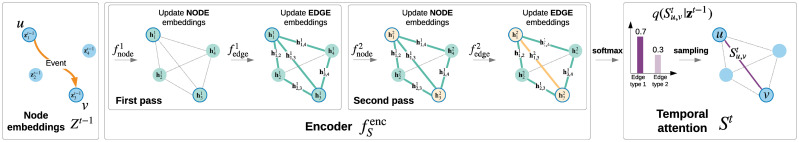

While NRI learns the latent graph structure from observing node movement, we learn the graph by observing how nodes communicate. In this spirit, we repurpose the encoder of NRI, combining it with DyRep, which gives rise to our latent dynamic graph (LDG) model, described below in more detail (Fig 3). We also summarize our notation in Table 1.

![Overview of our approach relative to DyRep [14], in the context of dynamic link prediction.](/dataresources/secured/content-1765990742894-830b3b70-fabb-4acc-9263-e6ad6fc36326/assets/pone.0247936.g003.jpg)

Overview of our approach relative to DyRep [14], in the context of dynamic link prediction.

During training, events ot are observed, affecting node embeddings Z. In contrast to DyRep, which updates attention weights St in a predefined hard-coded way based on associative connections At, such as CloseFriend, we assume that graph At is unknown and our latent dynamic graph (LDG) model based on NRI [15] infers St by observing how nodes communicate. We show that learned St has a close relationship to certain associative connections. Best viewed in colour.

| Notation | Dimensionality | Definition |

|---|---|---|

| τ | 1 | Point in continuous time |

| t | 1 | Time index |

| tv−1 | 1 | Time index of the previous event involving node v |

| τtv−1 | 1 | Time point of the previous event involving node v |

| i, j | 1 | Index of an arbitrary node in the graph |

| v, u | 1 | Index of a node involved in the event |

| At | N × N | Adjacency matrix at time t |

|  | One-hop neighbourhood of node u |

| Zt | N × d | Node embeddings at time t |

| d | Embedding of node v at time t |

| d | Attention-based embedding of node u’s neighbors at time t − 1 |

| d | Learned hidden representation of node j after the first pass |

| d | Learned hidden representation of an edge between nodes i and j after the first pass |

| d | Learned hidden representation of node j after the second pass |

| r | Learned hidden representation of an edge between nodes u and v involved in the event after the second pass (Fig 4) |

| St | N × N × r | Attention at time t with r multirelational edges |

| N × r | Attention between node u and its one-hop neighbors at time t |

| r | Attention between nodes u and v at time t for all edge types r |

| 1 | Conditional intensity of edges of type k at time t between nodes u and v |

| ψk | 1 | Trainable rate at which edges of type k occur |

| ωk | 2d | Trainable compatibility of nodes u and v at time t |

| Ωk | d × d | Trainable bilinear interaction matrix between nodes u and v at time t |

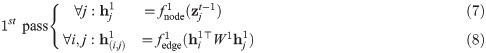

3.1 Bilinear encoder

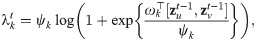

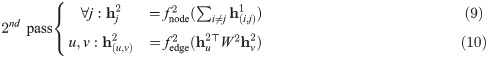

DyRep’s encoder (4) requires a graph structure represented as an adjacency matrix A. We propose to replace this with a sequence of learnable functions

(7):

(8):

(9):

(10):

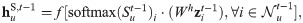

Inferring an edge

Even though only nodes u and v have been involved in the event, to infer the edge

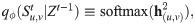

The softmax function is applied to the edge embedding

Replacing (4) with (5) means that it is not necessary to maintain an explicit representation of the human-specified graph in the form of an adjacency matrix. The evolving graph structure is implicitly captured by St. While St represents temporal attention weights between nodes, it can be thought of as a graph evolving over time, therefore we call our model a Latent Dynamic Graph (LDG). This graph, as we show in our experiments, can have a particular semantic interpretation.

The two passes in (7)–(10) are important to ensure that attention

Unlike DyRep, the proposed encoder generates multiple edges between nodes, i.e.,

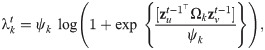

3.2 Bilinear intensity function

Earlier in (5) we introduced conditional intensity

Why bilinear? Bilinear layers have proven to be advantageous in settings like Visual Question Answering (e.g., [41]), where multi-modal embeddings interact. It takes a form of xWy, so that each weight Wij is associated with a pair of features xi,yj, where i, j ∈ d and d is the dimensionality of features x and y. That is, the result will include products xiWijyj. In the concatenation case, the result will only include xiWi, yjWj, i.e. each weight is associated with a feature either only from x or from y, not both. As a result, bilinear layers have some useful properties, such as separability [42]. In our case, they permit a richer interaction between embeddings of different nodes. So, we replace NRI’s and DyRep’s concatenation layers with bilinear ones in (8), (10) and (12).

3.3 Training the LDG model

Given a minibatch with a sequence of

The first two terms,

Following [15], we consider uniform and sparse priors. In the uniform case, θi = 1/r, i = 1, …, r, such that the KL term becomes the sum of entropies H over the events

In case of sparse pθ(S) and, for instance, r = 2 edge types, we set pθ(S) = [0.90, 0.05, 0.05], meaning that we generate r + 1 edges, but do not use the non-edge type corresponding to high probability, and leave only r sparse edges. In this case, the KL term becomes:

4 Experiments

4.1 Datasets

We evaluate our model on the link (event) prediction task using two datasets (Table 2). Source code is available at https://github.com/uoguelph-mlrg/LDG.

| Dataset | Social Evolution | Github |

|---|---|---|

| # nodes | 83 | 284 |

| # initial associations | 575 | 149 |

| # final associations | 708 | 710 |

| # train comm events | 43,469 | 10,604 |

| # train assoc events | 365 | 1,040 |

| # test comm events | 10,462 | 8,667 |

| # test assoc events | 73 | 415 |

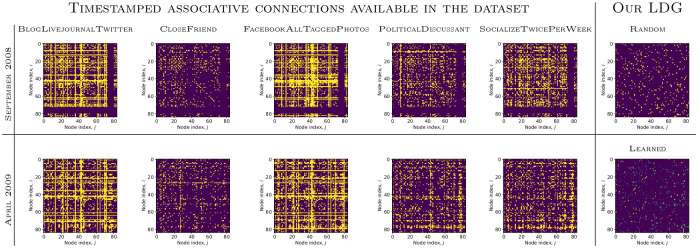

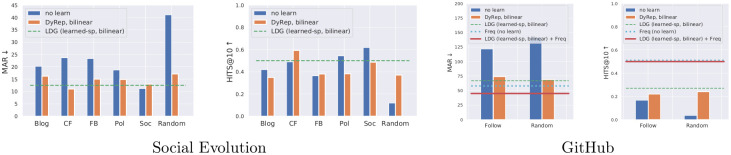

Social Evolution [16]. This dataset consists of over one million events ot = (u, v, τ, k). We preprocess this dataset in a way similar to [14]. A communication event (k = 1) is represented by the sending of an SMS message, or a Proximity or Call event from node u to node v; an association event (k = 0) is a CloseFriend record between the nodes. We also experiment with other associative connections (Fig 5). As Proximity events are noisy, we filter them by the probability that the event occurred, which is available in the dataset annotations. The filtered dataset on which we report results includes 83 nodes with around 43k training and 10k test communication events. As in [14], we use events from September 2008 to April 2009 for training, and from May to June 2009 for testing.

Predicting links by leveraging training data statistics without any learning (“no learn”) turned out to be a strong baseline.

We compare it to learned models with different human-specified graphs used for associations. Here, for the Social Evolution dataset the abbreviations are following: Blog: BlogLivejournalTwitter, Cf: CloseFriend, Fb: FacebookAllTaggedPhotos, Pol: PoliticalDiscussant, Soc: SocializeTwicePerWeek, Random: Random association graph.

GitHub. This dataset is provided by GitHub Archive and compared to Social Evolution is a very large network with sparse association and communication events. To learn our model we expect frequent interactions between nodes, therefore we extract a dense subnetwork, where each user initiated at least 200 communication and 7 association events during the year of 2013. Similarly to [14], we consider Follow events in 2011-2012 as initial associations, but to allow more dense communications we consider more event types in addition to Watch and Star: Fork, Push, Issues, IssueComment, PullRequest, Commit. This results in a dataset of 284 nodes and around 10k training events (from December to August 2013) and 8k test events (from September to December 2013).

We evaluate models only on communication events, since the number of association events is small, but we use both for training. At test time, given tuple (u, τ, k), we compute the conditional density of u with all other nodes and rank them [14]. We report Mean Average Ranking (MAR) and HIST@10: the proportion of times that a test tuple appears in the top 10.

4.2 Implementation details

We train models with the Adam optimizer [44], with a learning rate of 2×10−4, minibatch size

An adjacency matrix of the latent dynamic graph (LDG), St, for one of the sparse edge types generated at the end of training, compared to randomly initialized St and associative connections available in the Social Evolution dataset at the beginning (top row) and end of training (bottom row).

A quantitative comparison is presented in Table 3.

| Model | Learned attention | MAR ↓ | HITS@10 ↑ | |||

|---|---|---|---|---|---|---|

| Concat | Bilinear | Concat | Bilinear | |||

| Social Evolution | GCN (CloseFriend) | ✘ | 27.1 ± 0.6 | 29.4 ± 2.6 | 0.42 ± 0.01 | 0.33 ± 0.05 |

| GAT (CloseFriend) | ✘ | 48.2 ± 5.0 | 52.4 ± 2.6 | 0.09 ± 0.04 | 0.07 ± 0.02 | |

| DyRep (CloseFriend) | ✘ | 16.0 ± 3.0 | 11.0 ± 1.2 | 0.47 ± 0.05 | 0.59 ± 0.06 | |

| DyRep (Fb) | ✘ | 20.7 ± 5.8 | 15.0 ± 2.0 | 0.29 ± 0.21 | 0.38 ± 0.14 | |

| LDG (random, uniform) | ✘ | 19.5 ± 4.9 | 16.0 ± 3.3 | 0.28 ± 0.19 | 0.35 ± 0.17 | |

| LDG (random, sparse) | ✘ | 21.2 ± 4.4 | 17.1 ± 2.6 | 0.26 ± 0.10 | 0.37 ± 0.08 | |

| LDG (learned, uniform) | ✔ | 22.6 ± 6.1 | 16.9 ± 1.1 | 0.18 ± 0.09 | 0.37 ± 0.06 | |

| LDG (learned, sparse) | ✔ | 17.0 ± 5.8 | 12.7 ± 0.9 | 0.37 ± 0.14 | 0.50 ± 0.06 | |

| GitHub | GCN (Follow) | ✘ | 53.5 ± 1.9 | 53.8 ± 0.3 | 0.36 ± 0.00 | 0.36 ± 0.00 |

| GAT (Follow) | ✘ | 107.1 ± 3.6 | 111.5 ± 3.9 | 0.21 ± 0.02 | 0.20 ± 0.01 | |

| DyRep (Follow) | ✘ | 100.3 ± 10 | 73.8 ± 5.0 | 0.187 ± 0.01 | 0.221± 0.02 | |

| LDG (random, uniform) | ✘ | 90.3 ± 17.1 | 68.7 ± 3.3 | 0.21 ± 0.02 | 0.24 ± 0.02 | |

| LDG (random, sparse) | ✘ | 95.4 ± 14.9 | 71.6 ± 3.9 | 0.20 ± 0.01 | 0.23 ± 0.01 | |

| LDG (learned, uniform) | ✔ | 92.1 ± 15.1 | 66.6 ± 3.6 | 0.20 ± 0.02 | 0.27 ± 0.03 | |

| LDG (learned, sparse) | ✔ | 90.9 ± 16.8 | 67.3 ± 3.5 | 0.21 ± 0.03 | 0.28 ± 0.03 | |

| LDG (learned, sparse) + Freq | ✔ | 47.8 ± 5.7 | 43.0 ± 2.8 | 0.48 ± 0.03 | 0.50 ± 0.02 | |

We also train two simple baselines based on GCN [8] and GAT [45]. Both of them have three graph convolutional layers with 32 hidden units in each layer and the GAT has 4 attention heads. To train these baselines, in the first training iteration we propagate the initial node embeddings through the GCN or GAT using the association graph at the current timestamp obtaining node embeddings z. We then use z to compute intensity λ for all events in the minibatch according to (5) or (12) and afterwards the baseline DyRep loss (the first two terms in (13)). For the following training iteration, the input to the GCN or GAT are the output embeddings from the previous training iteration, which allows the embeddings to evolve during training. The main limitation of theses baselines compared to DyRep or LDG is that the communication events are only used to compute λ and do not define the node propagation scheme neither during training or testing.

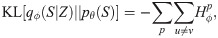

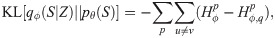

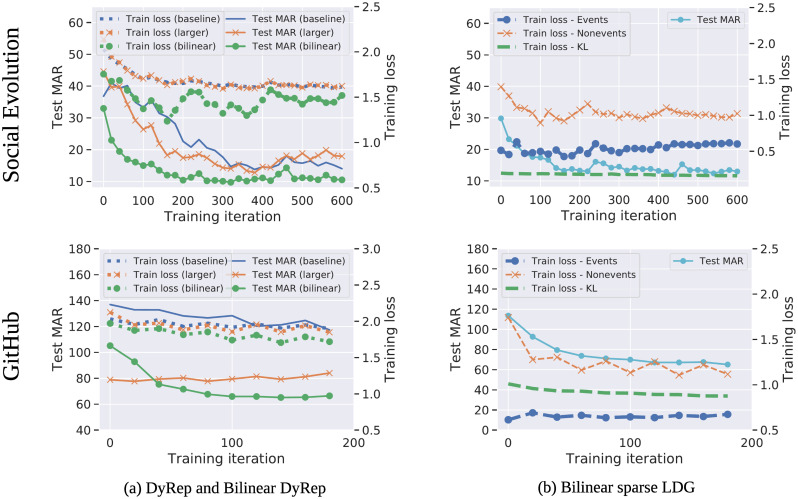

4.3 Quantitative evaluation

On Social Evolution, we report results of the baseline DyRep with different human-specified associations and compare them to the models with learned temporal attention (LDG) (Table 3, Fig 5). Models with learned attention perform better than most of the human-specified associations, further confirming the finding from [15] that the underlying graph can be suboptimal. However, these models are still slightly worse compared to CloseFriend, which means that this graph is a strong prior. We also show that bilinear DyRep and LDG models consistently improve results and exhibit better training behaviour, including when compared to a larger linear model with an equivalent number of parameters (Fig 7).

(a) Training curves and test MAR for baseline DyRep, bilinear DyRep, and larger baseline DyRep, with a number of trainable parameters equal to the bilinear DyRep. (b) Training curves and test MAR for the bilinear LDG with sparse prior, showing that all three components of the loss (see (13)) generally decrease or plateau, reducing test MAR.

Both DyRep and LDG also outperform simple GCN and GAT baselines, which confirms the importance of modeling the dynamics of both association and communication events. Among these baselines, GAT shows significantly worse results. This can be explained by the difficulty of training attention heads in the GAT. These baselines also often diverge when combined with the bilinear intensity function and so we had to apply additional squashing of embeddings (see our implementation for full details). Therefore, in these cases, bilinear models underperform.

GitHub is a more challenging dataset with more nodes and complex interactions, so that the baseline DyRep model has a very large MAR of 100 (random predictions have the MAR of around 140). On this dataset, bilinear DyRep and LDG models provide a much larger gain, while models with learned attention further improve results. The baseline DyRep model using Follow associations is performing poorly, even compared to our model with randomly initialized graphs. These results imply that we should either carefully design graphs or learn them jointly with the rest of the model as we do in our work.

Surprisingly, on this dataset, the GCN baseline outperform DyRep and LDG. This can be due to a certain artifact in the dataset or that there exists a simple pattern of events, and more complex models cannot capture them. Note that while GCN is better than LDG in this case, the GCN model leverages the ground truth association graph, which is unavailable to the LDG.

We compare our implementation of DyRep to [14] and other static and dynamic models (Table 4). Our results are worse than those reported in [14] due to the possible implementation differences (no source code of [14] is available) and different preprocessing steps of the Social Evolution dataset. However, we highlight that our results are still significantly better than other baselines.

We also perform additional ablation studies of our LDG (Table 5). In particular, we report the results of using the bilinear transformation only for the encoder (NRI) components or only for computing the conditional intensity. The results suggest a more important role of the latter. While using the bilinear form only for the NRI component degrades the performance, combining it with the bilinear intensity function leads to the best results. The results of our LDG without or with the bilinear transformation on the GitHub dataset are presented in Table 3.

4.4 Interpretability of learned attention

While the models with a uniform prior have better test performance than those with a sparse prior in some cases, sparse attention is typically more interpretable. This is because the model is forced to infer only a few edges, which must be strong since that subset defines how node features propagate (Table 6). In addition, relationships between people in the dataset tend to be sparse. To estimate agreement of our learned temporal attention matrix with the underlying association connections, we take the matrix St generated after the last event in the training set and compute the area under the ROC curve (AUC) between St and each of the associative connections present in the dataset.

| Associative Connection | Initial | Final | ||

|---|---|---|---|---|

| Uniform | Sparse | Uniform | Sparse | |

| BlogLivejournalTwitter | 53 | 57 | 57 | 69 |

| CloseFriend | 65 | 69 | 76 | 84 |

| FacebookAllTaggedPhotos | 55 | 57 | 58 | 62 |

| PoliticalDiscussant | 59 | 63 | 61 | 68 |

| SocializeTwicePerWeek | 60 | 66 | 63 | 70 |

| Github Follow | 79 | 80 | 85 | 86 |

These associations evolve over time, so we consider initial and final associations corresponding to the first and last training events. AUC is used as opposed to other metrics, such as accuracy, to take into account sparsity of true positive edges, as accuracy would give overoptimistic estimates. We observe that LDG learns a graph most similar to CloseFriend. This is an interesting phenomenon, given that we only observe how nodes communicate through many events between non-friends. Thus, the learned temporal attention matrix is capturing information related to the associative connections.

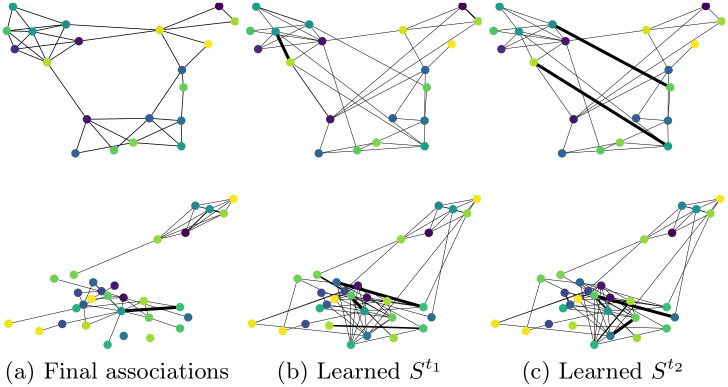

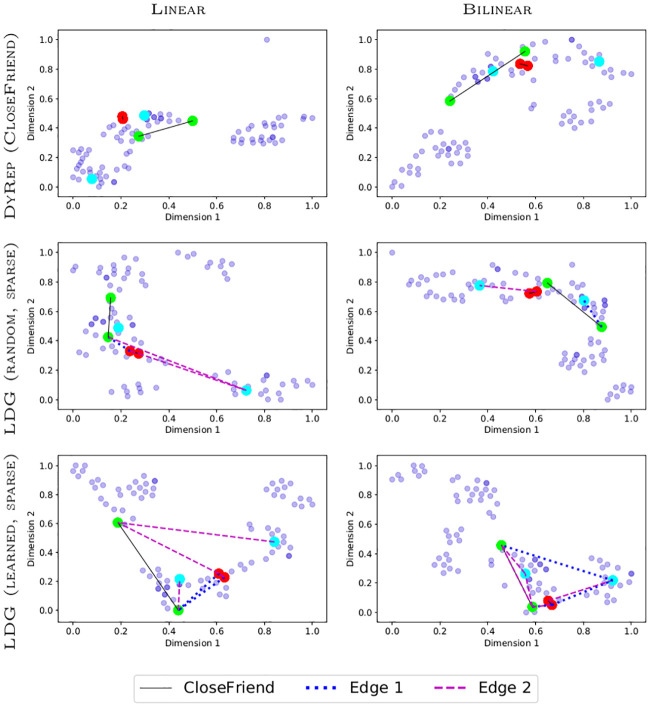

We also visualize temporal attention sliced at different time steps and can observe that the model generally learns structure similar to human-specified associations (Fig 8). However, attention can evolve much faster than associations, which makes it hard to analyze in detail. Node embeddings of bilinear models tend to form more distinct clusters, with frequently communicating nodes generally residing closer to each other after training (Fig 9). We notice bilinear models tend to group nodes in more distinct clusters. Also, using the random edges approach clusters nodes well and embeds frequently communicating nodes close together, because the embeddings are mainly affected by the dynamics of communication events.

Final associations of the subgraphs for the Social Evolution (top row) and GitHub (bottom row) datasets compared to attention values at different time steps selected randomly.

tSNE node embeddings after training (coordinates are scaled for visualization) on the Social Evolution dataset.

Lines denote associative or sampled edges. Darker points denote overlapping nodes. Red, green, and cyan nodes correspond to the three most frequently communicating pairs of nodes, respectively.

4.5 Leveraging the dataset bias

While there can be complex interactions between nodes, some nodes tend to communicate only with certain nodes, which can create a strong bias. To understand this, we report results on the test set, which were obtained simply by computing statistics from the training set (Fig 5).

For example, to predict a link for node u at time τ, we randomly sample node v from those associated with u. In the Random case, we sample node v from all nodes, except for u. This way we achieve high performance in some cases, e.g., MAR = 11 by exploiting SocializeTwicePerWeek, which is equivalent to learned DyRep. This result aligns with our intuition that people who socialize with each other tend to communicate more, whereas such associations as friends tend to be stronger, longer term relationships that do not necessary involve frequent communications.

Another bias present in the datasets is the frequency bias, existing in many domains, e.g. visual relationship detection [48]. In this case, to predict a link for node u at time τ we can predict the node with which it had most of communications in the training set. On GitHub this creates a very strong bias with performance of MAR = 58. We combine this bias with our LDG model by averaging model predictions with the frequency distribution and achieve our best performance of MAR = 45. Note that this bias should be used carefully as it may not generalize to another test set. A good example is the Social Evolution dataset, where communications between just 3-4 nodes correspond to more than 90% of all events, so that the rank of 1-2 can be easily achieved. However, such a model would ignore potentially changing dynamics of events at test time. Therefore, in this case the frequency bias is exploiting the limitation of the dataset and the result would be overoptimistic. In contrast the DyRep model and our LDG model allow node embeddings to evolve over time based on current dynamics of events, so that it can adapt to such changes.

5 Conclusion

We introduced a novel model extending DyRep and NRI for dynamic link prediction. We found that, in many cases, attention learned using our model can be advantageous compared to attention computed based on the human-specified graph, which can be suboptimal and expensive to produce. Furthermore, we showed that bilinear layers can capture essential pairwise interactions outperforming a more common concatenation-based layer in all evaluated cases.

Acknowledgements

The authors thank Elahe Ghalebi and Brittany Reiche for their helpful comments.

References

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

Learning temporal attention in dynamic graphs with bilinear interactions

Learning temporal attention in dynamic graphs with bilinear interactions