Competing Interests: The authors have declared that no competing interests exist.

- Altmetric

The restoration of the Poisson noisy images is an essential task in many imaging applications due to the uncertainty of the number of discrete particles incident on the image sensor. In this paper, we consider utilizing a hybrid regularizer for Poisson noisy image restoration. The proposed regularizer, which combines the overlapping group sparse (OGS) total variation with the high-order nonconvex total variation, can alleviate the staircase artifacts while preserving the original sharp edges. We use the framework of the alternating direction method of multipliers to design an efficient minimization algorithm for the proposed model. Since the objective function is the sum of the non-quadratic log-likelihood and nonconvex nondifferentiable regularizer, we propose to solve the intractable subproblems by the majorization-minimization (MM) method and the iteratively reweighted least squares (IRLS) algorithm, respectively. Numerical experiments show the efficiency of the proposed method for Poissonian image restoration including denoising and deblurring.

1 Introduction

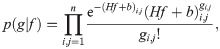

In many real applications, during the image recording, the measurement of light inevitably leads to the uncertainty of striking particles on the image sensor. In other words, the finite number of electrons or photons carrying energy in an image sensor may cause statistical fluctuations, which are usually modeled as the Poisson distribution [1–3]. As another degradation factor, image formation may involve undesirable blurrings such as motion or out-of-focus. By rewriting the concerned images into column-major vectorized form, we can regard the observed image as a realization of Poisson random vector with expected value Hf + b, where H is a n2 × n2 convolution matrix corresponding to the point spreading function (PSF) which models blur effects,

Poissonian image restoration calls for applying an inverse procedure to approximate f from an observation g degraded by blur and Poisson noise. A general option to tackle this problem is to use the maximum a posteriori (MAP) estimation from Bayesian perspective. Taking the Poisson statistics of noise into account, we can write the conditional distribution of the observed data g as

Since the efficiency of the restoration model hinges on the choice of image regularizer, numerous authors have proposed different regularization methods. Tikhonov regularization of form

Another classical method, the total variation (TV) regularizer ϕ(f) = ‖f‖TV (see Section 2), has been widely accepted since the noisy signals have larger TV than the original signal [18]. Bardsley et al. [19] proved that minimizing the Poisson likelihood function in conjunction with TV regularization is well-posed, and they also verified its convergence by a nonnegatively constrained and projected quasi-Newton minimization algorithm. Landi et al. [20] also adopted the TV regularizer for denoising the medical images collected by the photon-counting devices and extended the PNCG method introduced in [2]. Tao et al. [21] appended a non-negativity constraint and used the half-quadratic splitting method to solve the related minimization problem. In addition to the methods mentioned above, other authors employed the effective optimization techniques for solving the TV regularized Poisson deblurring model, including the alternating extra-gradient method [22], the primal-dual method [23, 24] and the alternating direction method of multipliers (ADMM) [25, 26]. It is well-known that the TV regularization methods could preserve fine details such as sharp edges, but they often exhibit false jump discontinuities causing spurious staircase artifacts in smooth regions of the restored images. This may be due to the fact that the TV regularization tends to transform the smooth regions of the solution into piecewise constant ones while solving the minimization problem.

One possible remedy for this oversharpening behavior is to introduce high-order TV, which could penalize jumps more. Zhou et al. [27] selected the second-order TV ϕ(f) = ‖f‖HTV (see Section 2) as a regularizer, and solved by using the alternating minimization scheme. Their numerical experiments indicated the advantage of the second-order TV in avoiding the staircase artifacts, compared with the classical TV regularization [28]. But the high-order TV usually transforms the smooth signal into over-smoothing [29], so the hybrid regularizations combining the high-order TV with other regularizers were also considered. Among the hybrid models, Zhang et al. [30] obtained better results than the model with a strongly convex term

Considering that the tight framelet framework can facilitate the sparse representation of images [35], Shi et al. [36] combined the framelet regularization with non-local TV and non-negativity constraint in the non-blind stage of blind Poisson deblurring. Zhang et al. [37] proposed the nonconvex and noncontinuous model with ℓ0 norm of the tight wavelet representation as a regularizer. Fang and Yan [38] combined the ℓ1 norm of framelet coefficients with TV for Poissonian image deconvolution to reduce artifacts yielded by only using TV regularization. In addition to working with transform-domain sparsity mentioned above, the overlapping group sparsity, which describes the natural tendency of large values to arise near other large values rather than in isolation, has been widely concerned in the field of image restoration, due to its remarkable ability to exploit the structural information of the natural image gradient [4, 39–41]. Especially, for the Poisson noisy restoration, Lv et al. [4] focused on the regularization by the total variation with overlapping group sparsity (TVOGS) and they showed that the solution obtained by the ADMM framework is superior to the first-order TV regularized methods [25, 42] and the high-order regularized methods [27].

To sum up, the high-order TV or the overlapping group sparse prior can be the most feasible option to alleviate the staircase artifacts. Nonetheless, the high-order TV, due to the over-penalizing tendency of the ℓ1 norm [43], often causes over-smoothing at the edges while the overlapping group sparsity tends to smoothen out blockiness in the restored images more globally. Adam and Paramesran, motivated by the work of Chen et al. [44], proposed a hybrid regularization model that combined the overlapping group sparse total variation with the high-order nonconvex TV to denoise images corrupted by Gaussian noise [40]. Recently, they extended it to non-blind deblurring under Gaussian noise [45]. In this paper, we present an extension of Adam’s regularizer to the problem of restoring the Poisson noisy images. This regularizer takes the advantages of both the high-order nonconvex regularizer and overlapping group sparsity regularizer, i.e., it can simultaneously facilitate the pixel-level and structured sparsities of the natural image in the gradient domain. Therefore, we expect that it can not only effectively reduce staircase artifacts but also preserve well sharp edges in the restored image. However, its optimization is more challenging than Gaussian deblurring due to the ill-conditioned non-quadratic data fidelity term. We employ the alternating direction method of multipliers to solve the derived minimization problem. In particular, during the ADMM iterations, we solve two intractable subproblems: one is from overlapping group sparse prior and solved by majorization-minimization (MM) method with well-known quadratic majorizer; another is from the nonconvex ℓp(0 < p < 1) quasi norm, which is solved by the iteratively reweighted least squares (IRLS) algorithm with the motivation that the IRLS is guaranteed to converge to its local minima provided better theoretical and practical abilities than the iteratively reweighted ℓ1 (IRL1) algorithm [52–55].

The rest of this paper is organized as follows. In Section 2, we introduce some notations that will be used to formulate our proposed method. We also briefly review the essential concepts and tools including the overlapping group sparsity prior, the ADMM algorithm, the MM method, and the IRLS algorithm. Section 3 establishes a novel Poissonian image deblurring model which comprises the generalized Kullback Leibler (KL) divergence as data fidelity term, and a combined first-order and second-order total variation priors with overlapping group sparsity as a regularization term. Sequentially, we derive an effective algorithm for minimizing the non-convex and non-smooth objective function under the ADMM optimization framework. Section 4 demonstrates the superiority of our method via numerical experiments, followed by analyzing the parameter setting and convergence behavior. We finally conclude this paper in Section 5.

2 Preliminaries

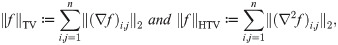

2.1 Notations

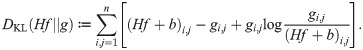

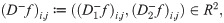

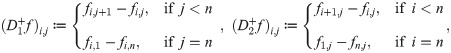

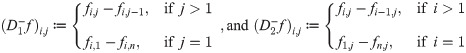

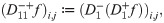

It is necessary to introduce some notations throughout this paper. Assuming that all images under consideration are gray-scale images of size n × n, we lexicographically stack the columns of an image matrix into a vector form. For example, the (i, j)th pixel of image f becomes the ((j − 1)n + i)th entry of the vector, just written as fi,j. Under the periodic boundary conditions for image f, we introduce the discrete forward difference operator

Second-order difference operators can be recursively defined by using the first-order difference operators such as

2.2 TVOGS and MM algorithm

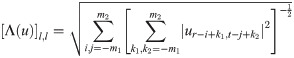

To describe the structural sparsity of image gradient, we define a pixel-group

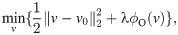

Next, we review a minimization problem of the form

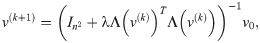

To solve the above sophisticated OGS model, the majorization-minimization approach is used with

Algorithm 1 MM algorithm for minimizing Eq (12)

input λ > 0, K, Nin(inner iterations)

initialize v(0) = v0, k = 0.

while k < Nin do ▹ MM inner loop

1:

2:

3: k = k + 1;

end while

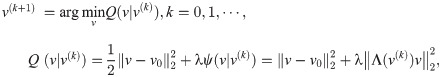

2.3 Alternating direction method of multipliers

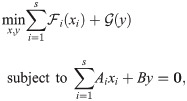

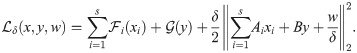

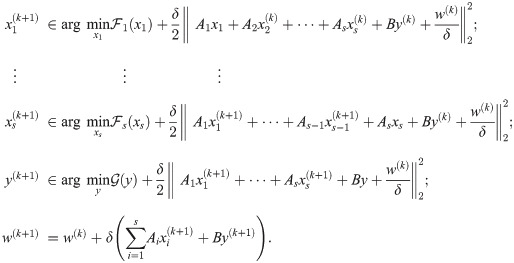

The standard form of the ADMM [47] is designed to tackle the distributed convex optimization problem with linear equality constraints, and many variants have been developed [48–50]. Recently, Wang et al. [51] extended it to the problems involving the nonconvex and nonsmooth muti-blocks objective, as follows:

In general,

The ADMM algorithm proceeds to alternatively update each variable (x(k+1), y(k+1)) until it reaches a stationary point of Eq (17) or meets a stopping criterion. Then, we have the following iterative scheme:

The following lemma establishes the convergence result of ADMM with the nonconvex and nonsmooth objective [51].

Lemma 1. Let

S1 (coercivity) Let

S2 (feasibility) Im(A) ⊆ Im(B), where Im(⋅) is defined as the image of a matrix;

S3 (Lipschitz sub-minimization paths)

(a) For any fixed x, there exists a Lipschitz continuous map

(b) For i = 1, ⋯, s and fixed x−i

and y, there exists a Lipschitz continuous map

S4 (objective-

S5 (objective-

2.4 Iteratively reweighted least squares algorithm

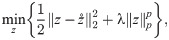

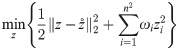

IRLS algorithm [52, 53] solves the following nonconvex ℓp norm sparsity problem:

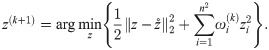

We summarize the IRLS method in Algorithm 2.

Algorithm 2 IRLS algorithm for minimizing Eq (19)

input

initialize

while not converged do

1: update weight: ω(k) = λp((z(k))2 + ϵ)p/2−1;

2: update z:

3: k = k + 1;

end while

3 Proposed model and optimization method

In this section, we first present the proposed model and then solve it in the framework of nonconvex and nonsmooth ADMM.

3.1 Model formulation

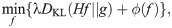

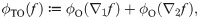

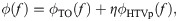

Considering the advantages of the overlapping group sparse total variation and the high-order nonconvex total variation, we investigate the Poisson noisy image restoration problem with the following regularizer:

In the model above, λ and η are used to control the data fidelity term and the nonconvex second-order regularizer, respectively. The data fidelity term is an ill-conditioned non-quadratic log-likelihood, while the regularizer is nonconvex and nonsmooth due to the presence of the ℓp(0 < p < 1) quasi norm. This may increase the difficulty in minimizing the model. To circumvent this difficulty, we propose an efficient algorithm based on the framework of nonconvex and nonsmooth ADMM in the following subsection.

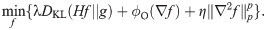

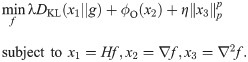

3.2 Optimization

In order to apply the variable splitting scheme, we introduce three auxiliary variables to transform the original complicated minimization problem into the following equivalent linear constraint minimization problem:

We establish a corresponding relation between the variables to conform with the ADMM framework Eq (16), as shown below:

(1)

(2)

(3)

where 01, 02, 03 denote zero matrix of size 2n2 × 4n2, 4n2 × n2, n2 × 2n2, respectively, and

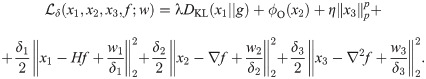

Then, the ADMM for solving Eq (24) starts its iteration to update every variable by minimizing Eq (25) alternatively. This iterative scheme could be split into several subproblems.

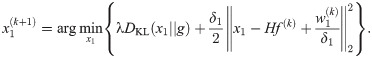

3.2.1 x1-subproblem

According to the ADMM scheme, pulling out the terms with x1 from Eq (25) yields

It is apparent that each component of x1 can be solved separately. Through the basic mathematical manipulations in the case of b = 0, we obtain

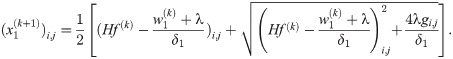

3.2.2 x2-subproblem

Minimizing

It is easy to see that this minimization problem matches the framework of Eq (12), thus x2 can be solved by Algorithm 1.

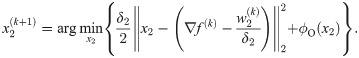

3.2.3 x3-subproblem

By omitting terms irrespective of x3, we have

Since this problem involves the nonconvex ℓp norm, minimizing x3 is not straightforward. But, after some simple modifications, we can apply the IRLS algorithm according to Algorithm 2.

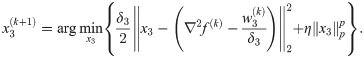

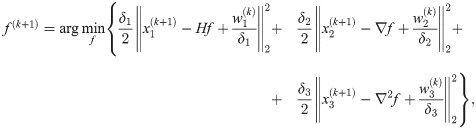

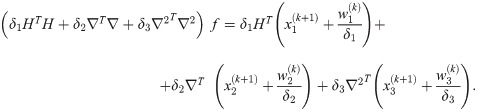

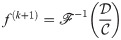

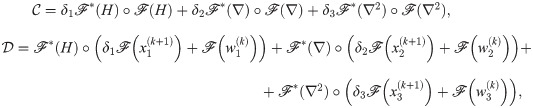

3.2.4 f-subproblem

Considering Eq (25) with respect to f, we have

We assume that both the image and convolution matrix are periodically extended, thus well-known fast Fourier transform can be adopted to efficiently solve Eq (31) [4, 56, 57], which results in an optimal solution

3.2.5 w-subproblem

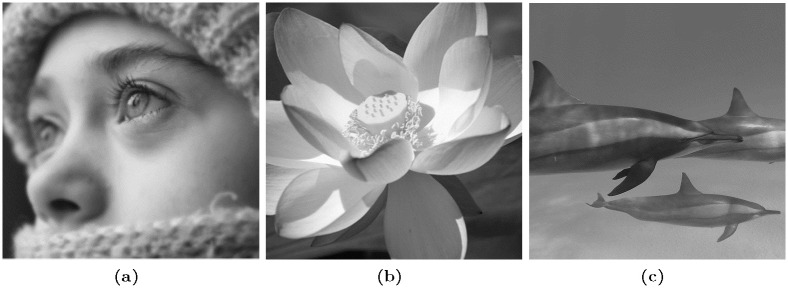

Finally, the updating scheme of the Lagrangian multipliers can be written as

In summary, the key steps of the ADMM algorithm for solving the suggested model are described in Algorithm 3.

Algorithm 3 The ADMM algorithm for solving Eq (23)

input p, λ, η, δ, K, Nin.

initialize f(0), k = 0, w(0) = 0.

while a stopping criteria unsatisfied do ▹ outer loop

1: Update

2: Update

3: Update

4: Update f(k+1) according to Eq (32).

5: Update

6: k = k + 1.

end while

We discuss the convergence of the proposed algorithm. Inspired by [51], by verifying the assumptions in Lemma 1, we can obtain the following convergence result for Algorithm 3.

Theorem 1. The sequence of (x1, x2, x3, f) generated by Algorithm 3 with any sufficiently large δ and any start point will converge to a stationary point of the augmented Lagrangian

Proof. Since our model fits the framework of Eq (16), it remains only to check S1–S5 in Lemma 1. It is easy to verify that DKL(x1||g) is coercive [58] as well as ϕO(x2) and

The convergence of Algorithm 3 can also be verified experimentally.

4 Numerical experiments

In this section, we present the numerical experiments to illustrate the effectiveness of the proposed method for the Poisson noisy image restoration, compared with the closely related state-of-the-art methods including TVOGS [4], SAHTV [5], SB-FA [38] and FT-ADMM [37]. The TVOGS method introduced the overlapping group sparse TV prior combined with the box-constraint as a regularizer and solved the optimization model under the ADMM framework, but denoising is out of their consideration. In the SAHTV method, Liu et al. [5] combined the first and second-order TV priors, and updated their pixel-wise weighting coefficients with the local information during the consecutive estimation of the latent image. FT-ADMM, whose regularizer is the ℓ0 norm of the wavelet representation of the image, was also proposed to efficiently eliminate the staircase artifacts. Fang and Yan [38] proposed to combine the ℓ1 norm of the framelet representation of the latent image with the TV prior, and solved the resultant model by the split Bregman method. Since they recommended using the SB-FA algorithm without the TV prior through the numerical experiments, we adopt SB-FA as a competitive method rather than SB-FATV with the TV prior.

All experiments in this paper are implemented on Windows 10 64-bit and Matlab R2016b running on a desktop computer with an Intel Core i5-4590 CPU 3.3GHz and 8GB of RAM. We uploaded the code for our algorithm on https://github.com/KSJhon/PoissonDeblur_hybrid.

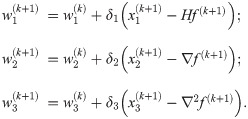

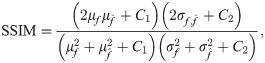

To proceed with the simulation experiments, we intentionally degrade the clean image to construct the corrupted image. More specifically, an original clean image is first scaled to a preset peak value MAXf, which decides the different levels of Poisson noise. After that, the scaled image is convolved with a given PSF to simulate the blurring effect, and further contaminated by the signal-dependent Poisson noise. In the following experiments, we set MAXf to be 100, 200, 300, and 350, in which the lower MAXf indicates the relatively higher noise level. For the deblurring simulations, we consider three types of blur kernels: (1) a 9 × 9 Gaussian kernel with standard deviation 1; (2) a linear motion blur with a motion length 5 and an angle 45° in the counterclockwise direction; (3) a kernel from Levin et al.’s public dataset [59]. All blurring operations are fulfilled by the Matlab built-in function “fspecial”, and the Poisson noise is generated by “poissrnd” without any additional parameter. The test images with various sizes are shown in Fig 1.

Test images used in our experiments.

(a): “beauty” (256 × 256). This image was republished from https://www.flickr.com/photos/90471071@N00/3201337190 under a CC BY license, with permission from Irina Gheorghita, original copyright 2009; (b): “lotus” (500 × 500). This image was republished from https://www.flickr.com/photos/robertlylebolton/7797693474 under a CC BY license, with permission from Robert Lyle Bolton, original copyright 2012; (c): “dolphin” (512 × 512). This image was republished from https://www.flickr.com/photos/grovecrest/6981622176 under a CC BY license, with permission from Simon Lewis, original copyright 2011.

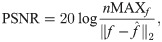

We quantitatively evaluate the performances of the proposed method and the competing methods by means of the peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM), defined as

4.1 Selection of parameters

Since the convergence of the nonconvex ADMM and its suboptimization in Algorithm 3 depends on the values of parameters, they require careful tuning.

For the choice of p, as in the experiments reported in [40], we set it to be 0.1 throughout the experiments, with the consideration of the important aspect of ℓp norm, which says that taking p sufficiently close to 0 favors to preserve sharp edges in the restored image.

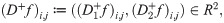

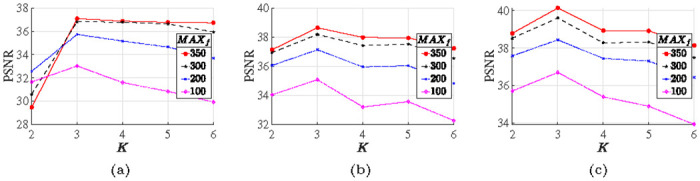

The group size K also plays an important role in balancing the trade-off between the global noise filtering performance and the computational cost. In order to find the optimal K, we conduct a denoising experiment by varying K while keeping other parameters remained, as shown in Fig 2. Obviously, K = 3 is the best choice for all noise levels, so we fix K = 3 in the following experiments. In addition, the number of inner iterations, Nin, also affects the MM algorithm. We straightforwardly fix Nin = 5 as indicated in [4, 29, 39, 40]. To balance the accuracy and speed, we empirically set Nout = 50, ε = 10−3. The other parameters, including the regularization parameters (λ, η) and penalty parameter δ, are manually adjusted to achieve the highest improvement in the PSNR and SSIM values. In the following subsections, we compare the proposed method (named HTVp-OGS) with the state-of-the-art methods for denoising and deblurring images under the Poisson noise. Unless otherwise indicated, all parameters involved in the competitive algorithms are carefully selected near the given default values to give the best performance, respectively.

Evolution of PSNR values of denoised images with MAXf = 350, 300, 200 and 100 along the varying group sizes.

(a): “beauty” image; (b): “lotus” image; (c): “dolphin” image.

4.2 Denoising

Here, we show that our model provides better results in Poisson noise removal through the comparison with the state-of-the-art methods: SB-FA [38], SAHTV [5], and FT-ADMM [37].

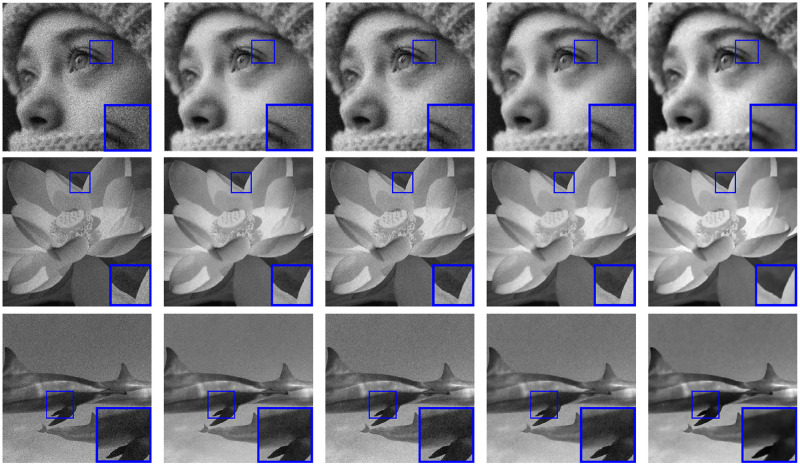

To evaluate the denoising performance, we just set H as an identity matrix, and empirically fix δ = (5 ⋅ 10−3, 3 ⋅ 10−2, 2 ⋅ 10−4)T. We can observe that λ = 3 ⋅ MAXf can bring the satisfactory results of Eq (23). The other regularization parameter η is hand-tuned to get the best denoising performance according to the noise level. The denoising results, in terms of the PSNR and SSIM values, are shown in Table 1. From Table 1, it is obvious that the PSNR and SSIM values of the images denoised by our model are higher than those of the other three methods (SB-FA, SAHTV, FT-ADMM). We display in Fig 3. the denoised versions and the zoom-in regions obtained by our method and other competitive methods from the noisy images with the noise level MAXf = 100.

Denoised images and a zoom-in region from the Poisson noisy images with the noise level MAXf = 100.

first column: Poisson noisy images; second column: denoised images by SB-FA [38]; third column: denoised images by SAHTV [5]; fourth column: denoised images by FT-ADMM [37]; fifth column: denoised images by HTVp-OGS.

| MAXf | clean(f) | noisy(g) | denoised( | |||

|---|---|---|---|---|---|---|

| SB-FA | SAHTV | FT-ADMM | HTVp-OGS | |||

| 350 | beauty | 27.97/0.649 | 34.96/0.929 | 35.41/0.941 | 34.17/0.920 | 37.08/0.955 |

| lotus | 28.12/0.530 | 36.34/0.913 | 37.42/0.936 | 34.96/0.898 | 38.64/0.957 | |

| dolphin | 27.95/0.465 | 38.18/0.926 | 38.41/0.944 | 36.75/0.910 | 40.14/0.966 | |

| 300 | beauty | 27.31/0.620 | 34.29/0.912 | 34.77/0.931 | 33.73/0.906 | 36.82/0.953 |

| lotus | 27.46/0.498 | 35.63/0.890 | 36.73/0.922 | 34.42/0.876 | 38.18/0.955 | |

| dolphin | 27.28/0.431 | 37.04/0.898 | 37.89/0.933 | 35.67/0.884 | 39.61/0.964 | |

| 200 | beauty | 25.55/0.539 | 32.16/0.844 | 32.81/0.880 | 31.85/0.846 | 35.73/0.944 |

| lotus | 25.70/0.412 | 33.23/0.796 | 34.08/0.843 | 32.38/0.786 | 37.12/0.945 | |

| dolphin | 25.52/0.348 | 33.94/0.787 | 35.00/0.855 | 33.06/0.779 | 38.44/0.956 | |

| 100 | beauty | 22.53/0.402 | 27.74/0.645 | 28.06/0.676 | 28.18/0.660 | 33.01/0.917 |

| lotus | 22.68/0.283 | 28.35/0.542 | 28.45/0.562 | 28.49/0.542 | 35.06/0.925 | |

| dolphin | 22.51/0.227 | 28.41/0.500 | 28.66/0.539 | 28.58/0.544 | 36.70/0.939 | |

4.3 Deblurring

In our deblurring experiments, we consider three types of blur kernels. As mentioned above, the first two are artificial to respectively mimic the effect of the out-of-focus blur and motion blur, and the last one is the blur kernel from Levin et al.’s dataset [59]. For the sake of a fair comparison with other methods, we equally set the tolerance level ε = 10−3 and iteration number Nout = 50 in all experiments. As in the denoising experiments, we fix λ = 3 ⋅ MAXf and select the penalty parameter δ as (0.01, 0.1, 0.01)T. The values of δ and λ are kept constant, and the other parameter η is selected by the grid search scheme to obtain high-quality restored images in the following three cases:

Setting 1. Gaussian blur case: We set η = 18, 14, 6, and 2 according to the noise level MAXf = 350, 300, 200, and 100, respectively. The detailed comparison of five methods is provided in Table 2.

Setting2. linear motion blur case: We keep δ and λ remained and empirically set η = 8, 6, 4, 1, and the detailed results are summarized in Table 3.

Setting 3. ground truth blur case: In this case, we set η = 4, 3, 2, 0.2, and the detailed results are summaried in Table 4.

| MAXf | clean(f) | degraded(g) | restored( | ||||

|---|---|---|---|---|---|---|---|

| SB-FA | SAHTV | TVOGS | FT-ADMM | HTVp-OGS | |||

| 350 | beauty | 27.00/0.62 | 32.81/0.92 | 32.45/0.93 | 31.19/0.92 | 32.39/0.93 | 32.85/0.94 |

| lotus | 27.68/0.51 | 35.82/0.92 | 36.31/0.94 | 34.64/0.93 | 34.90/0.93 | 36.66/0.94 | |

| dolphin | 27.55/0.45 | 36.70/0.93 | 36.97/0.94 | 36.67/0.95 | 35.87/0.95 | 37.69/0.95 | |

| 300 | beauty | 26.47/0.59 | 32.58/0.92 | 32.19/0.92 | 31.16/0.92 | 32.33/0.93 | 32.60/0.93 |

| lotus | 27.08/0.47 | 35.43/0.91 | 36.02/0.93 | 34.61/0.93 | 34.84/0.93 | 36.37/0.94 | |

| dolphin | 26.93/0.41 | 36.09/0.92 | 36.64/0.94 | 36.50/0.95 | 35.83/0.94 | 37.37/0.95 | |

| 200 | beauty | 24.95/0.51 | 31.51/0.88 | 31.41/0.90 | 31.02/0.91 | 31.96/0.91 | 31.92/0.92 |

| lotus | 25.44/0.39 | 33.98/0.87 | 34.96/0.91 | 34.36/0.92 | 34.50/0.92 | 35.48/0.93 | |

| dolphin | 25.27/0.33 | 34.49/0.87 | 35.67/0.92 | 35.99/0.94 | 35.66/0.93 | 36.58/0.95 | |

| 100 | beauty | 22.22/0.37 | 28.50/0.75 | 29.78/0.85 | 30.42/0.90 | 30.66/0.87 | 30.57/0.90 |

| lotus | 22.55/0.27 | 29.98/0.70 | 32.44/0.84 | 33.28/0.90 | 32.86/0.85 | 33.87/0.92 | |

| dolphin | 22.39/0.22 | 30.28/0.68 | 33.19/0.85 | 34.53/0.91 | 33.78/0.86 | 35.29/0.94 | |

| MAXf | clean(f) | degraded(g) | restored( | ||||

|---|---|---|---|---|---|---|---|

| SB-FA | SAHTV | TVOGS | FT-ADMM | HTVp-OGS | |||

| 350 | beauty | 26.64/0.61 | 31.34/0.92 | 31.90/0.92 | 29.96/0.90 | 31.76/0.92 | 32.33/0.93 |

| lotus | 27.54/0.50 | 34.22/0.93 | 35.62/0.93 | 33.39/0.92 | 34.56/0.93 | 36.31/0.94 | |

| dolphin | 27.45/0.44 | 36.07/0.94 | 37.06/0.94 | 35.63/0.94 | 35.62/0.95 | 37.68/0.95 | |

| 300 | beauty | 26.13/0.58 | 31.32/0.92 | 31.71/0.92 | 29.98/0.90 | 31.58/0.92 | 32.12/0.93 |

| lotus | 26.94/0.47 | 34.21/0.92 | 35.33/0.93 | 33.39/0.92 | 34.27/0.93 | 36.04/0.94 | |

| dolphin | 26.85/0.41 | 36.01/0.94 | 36.72/0.94 | 35.54/0.94 | 35.49/0.93 | 37.46/0.95 | |

| 200 | beauty | 24.74/0.50 | 31.11/0.91 | 30.97/0.90 | 29.95/0.90 | 31.12/0.90 | 31.56/0.92 |

| lotus | 25.34/0.39 | 34.08/0.92 | 34.57/0.92 | 33.24/0.91 | 33.79/0.91 | 35.32/0.93 | |

| dolphin | 25.23/0.33 | 35.78/0.94 | 35.88/0.93 | 35.19/0.94 | 35.19/0.92 | 36.58/0.95 | |

| 100 | beauty | 22.10/0.37 | 30.39/0.88 | 29.88/0.87 | 29.67/0.89 | 29.82/0.84 | 30.37/0.90 |

| lotus | 22.79/0.34 | 31.48/0.84 | 31.51/0.83 | 31.17/0.84 | 30.77/0.80 | 31.79/0.85 | |

| dolphin | 22.37/0.21 | 34.56/0.91 | 33.81/0.88 | 33.92/0.91 | 33.23/0.84 | 35.34/0.94 | |

| MAXf | clean(f) | degraded(g) | restored( | ||||

|---|---|---|---|---|---|---|---|

| SB-FA | SAHTV | TVOGS | FT-ADMM | HTVp-OGS | |||

| 350 | beauty | 25.44/0.56 | 29.81/0.89 | 30.96/0.90 | 29.22/0.87 | 30.78/0.91 | 31.17/0.91 |

| lotus | 26.49/0.46 | 32.69/0.91 | 34.78/0.92 | 32.70/0.91 | 33.27/0.92 | 34.95/0.93 | |

| dolphin | 26.83/0.42 | 34.58/0.94 | 35.84/0.94 | 34.85/0.94 | 34.38/0.94 | 36.43/0.95 | |

| 300 | beauty | 25.06/0.53 | 29.80/0.89 | 30.74/0.90 | 29.22/0.87 | 30.66/0.90 | 30.97/0.91 |

| lotus | 26.03/0.43 | 32.66/0.91 | 34.45/0.92 | 32.67/0.91 | 33.24/0.91 | 34.62/0.92 | |

| dolphin | 26.31/0.39 | 34.47/0.94 | 35.57/0.93 | 34.79/0.94 | 34.38/0.94 | 36.18/0.94 | |

| 200 | beauty | 23.93/0.46 | 29.72/0.88 | 30.01/0.88 | 29.21/0.87 | 30.27/0.88 | 30.31/0.89 |

| lotus | 24.69/0.36 | 32.67/0.91 | 33.49/0.90 | 32.51/0.90 | 33.01/0.90 | 33.90/0.92 | |

| dolphin | 24.84/0.31 | 34.42/0.93 | 34.67/0.91 | 34.54/0.93 | 34.43/0.93 | 35.22/0.94 | |

| 100 | beauty | 21.62/0.33 | 29.30/0.87 | 28.74/0.83 | 28.96/0.87 | 29.26/0.84 | 29.20/0.87 |

| lotus | 22.14/0.24 | 32.19/0.89 | 31.43/0.84 | 31.91/0.89 | 32.04/0.86 | 32.55/0.90 | |

| dolphin | 22.17/0.20 | 33.77/0.92 | 32.19/0.86 | 33.57/0.91 | 33.42/0.88 | 34.18/0.93 | |

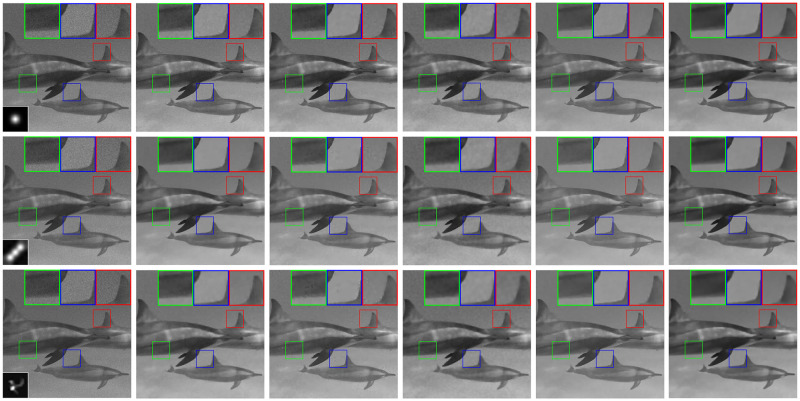

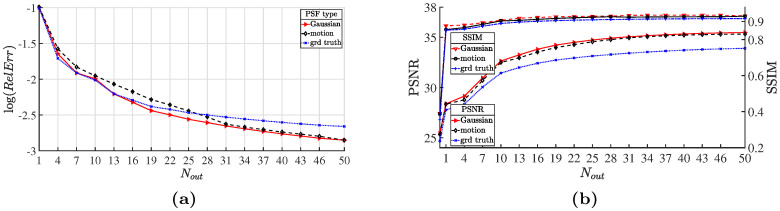

In Fig 4, we show the degraded “dolphin” images which are blurred by the corresponding kernels and further corrupted by the Poisson noise of noise level 200, as well as its restored versions obtained through our method and the competitive methods. Fig 5(a) depicts the RelErr curves for the sequence of temporary estimates of the “lotus” image obtained during the HTVp-OGS iterations. Besides, in Fig 5(b), we show that, as the iteration proceeds, the PSNR and SSIM values moderately increase. From the results of the above three experiments, we can see that in most cases, our method provides the best results in terms of the PSNR and SSIM values, and in extreme cases, we obtain a slightly lower PSNR value than others, but the SSIM value still keeps the best. It is worth noting that the transform-based methods (SB-FA and FT-ADMM) are quite time-consuming because they inherently involve some complicated nonlinear operations [3], while our method works in the gradient domain, making it take a relatively short running time.

Restored “dolphin” images and zoom-in regions from the degraded images with different blur kernels and the Poisson noise of level MAXf = 200.

first row: Gaussian blur; second row: motion blur; third row: ground truth blur; first column: degraded images (PSF is shown at the bottom-left corner); second column: restored images by SB-FA [38]; third column: restored images by SAHTV [5]; fourth column: restored images by TVOGS [4]; fifth column: restored images by FT-ADMM [37]; sixth column: restored images by HTVp-OGS.

Plot of performance along the HTVp-OGS iterations for restoring “lotus” images blurred by different kernels and corrupted by the Poisson noise of level MAXf = 200.

(a) relative error in log scale; (b) PSNR and SSIM values.

5 Conclusions

In this paper, we propose a new method to restore Poissonian images (including denoising and deblurring) by using a hybrid regularizer, which combines the overlapping group sparse total variation with the high-order nonconvex total variation. This regularizer allows us to exploit their advantages in order to alleviate the staircase artifacts while preserving the original sharp edges simultaneously. The model derived from the Bayesian perspective is effectively solved by the nonconvex and nonsmooth ADMM optimization framework. We adopt the MM and IRLS algorithm for solving the subproblems accompanied by the OGS and nonconvex second-order total variation priors, respectively. Numerical experiments demonstrate that the proposed method outperforms the other related methods in terms of the PSNR and SSIM values. The study on adaptively determining the optimal parameters according to the image content and noise level will be the future work.

Acknowledgements

The authors would like to thank Dr. Houzhang Fang and Dr. Haimiao Zhang for supplying us with the codes used in our numerical comparisons.

References

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

Poisson noisy image restoration via overlapping group sparse and nonconvex second-order total variation priors

Poisson noisy image restoration via overlapping group sparse and nonconvex second-order total variation priors