Competing Interests: The authors have declared that no competing interests exist.

Collective behavior, and swarm formation in particular, has been studied from several perspectives within a large variety of fields, ranging from biology to physics. In this work, we apply Projective Simulation to model each individual as an artificial learning agent that interacts with its neighbors and surroundings in order to make decisions and learn from them. Within a reinforcement learning framework, we discuss one-dimensional learning scenarios where agents need to get to food resources to be rewarded. We observe how different types of collective motion emerge depending on the distance the agents need to travel to reach the resources. For instance, strongly aligned swarms emerge when the food source is placed far away from the region where agents are situated initially. In addition, we study the properties of the individual trajectories that occur within the different types of emergent collective dynamics. Agents trained to find distant resources exhibit individual trajectories that are in most cases best fit by composite correlated random walks with features that resemble Lévy walks. This composite motion emerges from the collective behavior developed under the specific foraging selection pressures. On the other hand, agents trained to reach nearby resources predominantly exhibit Brownian trajectories.

Collective behavior is a common but intriguing phenomenon in nature. Species as diverse as locusts, and some families of fish or birds exhibit different types of collective motion in very different environments and situations. Although the general properties of swarms, schools and flocks have been widely studied (see e.g. [1] for a review), the emergence of global, coordinated motion from the individual actions is still a subject of study. Different approaches, ranging from statistical physics to agent-based models, have led to new insights and descriptions of the phenomenon. Statistical physics models are very successful at describing macroscopic properties such as phase transitions and metastable states [2–4], but in order to apply the powerful tools of statistical mechanics, these models normally simplify the individuals to particles that interact according to certain rules dictated by the physical model adopted, as for instance the Ising-type interaction of the spins in a lattice. A different type of models are the so-called self-propelled particle (SPP) models [5–8], which enable higher complexity in descriptions at the individual level but still allow one to employ the tools of statistical physics. They describe individuals as particles that move with a constant velocity and interact with other individuals via fixed sets of rules that are externally imposed. In SPP models, the description of the interactions is not restricted to physically accepted first principles, but can include ad hoc rules based on specific experimental observations.

In this work, we follow a different approach and model the individuals as artificial learning agents. In particular, we apply Projective Simulation (PS) [9], which is a model of agency that can incorporate learning processes via a reinforcement learning mechanism. The individuals are thus described as PS agents that interact with their surroundings, make decisions accordingly and learn from them based on rewards provided by the environment. This framework allows for a more detailed, realistic description in terms of the perceptual apparatus of the agent. One of the main differences with respect to previous models is that the interaction rules between agents are not imposed or fixed in advance, but they emerge as the result of learning in a given task environment. This type of agent-based models that employ artificial intelligence to model behavior are gaining popularity in the last few years. Artificial neural networks (ANN) have been used, for instance, in the context of navigation behaviors [10, 11] and reinforcement learning (RL) algorithms have been applied to model collective behavior in different scenarios, such as pedestrian movement [12] or flocking [13, 14].

In contrast to other learning models such as neural networks, PS provides a transparent, explicit structure that can be analyzed and interpreted. This feature is particularly useful in modeling collective behavior, since we can study the individual decision making processes, what the agents learn and why they learn it. This way, we can directly address the questions of how and why particular individual interactions arise that in turn lead to collective behaviors. Initial work by Ried et al. [15], where the authors use PS to model the density-dependent swarm behavior of locusts, laid the foundations of the present work.

Since the interaction rules are developed by the agents themselves, the challenge is to design the environment and learning task that will give rise to the individual and, consequently, collective behavior. In previous works, the agents are directly rewarded for aligning themselves with the surrounding agents [15] or for not losing neighbours [14]. Instead of rewarding a specific behavior, in this work we set a survival task that the agents need to fulfill in order to get the reward, and then analyze the emergent behavioral dynamics.

As a starting hypothesis, we consider the need to forage as an evolutionary pressure and design a learning task that consists in finding a remote food source. Due to this particular survival task, our work relates to the investigation of foraging theories and optimal searching behavior.

There is a vast number of studies devoted to the analysis of foraging strategies in different types of environments e.g., [16–19]. In the particular case of environments with sparsely distributed resources (e.g. patchy landscapes), there are two main candidates for the optimal search model: Lévy walks [20–22] and composite correlated random walks (CCRW) [23, 24]. The former are described by a single distribution of step lengths that is characterized by a power-law p(ℓ) ∼ ℓ−μ with exponent 1 < μ ≤ 3, whereas the latter consider that the movement is composed of two different modes, characterized by two exponential distributions with different decay rates. Although the mathematical models behind them are fundamentally different, they have some common features that make the movement patterns hard to distinguish [24–28]. In broad terms, both models can produce trajectories that are a combination of short steps (with large turning angles in 2D), which are useful for exploring the patch area, and long, straight steps, which are efficient to travel the inter-patch distances. Even though both models have theoretical [22, 23] and experimental (e.g. [29, 30]) support, it is not yet clear if animal foraging patterns can be described and explained by such models or if they are too complex to admit such simplifications.

Furthermore, regarding the Lévy walks, there is an ongoing debate on the question whether they emerge under certain animal foraging strategies. Currently there exist two main hypotheses, referred to as the evolutionary and the emergentist. The evolutionary hypothesis (also called Lévy flight foraging (LFF) hypothesis) states that certain species have evolved according to natural selection to develop an optimal foraging strategy consisting of Lévy walk movement patterns (see e.g. [31] and references therein). On the other side, the emergentist hypothesis argues that the LFF hypothesis is not sufficient to account for the complexity of animal behavior since it does not explain certain anomalies observed experimentally (see [32] and references therein). It argues that Lévy walks can emerge spontaneously as a consequence of the features of the environment, which lead to certain responses from the foraging organism. Thus, these responses are not part of an evolved strategy developed over the course of generations, but can arise from innate behaviors and lead to Lévy patterns spontaneously when the animal is confronted with certain environmental conditions.

Due to the fact that our learning task is directly related to foraging strategies, we link the present work to the aforementioned studies by analyzing the individual trajectories the agents produce as a consequence of the behavior developed in the different learning contexts.

The paper is organized as follows: an introduction to Projective Simulation and a detailed description of the model and the learning setup are given in Sec. 2. In Sec. 3, we present different learning tasks and analyze the resulting learned behaviors. In Sec. 4, we study the emergent group dynamics and individual trajectories within the framework of search models to determine if they can be described as Lévy walks or composite correlated random walks. Finally, we summarize the results and conclude in Sec. 5.

A wide range of models and techniques have been applied to the study of collective behavior. In this work, we apply Projective Simulation, a model for artificial agency [9, 33–37]. Each individual is an artificial agent that can perceive its surroundings, make decisions and perform actions. Within the PS model, the agent’s decision making is integrated into a framework for reinforcement learning (RL) that allows one to design concrete scenarios and tasks that the individuals should solve and then study the resulting strategies developed by the agents. We remark that the notion of strategy employed throughout this work does not imply that the agents are able to plan. We use the word “strategy” to refer to the behavior the agents develop given a certain learning task. In addition, each agent’s motor and sensory abilities can be modeled in a detailed, realistic way.

In our model of collective behavior, the interaction rules with other individuals are not fixed in advance; instead the agents develop them based on their previous experience and learning. The most natural interpretation of this approach is that it describes how a group of given individuals change their behavior over the course of their interactions, for example human children at play. However, our artificial learning agents can also be used to model simpler entities that do not exhibit learning in the sense of noticeable modifications of their responses over the course of a single individual’s lifetime, but only change their behavior over the course of several generations. In this case, a single simulated agent does not correspond to one particular individual, in one particular generation, but rather stands as an avatar for a generic individual throughout the entire evolution of the species. The environmental pressures driving behavioural changes over this time-scale can be easily encoded in a RL scenario, since the reward scheme can be designed in such a way that only the behaviors that happen to be beneficial under these pressures are rewarded. This allows us to directly test whether the environmental pressures are a possible causal explanation for the observed behavior or not. Our approach interprets the reinforcement of certain responses from an evolutionary perspective. It differs from genetic algorithms, extended classifier systems [38], and similar advanced machine learning methodology in that it does not model evolution in an explicit manner. Such machinery, e.g., the encoding of genes, mutations, and crossover, usually comes at the cost of a larger model complexity (number of free parameters; see [33]) and additional computational overhead. Alternatively, neural network models might be employed, but these are typically difficult to interpret and thus not useful in our context. Unlike genetic algorithms, Projective Simulation provides a model of agency that describes a stochastic decision-making process of each individual, which can be used beyond mere optimization by focusing on the resulting causal explanations.

Although other reinforcement learning algorithms may be used to model a learning agent, Projective Simulation is particularly suitable for the purpose of modeling collective behavior, since it provides a clear and transparent structure that gives direct access to the internal state of the agent, so that the deliberation process can be analyzed in an explicit way and can be related to the agent’s behavior. This analysis can help us gain new insight into how and why the individual interactions that lead to collective behaviors emerge.

Projective Simulation (PS) is a model for artificial agency that is based on the notion of episodic memory [9]. The agent interacts with its surroundings and receives some inputs called percepts, which trigger a deliberation process that leads to the agent performing an action on the environment.

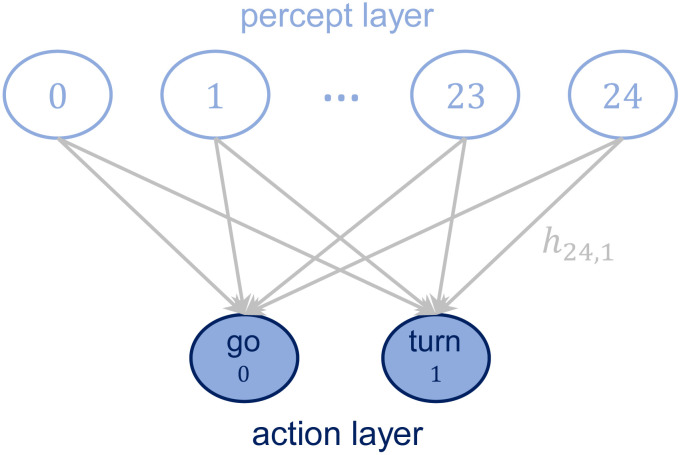

In the PS model, the agent processes the percepts by means of an internal structure called episodic and compositional memory (ECM), whose basic units are called clips and represent an episode of the agent’s experience. Mathematically, the ECM can be represented as a directed, weighted graph, where each node corresponds to a clip and each edge corresponds to a transition between two clips. All the edge weights are stored in the adjacency matrix of the graph, termed h matrix. For the purpose of this work, the most basic two-layered structure is sufficient to model simple agents. Percept-clips are situated in the first layer and are connected to the action-clips, which constitute the second layer (see Fig 1). Let us define these components of the ECM more formally.

The percepts are mathematically defined as N-tuples , where

Analogously, the actions are defined as

Structure of the ECM.

The ECM consists of two layers, one for the percepts and one for the actions. Percepts and actions are connected by edges whose weight hij determines the transition probability from the given percept to each action (see Sec. 2.2 for details on the model).

As an example, consider an agent that perceives both its internal state, denoted by

Fig 1 represents the structure of the ECM in our model, which consists of a total of 25 percepts and 2 actions (see Sec. 2.2 for a detailed description).

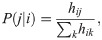

Let us introduce how the agent interacts with the environment and makes decisions via the ECM. When the agent receives a percept, the corresponding percept-clip inside the ECM is activated, starting a random walk that only ends when an action-clip is reached, which triggers a real action on the environment. The transition probability P(j|i) from a given percept-clip i to an action-clip j is determined by the corresponding edge weight hij as,

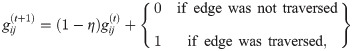

The structure of the ECM allows one to easily model learning by just updating the h matrix at the end of each interaction round. The h matrix is initialized with all its elements being 1, so that the probability distribution of the actions is uniform for each percept. Reinforcement learning is implemented by the environment giving a reward to the agent every time that it performs the correct action. The reward increases the h-values, and thus the transition probabilities, of the successful percept-action pair. Hence, whenever the agent perceives again the same percept, it is more likely to reach the correct action. However, in the context of this work, we are setting a learning task in which the agent should perform a sequence of several actions to reach the goal and get the reward. If the reward is given only at the last interaction round, only the last percept-action pair would be rewarded. Thus, some additional mechanism is necessary in order to store a sequence of several percept-action pairs in the agent’s memory. This mechanism is called glow and the matrix that stores the information about this sequence is denoted by g. The components gij, corresponding to the percept-action transition i → j, are initialized to zero and are updated at the end of every interaction round according to:

In the context of our learning task, the agent receives a reward from the environment at the end of the interaction round at which it reaches a goal. Then, the learning is implemented by updating the h matrix with the rule,

Since we model collective behavior, we consider a group of several agents, each of which has its own and independent ECM to process the surrounding information. Details on the specific learning task and the features of the agents are given in the following section.

We consider an ensemble of N individuals that we model as PS learning agents, which possess the internal structure (ECM) and the learning capabilities described in section 2.1. This description of the agents can be seen as a simplified model for species with low cognitive capacities and simple deliberation mechanisms, or just as a theoretical approach to study the optimal behavior that emerges under certain conditions.

With respect to the learning, we set up a concrete task and study the strategy agents develop to fulfill it. In particular, we consider a one-dimensional circular world with sparse resources, which mimics patchy landscapes such as deserts, where organisms need to travel long distances to find food. Inspired by this type of environments, we model a task where agents need to reach a remote food source to get rewarded. The strategy the agents learn via the reinforcement learning mechanism does not necessarily imply that the individual organisms should be able to learn to develop it, but can also be interpreted as the optimal behavior that a species would exhibit under the given environmental pressures.

Let us proceed to detail the agents’ motor and sensory abilities. The positions that the agents can occupy in the world are discretized {0, 1, 2…W}, where W is the world size (total number of positions). Several agents can occupy the same position. At each interaction round, the agent can decide between two actions: either it continues moving in the same direction or it turns around and moves in the opposite direction. The agents move at a fixed speed of 1 position per interaction round. For the remainder of this work, we consider the distance between two consecutive positions of the world to be our basic unit of length. Therefore, unless stated otherwise, all distances given in the following are measured in terms of this unit. We remark that, in contrast to other approaches where the actions are defined with respect to other individuals [39], the actions our agents can perform are purely motor and only depend on the previous orientation of the agent.

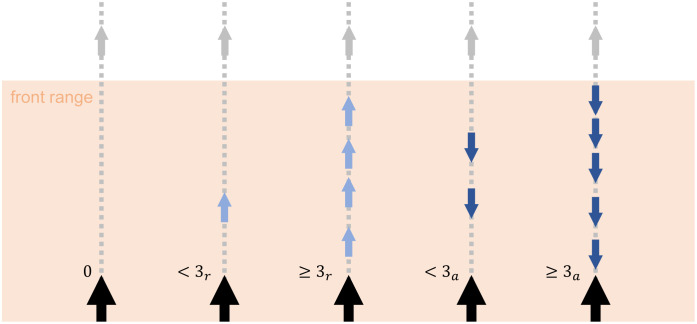

Perception is structured as follows: a given agent, termed the focal agent, perceives the relative positions and orientations of other agents inside its visual range (radius with center at the agent’s position) VR, termed its neighbors. The percept space S (see Sec. 2.1) is structured in the Cartesian product form S ∈ Sf × Sb, where Sf is the region in front of the focal agent and Sb the region at the back. More precisely, each percept s = (sf, sb) contains the information of the orientation of the neighbors in each region with respect to the focal agent and if the density of individuals in this region is high or low (see Fig 2). Each category of percepts can take the values sf, sb ∈ {0, <3r, ≥3r, <3a, ≥3a} (25 percepts in total), which mean:

0. No agents

<3r. There are less than 3 neighbors in this region and the majority of them are receding from the focal agent.

≥3r. There are 3 or more neighbors in this region and the majority of them are receding from the focal agent.

<3a. There are less than 3 neighbors in this region and the majority of them are approaching the focal agent.

≥3a. There are 3 or more neighbors in this region and the majority of them are approaching the focal agent.

Graphical representation of the percepts’ meaning.

Only the front visual range (colored region) is considered, which corresponds to the values that category sf can take. The focal agent is represented with a larger arrow than the frontal neighbors. The agent can only see its neighbors inside the visual range and it can distinguish if the majority are receding (light blue) or approaching (dark blue) and if they are less or more than three.

In the following discussions, we refer to the situation where the focal agent has the same orientation as the neighbors as a percept of positive flow (majority of neighbors are receding at the front and approaching at the back). If the focal agent is oriented against its neighbors (these are approaching at the front and receding at the back), we denote it as a percept of negative flow. Note that the agents can only perceive information about the neighboring agents inside their visual range, but they are not able to see any resource or landmark present in the surroundings. This situation can be found in realistic, natural environments where the distance between resources is large and the searcher has no additional input while moving from one patch to another. Furthermore, the important issue of body orientation is thereby taken into account in our model [32].

The interactions between agents are assumed to be sequential, in the sense that one agent at a time receives a percept, deliberates and then takes its action before another agent is given its percept. Technically, agents are given a label at the beginning of the simulation to keep track of the interaction sequence but we remark that they are placed at random positions in the world. There are two reasons for assuming a sequential interaction. For one, in a group of real animals (or other entities), different individuals typically take action at slightly different times, with perfect synchronization being a remarkable and costly exception. The second argument in favor of sequential updating is that it ensures that a given agent’s circumstances do not change from the time it receives its percept until the time when its acts. If the actions of all agents were applied simultaneously, a given focal agent would not be able to react to the actions of the other agents in the same round. Such a simplification would not allow us to take into account any sequential, time-resolved interactions between different agents of a group. In the real situation, while one focal agent is deliberating, other agents’ actions may change its perceptual input. Therefore, an action that may have been appropriate at the beginning of the round, would no longer be appropriate at this agent’s turn.

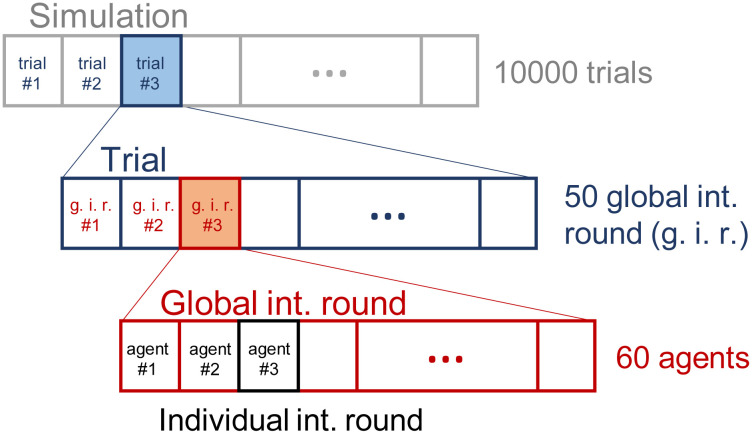

The complete simulation has the structure displayed in Fig 3, where:

With each ensemble of N = 60 agents, we perform a simulation of 104 trials during which the agents develop new behaviors to get the reward (RL mechanism). This process is denoted as learning process or training from this point on.

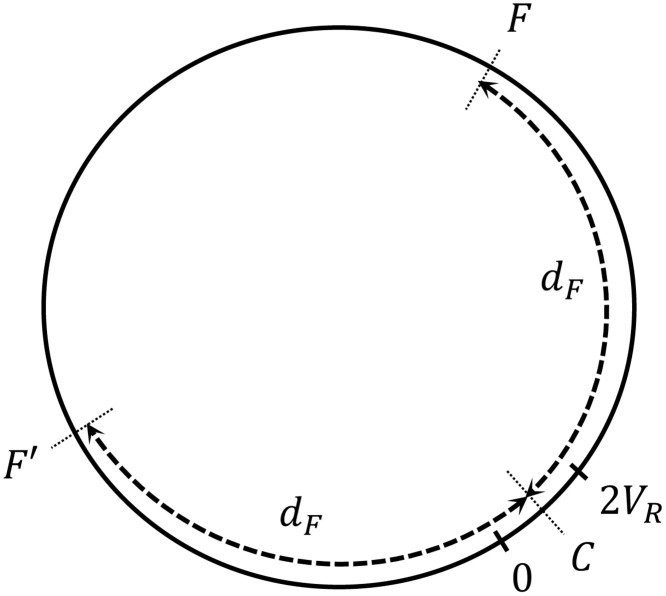

Each trial consists of n = 50 global interaction rounds. At the beginning of each trial, all agents of the ensemble are placed in random positions within the initial region (see Fig 4).

We define a global interaction round to be the sequential interaction of the ensemble, where agents take turns to perform their individual interaction round (perception-deliberation-action). Note that each agent perceives, decides and moves only once per global interaction round.

Structure of the simulation.

Each ensemble of agents is trained for 104 trials, where each trial consists of 50 global interaction rounds (g.i.r.). At each g.i.r., the agents interact sequentially (see text for details).

1D environment (world).

Agents are initialized randomly within the first 2VR positions. Food is located at positions F and F′. dF is the distance from the center of the initial region C to the food positions.

The learning task is defined as follows: at the beginning of each trial, all the agents are placed at random positions within the first 2VR positions of the world, with orientations also randomized. Each agent has a fixed number n of interaction rounds over the course of a trial to get to a food source, located at positions F and F′ (Fig 4). At each interaction round, the agent first evaluates its surroundings and gets the corresponding percept. Given the percept, it decides to perform one out of the two actions (“go” or “turn”). After a decision is made, it moves one position. If the final position of the agent at the end of an interaction round is a food point, the agent is rewarded (R = 1) and its ECM is updated according to the rules specified in Sec. 2.1. Each agent can only be rewarded once per trial. Note that the h matrices of the agents are only updated following Eq (3), and we do not consider any other transformation to explicitly model evolution as it could be done, in principle, using genetic algorithms to explicitly represent evolutionary mechanisms of mutation, crossover etc. (see also the discussion at the beginning of Sec. 2).

We consider different learning scenarios by changing the distances dF at which food is positioned. However, note that a circular one-dimensional world admits a trivial strategy for reaching the food without any interactions, namely going straight in one direction until food is reached. Thus, in order to emulate the complexity that a more realistic two-dimensional scenario has in terms of degrees of freedom of the movement, we introduce a noise element that randomizes the orientation of each agent every sr steps (it changes orientation with probability 1/2). Not all agents randomize the orientations at the same interaction round, which would lead to random global behavior. This randomization can be also interpreted biologically as a fidgeting behavior or even as a built-in behavior to escape predators [40]. Protean movement has been observed in several species [41–44] and there exist empirical studies that show that unpredictable turns [45] and complex movement patterns [46] decrease the risk of predation. In addition, if the memory of the organism is not very powerful, we can also consider that, at these randomization points, it forgets its previous trajectory and needs to rely on the neighbors’ orientations in order to stabilize its trajectory. The agent can do so, since the randomization takes place right before the agent starts the interaction round.

Under these conditions, we study how the agents get to the food when the only input information available to them is the orientation of the agents around them.

We consider different learning scenarios characterized by the distance dF (see Fig 4). We study how the dynamics that the agents develop in order to reach the food source change as the distance dF increases. In particular, we focus on two extreme scenarios: one where the resource is within the initial region (dF < VR) —agents are initialized within the first 2VR positions of the world—, and the other one where the resource is at a much larger distance. As a scale for this distance, we consider how far an agent can travel on average with a random walk, which is

The situation where dF < VR mimics an environment with densely distributed resources, whereas the regime with dF ≫ dRW resembles a resource-scarce environment where a random walk is no longer a valid strategy for reaching food sources.

The parameters of the model that are used in all the learning processes are given in Table 1. Providing that

| Agent | Environment | ||

|---|---|---|---|

| Description | Value | Description | Value |

| Visual range (VR) | 6 | Number of agents (N) | 60 |

| Reorient. freq. (sr) | 5 | World size (W) | 500 |

| Glow (η) | 0.2 | Int. rounds per trial (n) | 50 |

| Reward (R) | 1 | Number of trials | 104 |

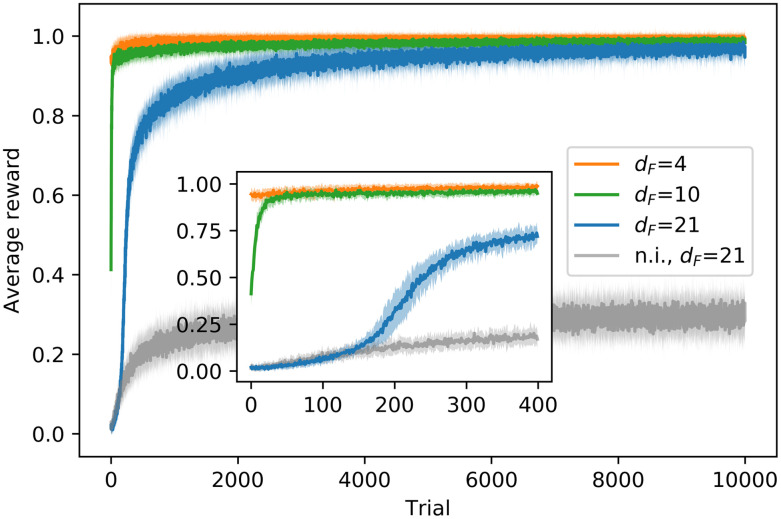

Learning curves for dF = 4, 10, 21 and dF = 21 for non-interacting (n.i.) agents.

The curve shows the percentage of agents that reach the food source and obtain a reward of R = 1 at each trial. For each task, the average is taken over 20 (independent) ensembles of 60 agents each and the shaded area indicates the standard deviation. Zooming into the initial phase of the learning process, the inset figure shows a faster learning in the task with dF = 10 than in the task with dF = 21. In the case of dF = 21, no agent is able to reach the food source in the first trial, and it takes the interacting agents approx. 200 trials to outperform the n.i. agents.

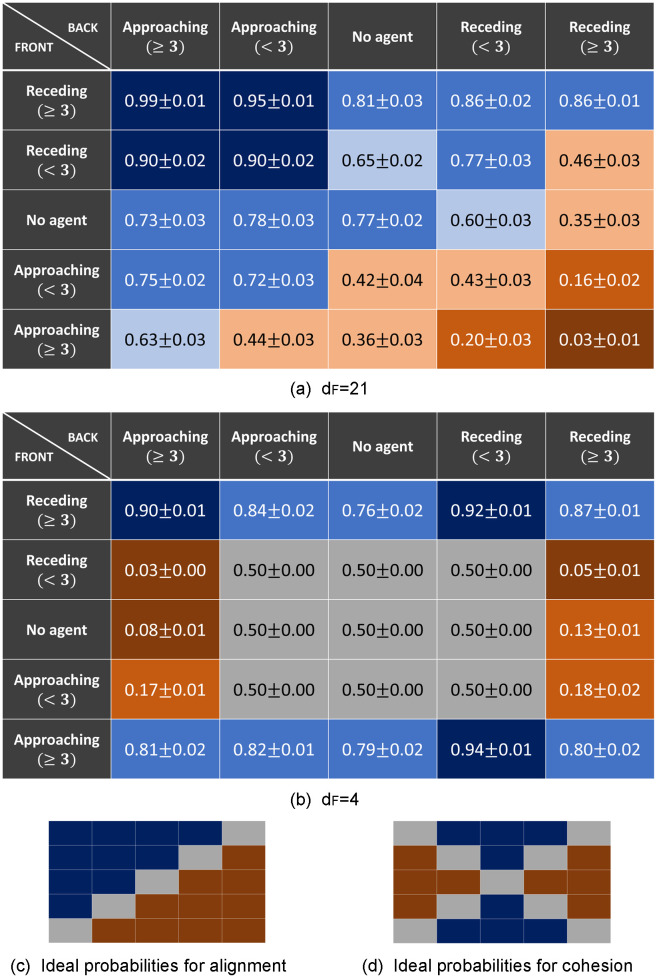

The behavior the agents have learned at the end of the training can be studied by analyzing the final state of the agents’ ECM, from where one obtains the final probabilities for each action depending on the percept the agents get from the environment (see Eq (1)). These final probabilities are given in Fig 6 for the learning tasks with dF = 4, 21.

Learned behavior at the end of the training process.

The final probabilities in the agents’ ECM for the action “go” are shown for each of the 25 percepts (5x5 table). (a) and (b) Final probabilities learned in the scenarios with dF = 21 and dF = 4 respectively. The average is taken over 20 ensembles (each learning task) of 60 agents each. Background colors are given to easily identify the learned behavior, where blue denotes that the preferred action for that percept is “go” and orange denotes that it is “turn”. More specifically, the darker the color is, the higher the probability for that action, ranging from grey (p ≃ 0.5), light (0.5 < p < 0.7) and normal (0.7 ≤ p < 0.9) to dark (p ≥ 0.9). Figures (c) and (d) show what the tables would look like if the behavior is purely based on alignment (agent aligns to its neighbors with probability 1) or cohesion (agent goes towards the region with higher density of neighbors with probability 1), respectively. See text for details.

Tables of Fig 6 show the probability of taking the action “go” for each of the 25 percepts. We focus on the learning tasks with dF = 4, 21, which represent the two most distinctive behaviors that we observe.

Let us start with the case of dF = 21 (Fig 6(a)), which corresponds to a task where the food is located much further away than the distance reachable with a random walk. In this case, highly aligned swarms emerge as the optimal collective strategy for reaching the food (see also Sec. 3.2 and figures therein), since the orientations of the surrounding neighbors allow the focal agent to stabilize its orientation against the periodic randomization. The individual responses that lead to such collective behavior can be studied by looking at table (a): the diagonal corresponds to percepts with a clear reaction leading to alignment, i.e. to keep going when there is a positive flow of neighbors and to turn if there is a negative flow. More specifically, one can see that when the agent is in the middle of a swarm and aligned with it, the probability that it keeps going is 0.99 for dense swarms [percept (≥3r, ≥3a)] and 0.90 for sparse swarms [percept (<3r, <3a)]. In the same situations, the agent that is not aligned turns around with probability 0.97 for dense swarms [percept (≥3a, ≥3r)] and 0.57 for sparse swarms [percept (<3a, <3r)]. Outside the diagonal, one observes that the probability of turning is high when a high density of agents are approaching the focal individual from the front (last row) and the agents in the back are not approaching. We can also analyze the learned behavior at the back edge of the swarm, which is important to keep the cohesion of the swarm. When an agent is at the back of a dense swarm and aligned with it [percept (≥3r, 0)], the probability of keeping the orientation is 0.81. If instead, the agent is oriented against the swarm [percept (0, ≥3r)] the probability of turning around to follow the swarm is 0.65. This behavior is less pronounced when the swarm is not so dense [percepts (<3r, 0), (0, <3r)], in fact, when a low density of neighbors at the back are receding from the focal agent [percept (0, <3r)], the focal agent turns around to rejoin the swarm with probability 0.4, which results in this agent leaving the swarm with higher probability. If the agent is alone [percept (0, 0)], it keeps going with probability 0.77.

A very different table is observed for dF = 4 (Fig 6(b)). In this task, the food source is located inside the initial region where the agents are placed at the beginning of the trials, so the agents perceive, in general, high density of neighbors around them. For this reason, they rarely encounter the nine percepts encoding low density —that correspond to the ones at the center of the table, with grey background (Table (b) in Fig 6)— throughout the interaction rounds they perform until they get the reward. The corresponding probabilities are the initialized ones, i.e. 1/2 for each action. For the remaining percepts, we observe that the agents have learned to go to the region with higher density of neighbors, which leads to very cohesive swarms (see also Sec. 3.2.2). Since the food source is placed inside the initialization region in this case —which is also within the region agents can cover with a random walk—, there is a high probability that there are several agents already at the food source when an agent arrives there, so they learn to go to the regions with higher density of agents. This behavior can be observed, for instance, for percepts in the first column (high density at the back) and second, third and fourth row (low/no density at the front), where the agents turn around with high probability. In addition, we observe that there is a general bias towards continuing in the same direction, which can be seen for example in percepts with the same density in both regions (e.g. percepts at the corners of the table). The tendency to keep walking is always beneficial in one-dimensional environments to get to the food source (non-interacting agents learn to do so deterministically, as argued for Fig 5). In general, we observe that, in order to find the resource point at dF = 4, agents do not need to align with their neighbors because the food is close enough that they can reach it by performing a Brownian walk.

Fig 6(c) and 6(d) show what the tables would look like if the agents had deterministically (with probability 1) learned just to align with the neighbors (c) or just to go to the region inside the visual range with higher density of neighbors (d). In these figures, percepts for which there is no pronounced optimal behavior have grey background.

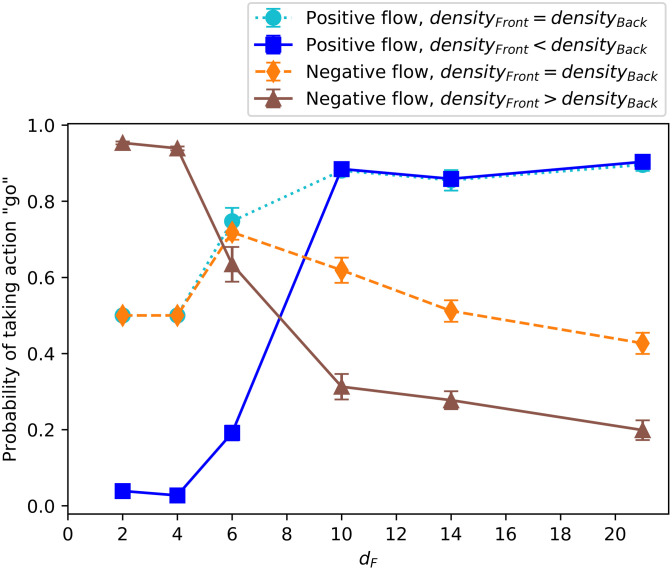

In Fig 7, we select four representative percepts that show the main differences in the individual behaviors and plot the average probability of taking the action “go” at the end of a wide range of different learning scenarios where the distance to the food source is increasingly large. We observe that there are two clear regimes with a transition that starts at dF = 6. This is the end of the initial region (see Fig 4, with VR = 6 in our simulations) where the agents are positioned at the beginning of each trial (see S1 Appendix for details on why this transition occurs at dF = 6). The main difference between regimes is that, when the food is placed near the initial positions of the agents, they learn to “join the crowd”, whereas, if the food is placed farther away, they learn to align themselves and “go with the flow”. More specifically, for dF < 6, the orientations of the surrounding neighbors do not play a role, but the agents learn to go to the region (front/back) with higher number of neighbors, which leads to unaligned swarms with high cohesion. On the contrary, for the tasks with dF > 6, the agents tend to align with their neighbors. This difference in behavior can be observed, for instance, in the dark blue (squares) curve of Fig 7, which corresponds to the percept “positive flow and higher density at the back”. We observe that for dF = 2, 4, the preferred action is “turn” (the probability of taking action “go” is low), since there are more neighbors at the back. However, for dF = 10, 14, 21, the agents tend to continue in the same direction, since there is a positive flow (neighbors have the same orientation as the focal agent). Analogously, the brown curve (triangles) shows the case where there is a negative flow and higher density at the front, so agents trained to find nearby food (dF = 2, 4) have high probability of going, whereas agents trained to find distant food (dF = 10, 14, 21) have high probability of turning.

Final probability of taking the action “go” depending on the learning task (increasing distance to food source dF) for four significant percepts.

The percepts are (< 3r, < 3a), (< 3r, ≥ 3a), (< 3a, < 3r), (≥ 3a, < 3r), respectively (see legend). The average is taken over the agents’ ECM of 20 independently trained ensembles (1200 agents) at the end of the learning process. Each ensemble performs one task per simulation (dF does not change during the learning process).

We remark that, even though the learning task is defined in terms of the distances dF, the results from this section and S1 Appendix show that the main features of these two types of dynamics do not only depend on the choice of the absolute value of dF, but also on the location where the food is placed relative to the initial distribution of the agents. Therefore, we observe that the two regimes correspond to the situations when (i) the food is placed within the initial region where the other agents are also situated at the beginning and (ii) when the food is placed far away from the initial region. In case (i) agents learn to rely on the information about the positions of the neighbors and in (ii) they learn to rely on the information about their orientations. Note that for instance agents trained with dF = 4 have low probability of taking the action “go” (which leads to alignment) if the initial region is 2VR (Fig 7), whereas they take the action “go” with higher probability if the initial region is reduced to VR (see Fig 1 in S1 Appendix).

In general, we observe that agents with the same motor and sensory abilities can develop very different behaviors in response to different reward schemes. Agents start with the same initial ECM in all the learning scenarios, but depending on the environmental circumstances, in our case the distance to food, some responses to sensory input happen to be more beneficial than others in the sense that they eventually lead the agent to get a reward. For instance, agents that happen to align with their neighbors are the ones that reach the reward when the food is far away, so this response is enhanced in that particular scenario, but not in the one with nearby food.

In this section, we study the properties of the collective motion that emerges from the learned individual responses analyzed in the previous section. We focus on two main properties of the swarms, namely alignment and cohesion. Figs 8 and 9 show the trajectories of the agents of one ensemble before (Fig 8) any learning process and at the end of the learning processes with dF = 4, 21 (Fig 9). One can see that the collective motion developed in the two scenarios differs greatly in terms of alignment and cohesion. Thus, we quantify and analyze these differences in the following.

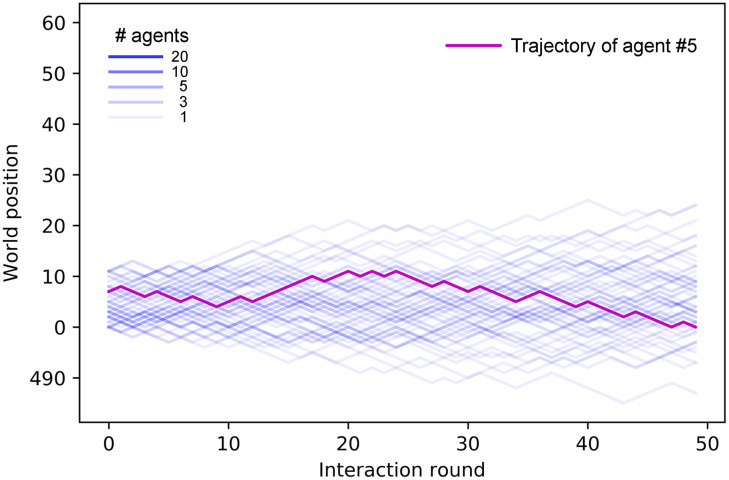

Trajectories (position vs. time) of an ensemble of 60 agents in one trial prior to any learning process.

The vertical axis displays the position of the agent in the world and the horizontal axis the interaction round (note that the trial consists of n = 50 rounds). Each line corresponds to the trajectory of one agent. However, some agents’ trajectories overlap, which is indicated by the color intensity. The trajectory of one particular agent is highlighted for clarity.

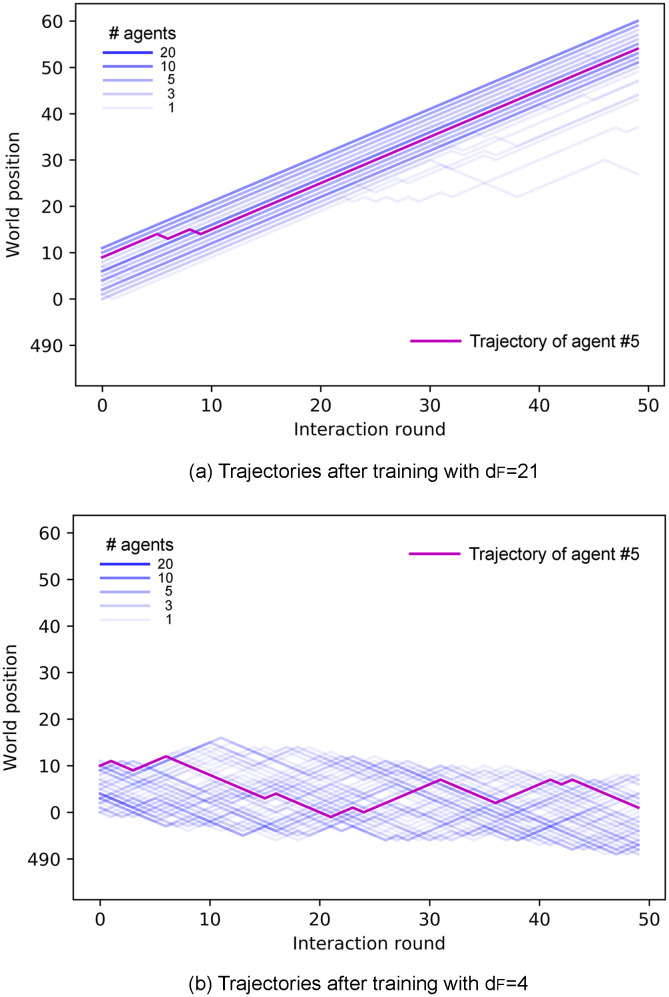

Trajectories of all agents of an ensemble in the last trial of the learning process for (a) dF = 21 and (b) dF = 4.

Ensembles of agents trained to find distant food form aligned swarms (a), whereas agents trained to find nearby food form cohesive, unaligned swarms (b). With the same number of interaction rounds, aligned swarms (a) cover larger distances than cohesive swarms (b). In addition, observe that trajectories in panel (b) spread less than in Fig 8.

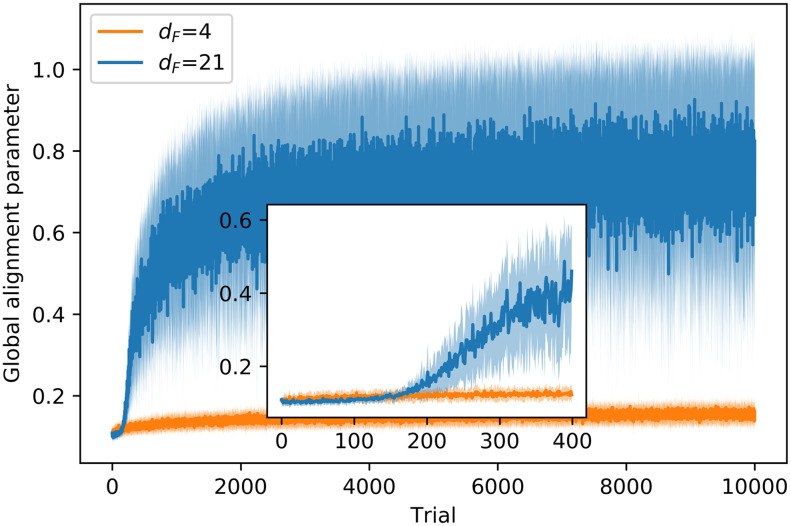

The emergence of aligned swarms as a strategy for reaching distant resources is studied by analyzing the order parameter, defined as

Fig 10 shows how agents that need to find nearby food do not align, whereas those whose task is to find distant resources learn to form strongly aligned swarms as a strategy for getting the reward, as can be seen from the increase in the order parameter over the course of the training. The inset in Fig 10 shows that agents with the reward at dF = 21 start to align with the neighbors from trial 200, which leads to the conclusion that increasing the alignment is the behavior that allows them to get to the reward (note that the agents start to be rewarded also from trial 200, as can be seen in the inset of Fig 5). The large standard deviation in the dF = 21 case is due to the fact that, in some trials, agents split in two strongly aligned groups that move in opposite directions (see Fig 3 (a) in S1 Appendix for details).

Evolution of the global alignment parameter through the learning processes with dF = 4,21.

At each trial, there is one data point that displays the average of the order parameter, first over all the (global) interaction rounds of the trial and then over 20 different ensembles of agents, where each ensemble learns the task independently. Shaded areas represent one standard deviation.

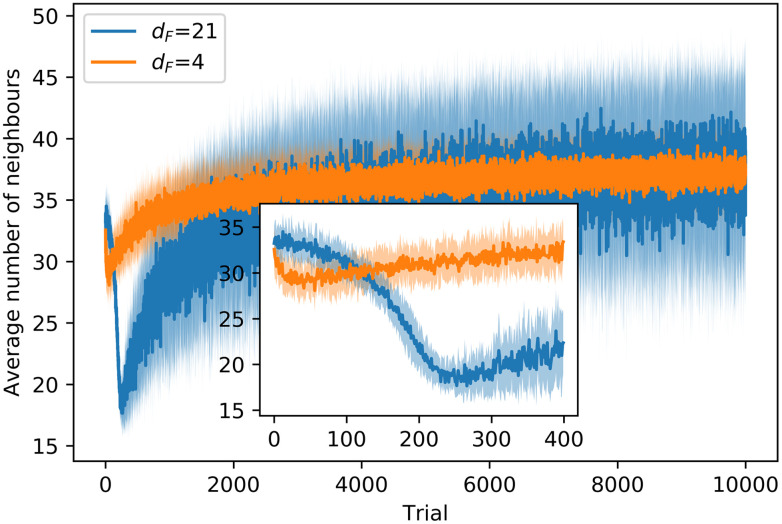

In this section, we study the cohesion and stability of the different types of swarms. In particular, we quantify the cohesion by means of the average number of neighbors (agents within visual range of the focal agent),

Fig 11 shows the evolution of the average number of neighbors through the learning processes with dF = 4, 21. In the training with dF = 21, we observe a decay in M in the first 200 trials, due to the fact that agents start to learn to align locally (see S1 Appendix and Fig 4 therein for details), but the global alignment is not high enough to entail an increase in the average number of neighbors. Therefore, as agents begin to move in straight lines for longer intervals (instead of the initial Brownian motion), they tend to leave the regions with a higher density of agents and M drops. From trial 200 onwards, agents start to form aligned swarms —global alignment parameter increases (see inset of Fig 10)— to get to the food, which leads to an increase in M (see inset of Fig 11). In the training with dF = 4, agents learn quickly (first 50 trials) to form cohesive swarms, so M increases until a stable value of 36 neighbors is attained.

Evolution of the average number of neighbors around each agent through the learning processes with dF = 4,21.

At each trial, there is one data point that displays the average of M, first over all the (global) interaction rounds of the trial and then over 20 different ensembles of agents, where each ensemble learns the task independently. Shaded areas represent one standard deviation.

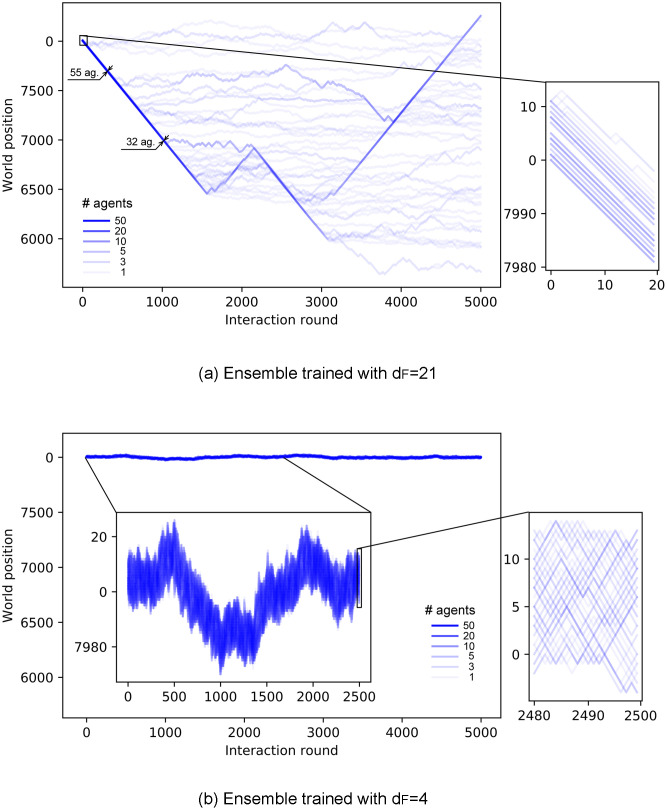

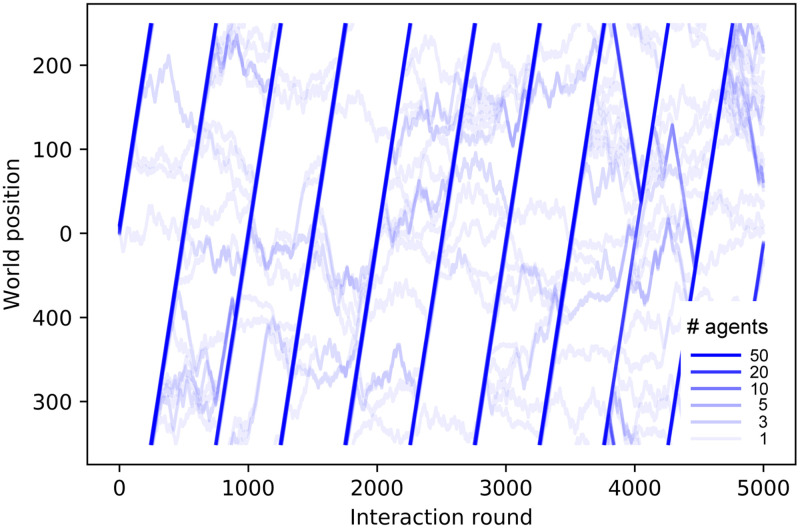

Up to this point, all the analyses have been done with trials of 50 interaction rounds. However, this is insufficient for assessing the stability of the swarm. For this purpose, we take the already trained ensembles and let them walk for longer trials —the agents do not learn anything new in these simulations, i.e. their ECMs remain unchanged— so that we can analyze how the cohesion of the different swarms evolves with time. We place the agents (one ensemble of 60 agents per simulation) in a world that is big enough so that they cannot complete one cycle within one trial. This resembles infinite environments insofar as agents that leave the swarm have no possibility of rejoining it. This allows us to study the stability of the swarm cohesion and the conditions under which it disperses.

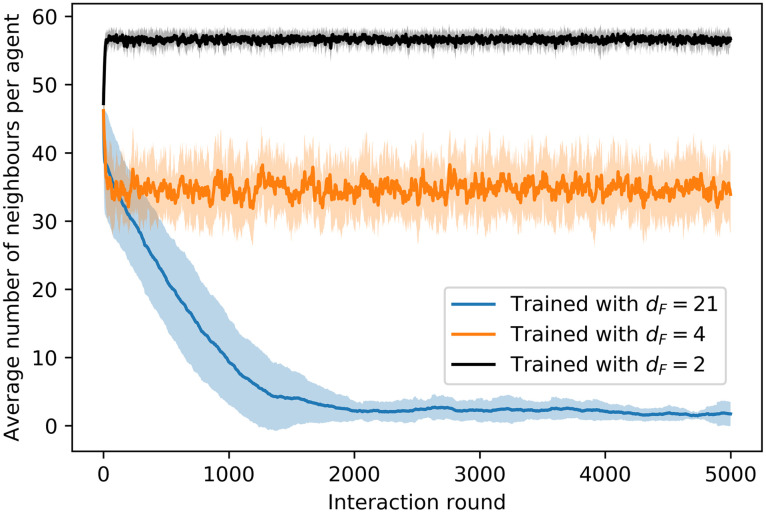

Fig 12 shows the trajectories of ensembles of agents trained with different distances dF. In the case with dF = 21 (Fig 12(a)), there is a continuous drop of agents from the swarm until the swarm completely dissolves. On the other hand, agents trained with dF = 4 (Fig 12(b)) present higher cohesion and no alignment (see inset of Fig 12(b)). Note that this strong cohesion makes individual trajectories spread less than the Brownian motion exhibited by agents prior to the training (see Fig 8). The evolution of the average number of neighbors throughout the simulation is given in Fig 13, where we compare the cohesion of ensembles of agents trained with dF = 2, 4, 21. In the latter case, the agents leave the swarm continuously, so the average number of neighbors decreases slowly until the swarm is completely dissolved. For dF = 2 (dF = 4) the individual responses are such that the average number of neighbors increases (decreases) in the first 30 rounds until the swarm stabilizes and from then on M stays at a stable value of 57 (35) neighbors. The average number of neighbors is correlated to the swarm size, which we measure by the difference between the maximum and minimum world positions occupied by the agents (modulo world size W = 500). As one can see in Fig 12(b), all agents remain within the swarm. If the swarm size increases, the average number of neighbors decreases, since the agents are distributed over a wider range of positions. The swarm stabilizes at a given size depending on the individual responses learned during the different trainings. For instance, swarms formed by agents trained with dF = 2 stabilize at swarm sizes of approximately 9 positions, whereas those trained with dF = 4 stabilize at larger swarm sizes (around 17 positions, see e.g. inset of Fig 12(b)), which explains the lower value of M observed in Fig 13 for dF = 4.

Trajectories of an ensemble of 60 agents, in a world of size W = 8000, shown over 5000 interaction rounds.

(a) Agents trained with dF = 21 form a swarm that continuously loses members until it dissolves completely. (b) Agents trained with dF = 4 form a highly cohesive swarm for the entire trial. The centered inset of this plot shows the first 2500 rounds, with a re-scaled vertical axis to observe the movement of the swarm. Insets on the right zoom in to 20 interaction rounds so as to resolve individual trajectories.

Evolution of the average number of neighbors throughout the trial of 5000 interaction rounds.

Average is taken over 20 ensembles of 60 agents each, where for each ensemble the simulation is performed independently. Shaded areas indicates one standard deviation.

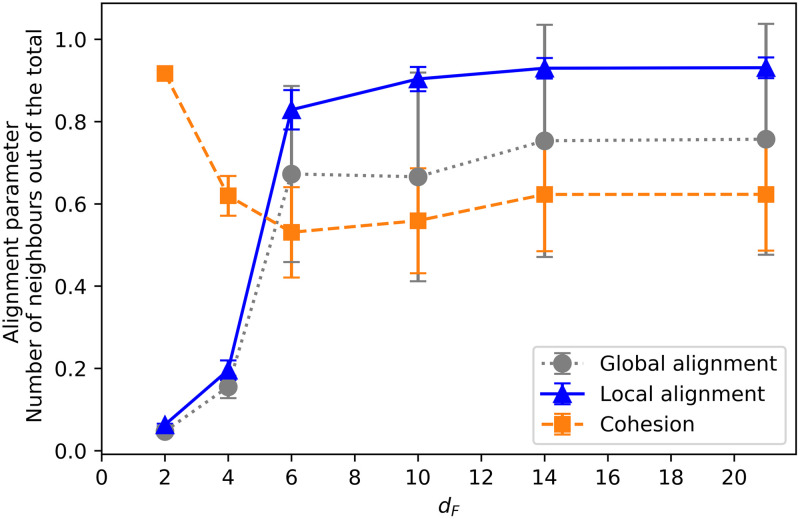

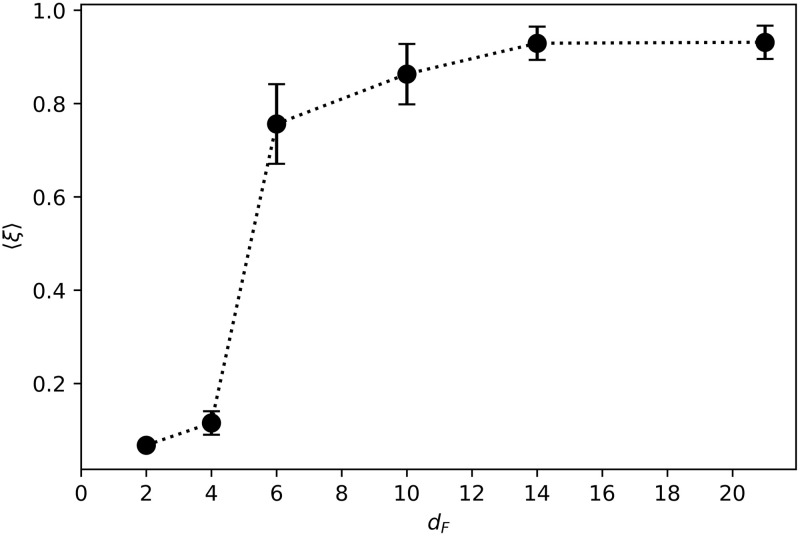

Finally, we compare how the alignment and cohesion of the swarms change as a function of the distance at which the resource is placed in the training. Fig 14 shows the average local and global alignment parameters, together with the average number of neighbors (at the end of the training) as a function of the distance dF with which the ensembles were trained. We observe that the farther away the resource is placed, the more strongly the agents align with their neighbors (local alignment) in order to reach it. This is directly related to the individual responses analyzed in Fig 7, where one can see that for dF ≥ 6 the agents react to positive and negative flow by aligning themselves with their neighbors. Specifically, the observed collective dynamics can be explained in terms of individual responses as follows. The probability of turning around when there is a negative flow and there are not a lot of neighbors (orange-diamonds curve in Fig 7) becomes higher as the dF increases, from ≃0.3 at dF = 6 to 0.6 at dF = 21. The change in the other individual alignment responses (in particular, the other curves in Fig 7) is not so large in the region where dF > 6, which suggests that the increase in the local alignment and cohesion we observe for dF > 6 is mostly due to the strength of the tendency the agents have to turn around when there is a negative flow, even when there are not a lot of neighbors. In addition, the lower values of the global alignment parameter observed in the grey (circles) curve in Fig 14 for dF ≥ 6 correspond to the behavior analyzed in Sec. 3.2.1, where it is shown that strongly aligned swarms split into two groups in some of the trials (see S1 Appendix for details). With respect to the average number of neighbors, we observe that almost all the agents are within each other’s visual range when dF = 2. As dF increases, swarms become initially less cohesive, but once dF > 6, they become strongly aligned and consequently once again more cohesive (see discussion in Sec. 3.2.2 and S1 Appendix for details).

Average number of neighbors (in percentage), global and local alignment parameter as a function of the distance dF.

Note that dF is the distance to the point where food is placed during the training. Each point is the average of the corresponding parameter over all interaction rounds (50) of one trial, and over 100 trials. 20 already trained ensembles are considered.

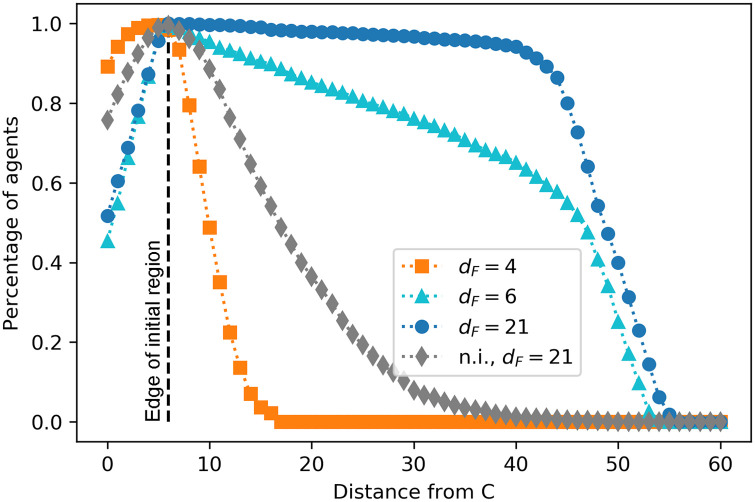

In this section, we study how efficient each type of collective motion is for the purpose of foraging. First, we perform a test where we evaluate how the trained ensembles explore the different world positions. For this test, we analyze which positions in the world are visited by which fraction of agents. The results are given in Fig 15. We observe that, for positions within the initial region, agents trained with dF = 4 perform better than the others, since they do a random walk that allows them to explore all these positions exhaustively (as evidenced by high percentages of agents that explored positions before the edge of the initial region in Fig 15). On the other hand, agents trained with dF = 6, 21 perform worse when exploring nearby regions, since they form aligned swarms and move straight in one direction. This behavior prevents the agents that are initialized close to the edge of the initial region from exploring the positions inside it. The closer the position is to the edge of the initial region, the more agents visit it because they pass through it when traveling within the swarm. Thus, we conclude that the motion of these swarms is not the optimal to exploit a small region of resources that are located close to each other (a patch).

Percentage of agents that visit the positions situated at a distance from C given on the horizontal axis.

Since C is located at world position 6 (see Fig 4), a distance of e.g. 10 on the horizontal axis refers to the world positions 16 and 496. The already trained ensembles walk for one trial of 50 interaction rounds. For each of the four trainings (see legend), the performance of 20 ensembles is considered.

Non-interacting (n.i.) agents trained with dF = 21 perform slightly better at the intermediate distances than agents trained with dF = 4, since they typically travel five steps in a straight line before being randomly reoriented, thereby covering an expected total of 16 positions in one trial (see Sec. 3). Both curves (grey diamonds and orange squares) show a faster decay in this region than the other two cases (dF = 6, 21), which is due to the fact that agents do not walk straight for long distances in these two types of dynamics, since they do not stabilize themselves by aligning.

Agents trained with dF = 21 reach the best performance for longer distances. In particular, their performance is always better than the performance of agents trained with dF = 6, showing that the strategy developed by agents trained with dF = 21, namely strong alignment, is the most efficient one for traveling long distances (distance from patch to patch). Agents trained with dF = 6 do not align as strongly (see local alignment curve in Fig 14) and there are more agents that leave the swarm before reaching the furthest positions (see also Fig 3 in S1 Appendix), which explains the lower performance at intermediate/long distances (the light blue curve (triangles) has a linear decrease that is stronger in this region than the dark blue curve (circles)). Note that the maximum distance reached by agents is 56; this is simply due to the fact that each trial lasts 50 rounds and the initial positions are within C±6 (see Fig 4).

In addition, we study the swarm velocity for the different types of collective motions. To do so, we compute the average net distance traveled per round. Considering that the swarm walks for a fixed number of rounds (50), we define the normalized swarm velocity as,

Fig 16 displays the swarm velocity as a function of the distance dF at which food was placed during the learning process. Agents trained to find distant resources (e.g. dF = 14, 21) are able to cover a distance almost as large as the number of rounds for which they move. However, while the ensembles trained to find nearby resources (e.g. dF = 2, 4) form very cohesive swarms, they are less efficient in terms of net distance traveled per interaction round. We observe that the transition between the two regimes happens at dF = 6 —corresponding to the end of the initialization region—, which is consistent with the transitions observed in Figs 7 and 14 (see discussion in S1 Appendix for more details).

Swarm velocity 〈ξ〉 as a function of the training distance dF.

Each point is the average over the agents of 20 independently trained ensembles that have performed 50 independent trials each.

In this section, we analyze the individual trajectories that result from the different types of swarm dynamics. In order to gather enough statistics, we consider ensembles of agents that have been trained under various conditions, as described above, and let them walk for longer trials so that the individual trajectories are long enough to obtain reliable results. During this process, the agents do not learn anything new anymore; that is, the agents’ ECMs remain as they are at the end of the training. Thus, we study the trajectories that emerge from the behavior at the end of the learning process, which can be interpreted as the behavior developed on the level of a population in order to adapt to given environmental pressures. The individuals’ capacity for learning does not play a role in this analysis.

We focus on the two most representative types of swarms we have observed, i.e. the swarms that emerge from the training with close resources (e.g. dF = 4), characterized by strong cohesion; and the swarms that result from the training with distant resources (e.g. dF = 21), characterized by strong alignment. For easier readability, in the following we will refer to the swarms formed by agents trained with dF = 4 as cohesive swarms, and to the swarms formed by agents trained with dF = 21 as aligned swarms.

In the simulations for this analysis, we let each ensemble of agents perform 105 interaction rounds —where each agent moves one position per interaction round— in a world of size W = 500 and analyze the individual trajectories. An example of such individual trajectories for the case of agents trained with dF = 21 is given in Fig 17. We observe that some agents leave the swarm at certain points; however, due to the ‘closed’ nature of our world model, they have the possibility of rejoining the swarm once it completes a cycle and starts a new turn around the world. Due to these environmental circumstances, the agents exhibit two movement modes: when they are alone and when they are inside the swarm. By looking at Fig 17, one can see how agents exhibit directional persistence when they move within the swarm, since they have learnt to align themselves with their neighbours as a strategy for stabilizing their orientations. However, trajectories become more tortuous as agents leave the swarm and walk on their own. Note that it is only possible for individuals to leave the swarm [47] because of the weaker cohesion exhibited by aligned swarms (see Sec. 3.2.2). This bimodal behavior can occur in nature (see e.g. collective motion and phase polyphenism in locusts [48, 49]), where individuals may benefit from collective alignment, for instance, to travel long distances in an efficient way, but they move independently to better explore nearby resources (see Sec. 3.3 for details on exploration efficiency of the different collective dynamics).

Trajectories of one ensemble of 60 agents that were trained with dF = 21.

The world size is W = 500. Color intensity indicates the number of agents following the same trajectory, i.e. moving within the swarm. Some agents leave the swarm and then rejoin it when the swarm completes the cycle and starts a new turn. Only the first 5000 interaction rounds (of a total of 105) are shown.

In the following sections, we characterize the trajectories and assess how well the agents’ movement patterns fit to well-known foraging models such as Lévy walks or composite correlated random walks.

This work is directly related to foraging theory, since the task we set for the learning process is to find food in different environmental conditions. For this reason, we will analyze our data to determine whether the movement patterns that emerge from this learning process support any of the most prominent search models. For environments with scarce resources (e.g. patchy landscapes), these models are the Lévy walks [22] and the composite correlated random walks (CCRW) [23].

In order to analyze the trajectories and determine which type of walk fits them best, the distribution of step lengths is studied, where a step length is defined as the distance between two consecutive locations of an organism. Intuitively, the optimal strategy for navigating a patchy landscape allows for both an exhaustive exploration inside patches and an efficient displacement between patches, employing some combination of short and long steps. Lévy walks have a distribution of step lengths in which short steps have higher probability of occurrence but arbitrarily long steps can also occur due to its power-law (PL) tail. In two- and three-dimensional scenarios, the direction of motion is taken from a uniform distribution from 0 to 2π, which implies that Lévy walks do not consider directionality in the sense of correlation in direction between consecutive steps [32]. On the other hand, CCRW and the simpler version thereof, composite random walks (CRW), consist of two modes, one intensive and one extensive, which are mathematically described by two different exponential distributions of the step lengths. The intensive mode is characterized by short steps (with large turning angles in 2D) to exploit the patch, whereas the extensive mode —whose distribution has a lower decay rate— is responsible for the inter-patch, straight, fast displacement. CCRW in addition allow for correlations between the directions of successive steps.

Even though the models are conceptually different, the resulting trajectories may be difficult to distinguish [24, 50, 51], even more if the data is incomplete or comes from experiments where animals are difficult to track. In the past years, many works have been published that try to provide techniques to uniquely identify Lévy walks [52–54] and to differentiate between the two main models [24, 55, 56]. For instance, some of the experiments that initially supported the hypothesis that animals perform Lévy walks [25, 26, 57] were later reanalyzed to support the conclusion that more sophisticated statistical techniques are, in general, needed [27, 28, 55, 58]. Apart from that, there exist several studies that relate different models of collective dynamics to the formation of Lévy walk patterns under certain conditions [59, 60]. For instance, it has been shown [61] that Lévy walk movement patterns can arise as a consequence of the interaction between effective leaders and a small group of followers, where none of them has information about the resource.

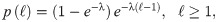

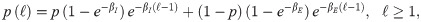

In our study, we consider the three models we have already mentioned (PL, CCRW and CRW), together with Brownian motion (BW) as a baseline for comparison. Since our model is one-dimensional, a distribution of the step lengths is sufficient to model the trajectories we observe, and no additional distributions, such as the turning angle distributions, are needed. In addition, the steps are unambiguously identified: a step has length ℓ if the agent has moved in the same direction for ℓ consecutive interaction rounds. Finally, since space in our model is discretized, we consider the discrete version of each model’s probability density function (PDF). More specifically, the PDFs we consider are,

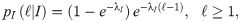

Brownian motion (BW):

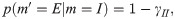

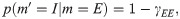

Composite random walk (CRW):

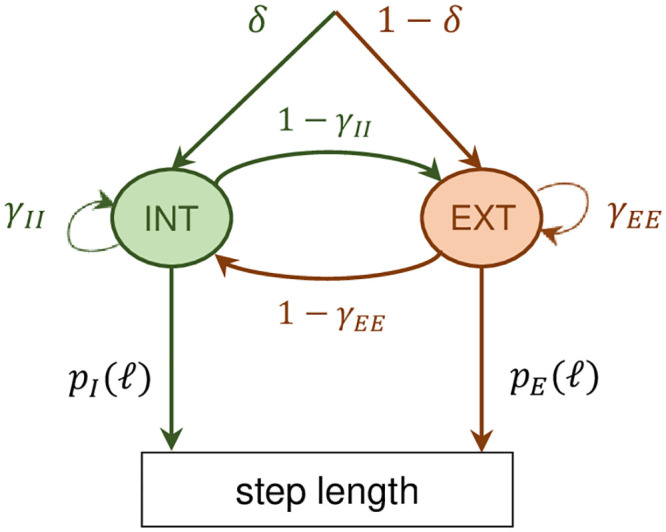

Composite correlated random walk (CCRW):

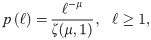

Power-law (PL):

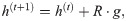

Hidden Markov model for the CCRW.

There are two modes, the intensive and the extensive, with probability distributions given by pI and pE (see text for details). The probability of transition from the intensive (extensive) to the extensive (intensive) mode is given by 1 − γII (1 − γEE), where γII and γEE are the probabilities of remaining in the intensive and extensive mode respectively. δ is the probability of starting in the intensive mode.

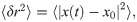

In this section, we study the general characteristics of the trajectories of both types of swarm dynamics. We start by analyzing how diffusive the individual trajectories are depending on whether the agents belong to an ensemble trained with dF = 21 (dynamics of aligned swarms) or dF = 4 (dynamics of cohesive swarms). More specifically, we analyze the mean squared displacement (MSD), defined as,

Fig 19 shows that the dynamics of aligned swarms leads to superdiffusive individual trajectories (ballistic, with α = 2), whereas the trajectories of agents that belong to cohesive swarms exhibit close-to-normal diffusion. The anomalous diffusion (superdiffusion) exhibited by the agents trained with dF = 21 (curve with blue circles in Fig 19) favors the hypothesis that the swarm behavior may induce Lévy-like movement patterns, since Lévy walks are one of the most prominent models describing superdiffusive processes. However, CCRW can also produce superdiffusive trajectories [23, 24]. In contrast, agents trained with dF = 4 do not align with each other and the normal diffusion shown in Fig 19 is indicative of Brownian motion.

Mean squared displacement (MSD).

Log-log (base 2) plot of the MSD as a function of the time interval for two types of trajectories: trajectories performed by agents trained with dF = 21 (blue curve, circles) and by agents trained with dF = 4 (orange curve, triangles). We observe that the former present ballistic diffusion, whereas the latter exhibit close-to-normal diffusion. 600 individual trajectories (10 ensembles of 60 agents) are considered for each case.

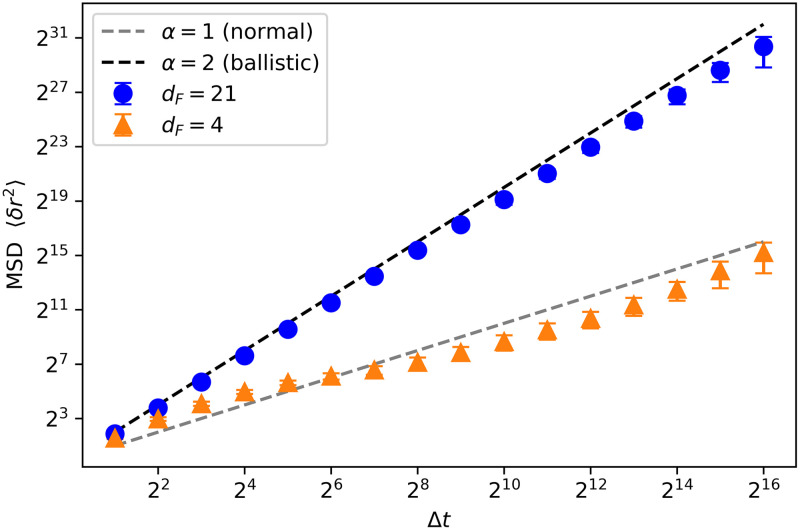

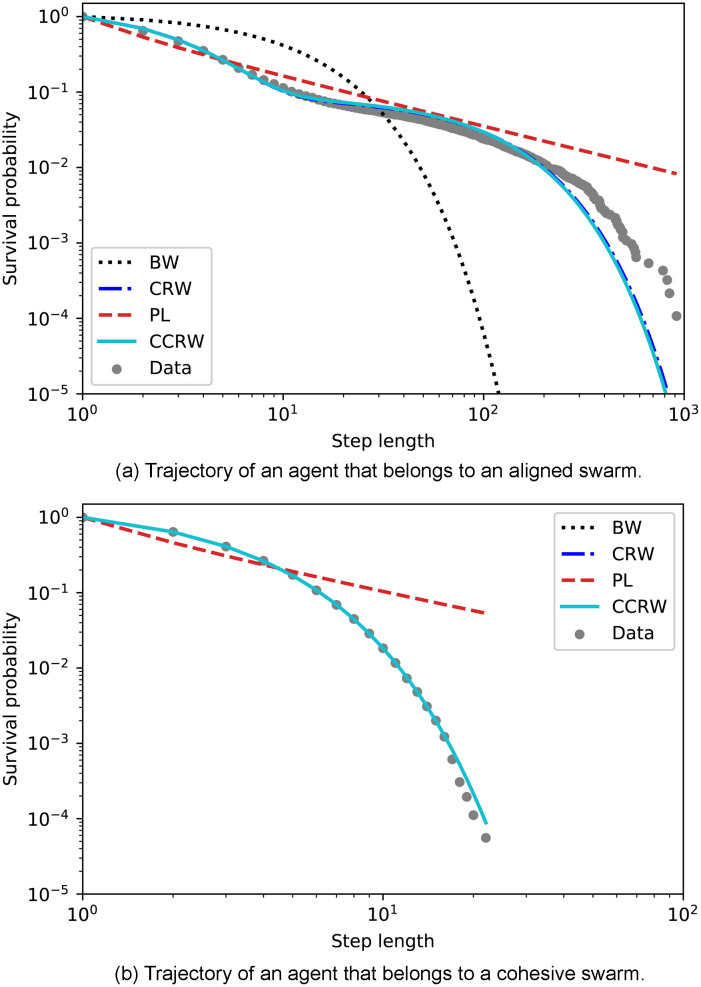

The analysis presented above already shows a major difference between the two types of swarm dynamics but it is in general not sufficient to determine which theoretical model (Lévy walks or CCRW) best fits the data from aligned swarms. According to [24], one possible way to distinguish between composite random walks and Lévy walks is to look at their survival distributions, which is the complement of the cumulative distribution function, giving the fraction of steps longer than a given threshold. Lévy walks would exhibit a linear log-log relationship when this type of distribution is plotted, whereas CCRW exhibit a non-linear relation. Fig 20 compares the survival distributions of two trajectories, one from each type of swarm, to those predicted by the best-fitting models of each of the four classes. The maximum length observed in the dF = 4-trajectory is of the order of 10, whereas in the case of the dF = 21-trajectory, it is one order of magnitude larger. The most prominent features one infers from these figures are that all models except PL seem to fit the data of the dF = 4-trajectory, and that Brownian motion is clearly not a good model to describe the dF = 21-trajectory. In addition, Fig 20(a) is curved and seems to be better fit by the CCRW. However, when other trajectories of agents trained with dF = 21 are plotted in the same way, we see that data seems to better follow the straight line of the PL rather than the CCRW (see for example Fig 4 in S2 Appendix).

Survival probability as a function of the step length.

The survival probability is the percentage of step lengths larger than the corresponding value on the horizontal axis. Each panel depicts the data from the trajectory of one agent picked from (a) aligned swarms and (b) cohesive swarms, so that this figure represents the most frequently observed trajectory for each type of dynamics. The survival distributions of the four candidate models are also plotted. The distributions for each model are obtained considering the maximum likelihood estimation of the corresponding parameters (see Sec. 4.3 for details). The curve for the CCRW model is obtained by an analytic approximation of the probabilities of each step length, given the maximum likelihood estimation of its parameters. Since the order of the sequence of step lengths is not relevant for this plot, we estimate the probabilities of each step length as

While visual inspection may be an intuitive way of assessing model fit, and one that is easy to apply at small scales, it would be preferable to use a method that yields quantitative and objective, repeatable assessments of how well various models fit a given data set. Moreover, we generated 600 individual trajectories per type of swarm, in order to support statistically meaningful conclusions, and at this scale visual inspection quickly becomes infeasible. For this reason, we now turn to a more rigorous statistical analysis of the individual trajectories.

In order to determine which of the mentioned models best fits our data, we perform the following three-step statistical analysis for each individual trajectory: (i) first, we optimize each family of models to get the PDF that most likely fits our data via a maximum likelihood estimation (MLE) of the model parameters. (ii) Then, we compare the four different candidate models among them by means of the Akaike information criterion (AIC) [64] and (iii) finally, we apply an absolute fit test for the best model. We repeat this analysis for agents trained with dF = 4 and dF = 21, yielding a total of 600 individual trajectories per type of training (10 ensembles of aligned swarms and 10 of cohesive swarms, where each ensemble has 60 agents). The simulation of 105 interaction rounds is performed for each ensemble independently. In order to do the statistical analysis, each individual trajectory is divided into steps, which are defined in our case as the distance the agent travels without turning. We obtain sample sizes that range from 4000 to 17000 steps for trajectories of agents trained with dF = 21 and from 20000 to 40000 steps in the case with dF = 4.

The following provides more detail on the analysis, starting with the MLE method, which consists in maximizing the likelihood of each model candidate with respect to its parameters. The likelihood function is generally defined as,

Table 2 shows the MLE parameters we have obtained for each model and for each swarm type. We observe that, in the dF = 21 case, the decay rates of the exponential distributions (

| Model | dF = 21 | dF = 4 | |

|---|---|---|---|

| BW |

| 0.083 ± 0.032 | 0.305 ± 0.052 |

| CRW |

| 0.37 ± 0.15 | 0.6 ± 1.1 |

| 0.0126 ± 0.0069 | 0.302 ± 0.048 | |

| 0.879 ± 0.040 | 0.196 ± 0.059 | |

| PL |

| 1.59 ± 0.10 | 1.657 ± 0.066 |

| CCRW |

| 0.23 ± 0.31 | 0.12 ± 0.13 |

| 0.37 ± 0.15 | 0.36 ± 0.21 | |

| 0.0134 ± 0.0066 | 0.300 ± 0.047 | |

| 0.839 ± 0.078 | 0.749 ± 0.060 | |

| 0.013 ± 0.020 | 0.09 ± 0.26 | |

600 trajectories are analyzed for each type of swarm.

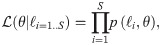

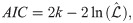

Once the value of the maximum likelihood

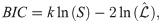

Fig 21 shows the results of the Akaike weights obtained for each of the 600 trajectories analyzed for each type of swarm. In the case of the aligned swarms (Fig 21(a)), we observe that the BW model is discarded in comparison to the other models, since its Akaike weight is zero for all trajectories. 85% of trajectories have Akaike weight of 1 for the CCRW model [66] and 0 for the rest of the models, whereas 14% of trajectories have Akaike weight of 1 for the PL model and 0 for the rest.

Violin plots that represent the Akaike weights obtained for each model.

(a) Akaike weights of trajectories of agents trained with dF = 21 (aligned swarms). (b) Akaike weights of trajectories of agents trained with dF = 4 (cohesive swarms). 600 individual trajectories —per type of swarm— were analyzed for each plot. The ‘•’ symbol represents the median and the vertical lines indicate the range of values in the data sample (e.g. PL model in figure (a) has extreme values of 0 and 1). Shaded regions form a smoothed histogram of the data (e.g. the majority of Akaike weights of the CCRW model in figure (a) have value 1, and there are no values between 0.2 and 0.8). See text for more details.

On the other hand, the cohesive swarms (Fig 21(b)) show high Akaike weights for all models except the PL. 92% of trajectories have Akaike weights 0.87, 0.12 and 0.01 for the BW, CRW and CCRW models, respectively. The remaining 8% of trajectories have wCCRW = 1.

In order to exclude the worry that the CCRW and CRW are chosen even though they have more parameters than the power-law or the BW, we also consider the Bayesian information criterion (BIC), which penalizes more strongly the number of parameters of the model. The BIC value is given by,

Percentage of trajectories that are best fit by each model according to the BIC criterion.

A model is considered to best fit the data of a given trajectory if it has the lowest BIC value and its difference with respect to the rest of the models is larger than 10. 600 individual trajectories —per type of swarm— were analyzed for each histogram.

Fig 22 leads to the same conclusions regarding the aligned swarms, i.e. 85% of the trajectories are best fit by CCRW and 14% are best fit by PL. However, for the cohesive swarms, the BW is the best model to fit the data of 90% of the trajectories according to the BIC criterion. Therefore, we conclude that the dynamics shown by the cohesive swarms lead to individual Brownian motion. Regarding the aligned swarms, our results are in agreement with previous works that claim that “selection pressures give CCRW Lévy walk characteristics” [67]. The majority of individual trajectories are best fit by CCRW with two exponential distributions whose means are

Finally, we study the goodness-of-fit (GOF) of the different models. For models that deal with i.i.d. variables (BW, CRW, PL), it is enough to perform a likelihood ratio test, whose p-value indicates how well the data is fit by the model. Within our framework, a low p-value, namely p < 0.05, means that the model can be rejected as a description for the observed data with a confidence of 95%. The closer p is to 1, the better the model fits the data. In the case of CCRW, a more involved method is needed due to the correlation in the data. Specifically, we first compute the uniform pseudo-residuals (see [62]) and then we perform a Kolmogorov-Smirnov (KS) test to check for uniformity of the mid-pseudo-residuals. Details on the methods used in both GOF tests are given in S2 Appendix. Even though a visual inspection of Fig 20 suggests that the CRW, PL, CCRW models fit the data reasonably well, a quantitative analysis gives p-values of p < 0.01 for most of the trajectories fitted by the BW and PL models. Some trajectories fitted by the CRW model give better fittings, e.g. the best p-values are p = 0.97 and p = 0.36 for a trajectory of an agent trained with dF = 4 and dF = 21, respectively. In the CCRW case, we give the average value of the KS distance obtained in the 600 trajectories, which is DKS = 0.134±0.016 and DKS = 0.189±0.046 for dF = 4 and dF = 21-trajectories respectively (note that a perfect fit gives DKS = 0 and the worst possible fitting gives DKS = 1). More details on the GOF tests and their results are given in S2 Appendix.

A closer inspection reveals that this relatively poor fit is mostly due to irregularities in the tails of the observed distributions. However, more importantly, we note that the trajectories were in fact not drawn from a theoretical distribution chosen for its mathematical simplicity, but result from individual interactions of agents that have learned certain behaviors. In this regard, with respect to the sometimes low goodness-of-fit values, our simulations lead to similar challenges as the analysis of experimental data from real animals (see e.g. [56]). Nonetheless, the above analysis does provide a more robust account of key features of the collective dynamics.

We have studied the collective behavior of artificial learning agents, more precisely PS agents, that arises as they attempt to survive in foraging environments. More specifically, we design different foraging scenarios in one-dimensional worlds in which the resources are either near or far from the region where agents are initialized.

This ansatz differs from existing work in that PS agents allow for a complex, realistic description of the sensory (percepts) and motor (actions) abilities of each individual. In particular, agents can distinguish how other agents within visual range are oriented and if the density of agents is high or low in the front and at the back of their visual area. Based on this information, agents can decide whether to continue moving in their current direction or to turn around and move in the opposite direction. Crucially, there are no fixed interaction rules, which is the main difference that sets our work apart from previous approaches, like the self-propelled particle (SPP) models or other models from statistical physics. Instead, the interactions emerge as a result of the learning process agents perform within a framework of reinforcement learning. The rewards given as part of this learning process play a role analogous to environmental pressures in nature, by enhancing the behaviors that led the agent to be rewarded. Therefore, by varying the task and reward scheme and studying the resulting behaviors, our approach allows us to test different causal explanations for specific observed behaviors, in the sense of environmental pressures proposed to have led to these behaviors.

In this work, we have considered scenarios where the food is situated inside or far from the region where agents are initialized and we have observed that the initially identical agents develop very different individual responses —leading to different collective dynamics— depending on the distance they need to cover to reach the reward (food source). Agents learn to form strongly aligned swarms to get to distant food sources, whereas they learn to form cohesive (but weakly aligned) swarms when the distance to the food source is short.

Since we model each individual as an artificial learning agent, we are able not only to study the collective properties that arise from the given tasks, but also to analyze the individual responses that agents learn and that, in turn, lead to the swarm formation. Thus, we observe for instance that the tendency to align with the neighbors in the dF = 21 case increases with the density of neighbors surrounding the agent. In the case of a training with dF = 4, we observe that the individuals tend to move to the region with higher number of neighbors, which leads to high cohesion at the collective level.

We note that the task faced by our artificial agents, of reaching a food source, is closely related to the behaviors studied in the context of foraging theory. For this reason, we compare the individual trajectories that result from the learning process to the principal theoretical models in that field. We show that most of the individual trajectories resulting from the training with distant resources —which leads to strongly aligned swarms— are best fitted by composite correlated random walks consisting of two modes, one intensive and one extensive, whose mean step lengths are

In contrast, agents that were trained to find nearby resources and follow the dynamics of cohesive swarms present normal-diffusive, Brownian-like trajectories that do not exhibit two movement modes but just one.

One crucial point of this analysis is that our simulated agents move in a multi-agent context and their movement patterns are therefore determined by the swarm dynamics they have developed through the learning process. In particular, we provide a new perspective and additional insight on the studies mentioned above regarding Lévy walks and CCRW, since the individual trajectories that are best fit by these two models arise from a collective motion with very specific features such as strong alignment and decaying cohesion. This, together with the fact that the individual responses emerge as a result of the learning process, provides an example of how trajectories with features that resemble Lévy walks can emerge from individual mechanisms that are not generated by a Lévy walk process. In this sense, our work provides an unusual example to consider within the emergentist versus evolutionary debate on Lévy walks (see e.g. [31, 32]). In particular, our work supports the former view point insofar as the agents do not have built-in interaction rules that come from a certain mathematical model such as that of the Lévy walk, but nevertheless exhibit trajectories that resemble Lévy walks as a result of the swarm dynamics that emerged from certain foraging environmental pressures.

To conclude, we have applied a model of artificial agency (PS) to different foraging scenarios within the framework of collective motion. We have shown that, without any prior hard-wired interaction rules, the same agents develop different individual responses and collective interactions, depending on the distance they need to travel to reach a food source. Agents form strongly aligned swarms to stabilize their trajectories and reach distant resources, whereas they form cohesive, unaligned swarms when the resources are near. In addition, we have shown that Lévy-like trajectories can be obtained from individual responses that do not have a simple theoretical model as the underlying process, but instead are generated and arise from the interplay of a fine-grained set of learned individual responses and the swarm behavior that emerges from them at a collective level.

This work provides a new framework for the study of collective behavior, which supports more detailed and realistic representations of individuals’ sensory and motor abilities and different types of environmental pressures. It would be interesting to apply this approach to the more complex collective behaviors that arise in two- and three-dimensional environments. Furthermore, the PS model allows for a variety of new scenarios to explore in the context of behavioral biology, since different reward schemes can easily be implemented and studied.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48