Competing Interests: The authors have declared that no competing interests exist.

- Altmetric

- Introduction

- Materials and methods

- Literature review

- Proposed method

- Proposed algorithm and results

- Computational results

- Fair run and descriptive statistics

- Results from QA simulation: Initial random tour cost variable

- Results from SA simulation: Initial random tour cost variable

- Conclusion

- Supporting information

Distributed Systems architectures are becoming the standard computational model for processing and transportation of information, especially for Cloud Computing environments. The increase in demand for application processing and data management from enterprise and end-user workloads continues to move from a single-node client-server architecture to a distributed multitier design where data processing and transmission are segregated. Software development must considerer the orchestration required to provision its core components in order to deploy the services efficiently in many independent, loosely coupled—physically and virtually interconnected—data centers spread geographically, across the globe. This network routing challenge can be modeled as a variation of the Travelling Salesman Problem (TSP). This paper proposes a new optimization algorithm for optimum route selection using Algorithmic Information Theory. The Kelly criterion for a Shannon-Bernoulli process is used to generate a reliable quantitative algorithm to find a near optimal solution tour. The algorithm is then verified by comparing the results with benchmark heuristic solutions in 3 test cases. A statistical analysis is designed to measure the significance of the results between the algorithms and the entropy function can be derived from the distribution. The tested results shown an improvement in the solution quality by producing routes with smaller length and time requirements. The quality of the results proves the flexibility of the proposed algorithm for problems with different complexities without relying in nature-inspired models such as Genetic Algorithms, Ant Colony, Cross Entropy, Neural Networks, 2opt and Simulated Annealing. The proposed algorithm can be used by applications to deploy services across large cluster of nodes by making better decision in the route design. The findings in this paper unifies critical areas in Computer Science, Mathematics and Statistics that many researchers have not explored and provided a new interpretation that advances the understanding of the role of entropy in decision problems encoded in Turing Machines.

Introduction

Distributed Information Systems (DS) are growing in popularity across the software industry as it provides more computational and data transmission capacity for applications and become an essential infrastructure that is needed to address the increase in demand for data processing.

DS are used as a cost-efficient way to obtain higher levels of performance by using a cluster of low-capacity machines instead of a unique–single point of failure—large node. A DS is more tolerant to individual machine failures and provides more reliability than a monolithic system.

Parallel computation such as Cloud Computing and High-Performance Computing (HPC) are applications of distributed computing [1].

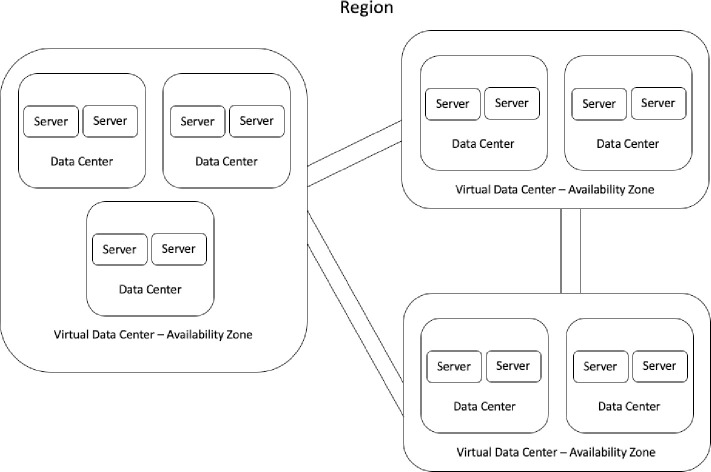

The Cloud Computing market is very consolidated as the cost to deploy, expand and operate a global infrastructure and network is very large. As of 2020 there are 3 major companies: Amazon AWS, Microsoft Azure and Google Cloud Platform. Companies can reduce their IT costs by orchestrating efficiently their workloads across different data centers by their respective weight impact, defined as a utility function with the Euclidian distance between nodes and its respective influence on network latency or the financial utilization time-rate cost for a given set of machines. The Fig 1 illustrate the core components of a cloud computing infrastructure service.

A Virtual Data Center (VDC) has at least 2 physical data centers composed by 2 server nodes.

A combination of 3 or more VDC’s is a geographic Region.

The components of a DS are located in many different machines over a network. The communication and orchestration of process are done by sending and receiving messages. The service exposed are defined by the aggregation of components and its interactions provide the software functionality. Systems such as Service-Oriented Architecture (SOA), peer-to-peer(P2P) and Micro-Services are examples of distributed applications.

Deploying and synchronizing components over many distributed cluster of nodes can be very complex due to multiple variables that can affect the quality of the solution such as network latency between data centers at business hours and at on-demand; cost of renting machines from different Computing Providers; shared servers resources utilization (“noisy neighbor effect”- at both virtual machines and bare metal); valorization of the dollar due to macro and micro economics factors; change in processing time due to model of nodes available for a given time period and operating complexity of the technology stack.

There are many algorithms proposed in the literature to solve the routing-scheduling problem such as 2opt, ant colony (AC), greedy algorithm, genetic algorithm (GA), neural networks, Cross Entropy, and simulated annealing (SA) but very limited work is found using Algorithmic Information Theory to find the boundaries of decision problems in Turing Machines. In this paper we propose a variation of the TSP by defining the decision problem for the candidate solution as a Shannon-Bernoulli process that follows a log-normal distribution for the cost distance variable, defined as a dependable utility function for the TSP.

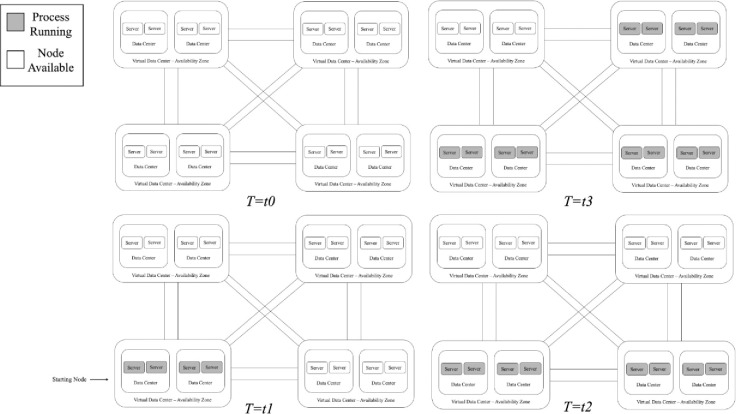

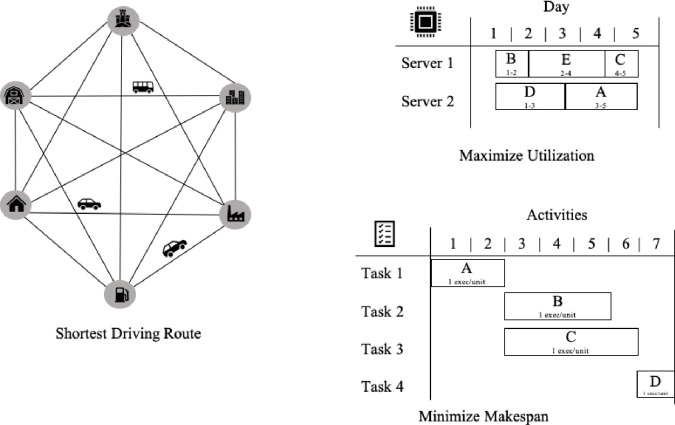

An orchestration job has to deploy efficiently S types of services, process or tasks in many different computing resources such as a cluster of containers or a pool of (physical or virtual) machine nodes connected over a distributed network with different weights (or costs) between each pair of nodes. This job is a process that needs to run on all M (unique) resources points. The cost to use each node can be defined as the round-trip latency between the nodes in the network or the financial cost associated to the proportional quantitative utilization rate for each resource in a given time period. As more Computational Capacity is added, choosing the shortest route to multiple target nodes will be more computationally complex. Fig 2 illustrate a service orchestration across a set of distributed clusters of machines connected over a common network.

Computing provider’s distributed over a network of machines with the respective published service’s and available nodes.

Materials and methods

The traveling salesman problem

was introduced by William Hamilton and Thomas Kirkman. It also known as the messenger problem. The problem asks given a list of cities and the distance between each pair of nodes what is the shortest route that visits all cities exactly once and returns to the original city at the end. There are several researches dedicated to address this routing problem and it has applications in mathematics, computer science, statistics and logistics.

The computational complexity of an algorithm shows how much resources are required to apply an algorithm such as how much time and memory are required by a Turing machine to complete execution and can be interpreted as a measure of difficulty of computing functions. A measurement of computation complexity is the big O notation. It can be defined as: Let two functions f and g such as f(n) is O(g(n)) if there are positive numbers c and N such that

The TSP problem is an important combinatorial optimization problem. As most of the decision problems, it is in the class of NP-hard problems.

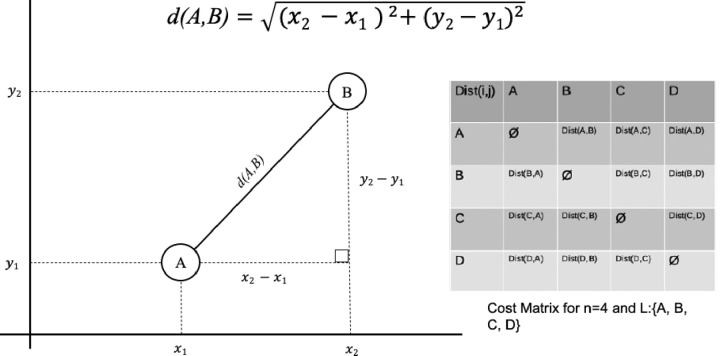

Consider a salesman traveling from city to city and some of them are connected. His goal is to visit each city exactly once and go back to the first city when he finishes. The salesman can choose any path as long as its valid (i.e. visit each city once and finish at the city it has started the tour) he also wants to minimize his cost by taking the shortest route. This problem can be described as a weighted graph G where each city is a node (or vertex) and is connected by a weighted edge only if the two cities are connected by any kind of road, and this road do not cross any other city. The utility function in the TSP is the Euclidian distance. Fig 3 demonstrate the cost matrix between each pair of nodes of a set defined by valid (non-repeating) permutations in a language L with symbols {A, B, C, D}. The cost/weight between points is calculated as a Euclidian distance in a 2D graph.

A sample cost matrix for 4 nodes and the Euclidian distance between each pair of nodes.

Table 1 Demonstrate a sample of valid and invalid strings created from L.

| Word w in Language L | Path Sequence for L: {A, B, C, D, E} | Is Hamiltonian? |

|---|---|---|

| Valid | A > B > C > D > E > A | Yes |

| Valid | D > B > C > A > E > D | Yes |

| Valid | D > B > A > C > E > D | Yes |

| Invalid | A > B > A > D > E > A | No |

The graph can be represented as a matrix where each cell value is defined as the respective cost (distance) w between nodes v and u. For N nodes the distance matrix is defined as D = w(v,u) for all (unique) pair of N. The goal of the TSP is to find a permutation π that minimizes the distance between nodes. For symmetric instances the distance between two nodes in the graph is the same in each direction, forming an undirected graph. For asymmetric instances the weights for the edges between nodes can be dynamic or non-existent.

The weigh value of the edge is defined as the distance of the tour (roads) between cities.

For symmetric TSP, as the number of nodes (or cities) increases in the graph G, the number of possible tours growth choice also increase and is factorial. If we consider N nodes, the function of the input size is

Computational complexity theory

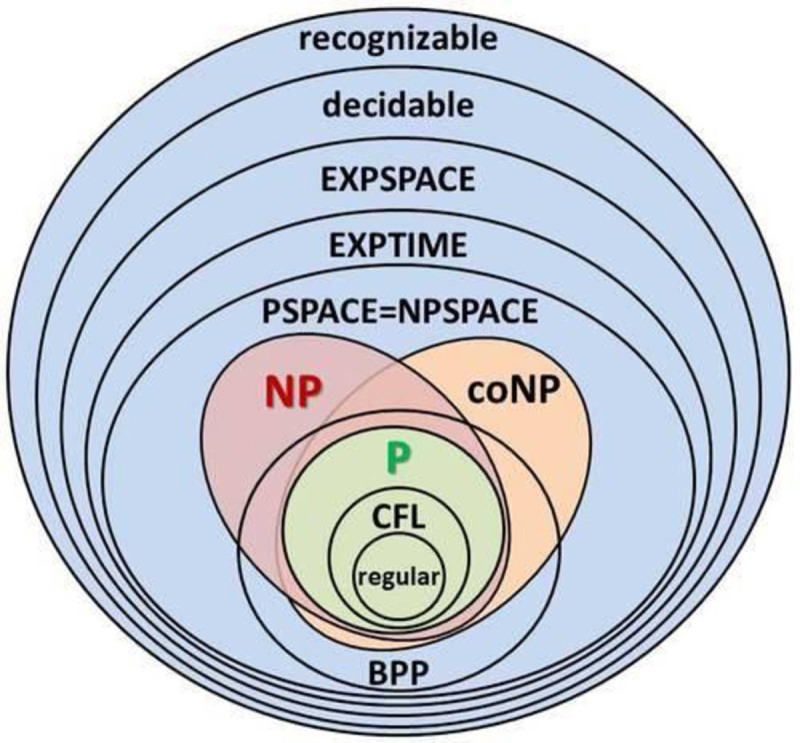

Problems in the NP class can be solved by a non-deterministic polynomial algorithm. Any given class of algorithms such as P, NP, coNP, regular etc. must have a lower bound that index the best performance any problem in the class can have. This bound can be described as the total amount of input items (or symbols) a machine must process before halting, and the respective output items produced following a Probability Distribution Function and a given finite Alphabet. A strategy to find a solution to the decision problem is to find a function that reduce or transform a problem from a domain in which there is no know solution to a constrained domain with a known solution.

This allows the algorithm to search the solution space and decide if any solution is a valid (yes-instances) or invalid (no-instances) and its computable by a polynomial-time algorithm.

This strategy allows us to map instances of the Hamiltonian circle problem to a decision version of the Traveling Salesman Problem and can be described as a decision problem to determine if exists a Hamiltonian circuit in a given complete graph with positive integer weights hose length is not greater than a given positive integer m. Each valid (yes-instance) in the TSP problem is mapped to a valid instance in the Hamiltonian problem space and this transformation can be done in polynomial time.

In Fig 4 reproduced from [2] we have a visual representation of computational complexities categories. The figure demonstrates the groups of Regular, P, coNP, NP, etc class of recognizable problems. In complexity theory, the class P contains all decision problems solvable by a deterministic Turing Machine in polynomial time. The Nondeterministic Polynomial time (NP) class of problems is a category of decision problems that is solvable in polynomial time by a non-deterministic Turing Machine.

Diagram representation for the many categories of computational complexity.

The coNP are the class of problems that have a polynomial-time algorithm mapping for “no-instance” solutions which can be used to verify that the proposed solution is valid but there is no such mapping for “yes-instances”. The P class is a subset of both coNP and NP. The bounded-error probabilistic polynomial time (BPP) is a class of problems solved by a probabilistic Turing Machine in polynomial time with an attached probability distribution function with a given error degree. BPP can be interpreted as the complexity class P with a randomness boundary factor.

Hamiltonian graph

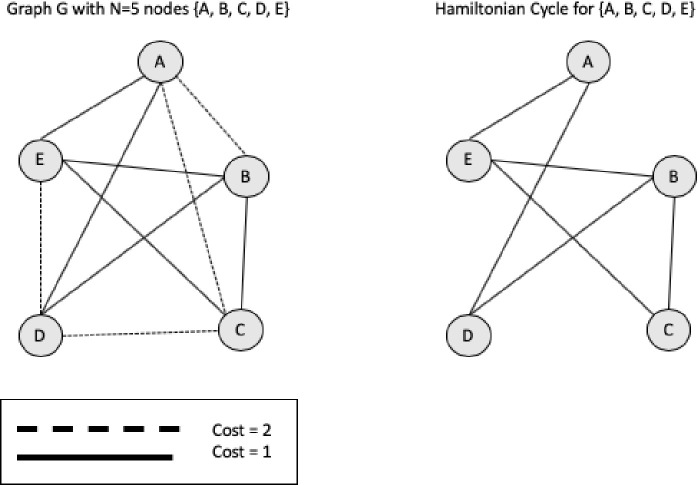

A Hamiltonian cycle (or circuit) can described as a "path" that contains all nodes and the elements in this set are not repeated, with exception to the final vertex. This means that a Hamiltonian cycle in G with start node v has all other nodes exactly once and them finishes at node v. A graph G is Hamiltonian if it has a Hamiltonian cycle. A Hamiltonian cycle with minimum weight is an optimal circuit and therefore is the shortest tour in the TSP Problem.

The Fig 5 provides an example of Hamiltonian circuit for a Graph G with 5 nodes {A, B, C, D, E}. The Table 2 shows the cost matrix for the super graph G.

Hamiltonian cycle from a super graph.

| Cost Matrix | A | B | C | D | E |

|---|---|---|---|---|---|

| A | 0 | 2 | 2 | 1 | 1 |

| B | 2 | 0 | 1 | 1 | 1 |

| C | 2 | 1 | 0 | 2 | 1 |

| D | 1 | 1 | 2 | 0 | 2 |

| E | 1 | 1 | 1 | 2 | 0 |

Although heuristics methods define special cases for the TSP problem and produces near-optimal solutions with short length (weight) Hamiltonian cycles it does not guarantee that the results are the shortest circuit possible. The algorithms to solve the TSP are grouped in 2 categories: exact (Brute-force, greedy) and approximation algorithms (Heuristics such as Simulated Annealing and Genetic Algorithm).

Nature inspired models

Researchers have proposed algorithms inspired by natural events and structures like the heating of metals and the growing behavior of biological organisms. Those methods do not iterate over the entire solution space but rather a portion in order to find the local minimum. They start with an initial random solution and tries to improve the solution quality over each interaction until some input Threshold parameter factor T is reached like a maximum number of interactions; maximum number of candidate solutions; no further improvements found after several iterations; the rate of decay in a dependable temperature probabilistic function or a minimum quality threshold is achieved.

Therefore, heuristics approximation methods can be interpreted as a non-deterministic way to address the error rate between the known solutions and the unknown solutions in polynomial time (i.e. Entropy reduction methods). Although such algorithms do not have to traverse the entire solution space it must decide–“outguess” or “bet”—when a random candidate solution with negative gain will be accepted (i.e. candidate with worst solution quality than current know best solution) in the hopes that eventually it would lead to the shortest distance (i.e. a better solution quality).

Nature-inspired models such as Genetic Algorithms (GA), Ant Colony (AC) and Simulated Annealing (SA) use prior information to improve the solution results and thus are biased towards this encoding. Alternatively, by modeling the TSP problem as a communication channel with a probability density function associated with the stochastic process that generates the solutions at random (following a Bernoulli process), thus we can bound the limits of the search space to a log-normal distribution.

The advantage of this method is that by relying on the statistical analysis of the solution space instead of the computational complexity of the problem we can have equal or better quality than the traditional algorithms without relying on computationally complex implementations that have a higher time and space constrains.

Therefore, this paper attempts to provide an algorithm to solve the TSP using for the decision rule the entropy measured for the solution cost distribution H(X) and by maximizing the expected value of the logarithm of cost/weight/distance variable, defined as the utility function g(X). This is equivalent to maximize the expected geometric growth rate.

Literature review

Solving hard problems

There are 3 categories of algorithms to solve NP-hard problems such as the TSP:

Exact Algorithms: Fast to converge to a solution only form small instances of the problem.

Heuristic Algorithms: Compromise quality but converge with acceptable time requirements. Produce sub-optimal results.

Special cases: Restrict the boundaries of the solution space domain to a subproblem for which there is an exact (or better) solution quality.

Exact Algorithms try all permutations in the domain and verify if each candidate string is the best solution. This algorithm uses a brute-force search method. The running time has a computational complexity of O(n!). As the number of nodes increases the complexity (or running time) increases with a factorial growth.

Combinatorial problems

Other approaches such as Branch and bound algorithms can be used to optimize combinatorial problems. The branch-and-bound method produces lists of candidate solutions by a search in the state space. The set of states forms a graph. Each sub pair of states is connected if the first and the second state are produced by an operator to transform the first state in the second state.

From Poole and Mackworth [3], there are two categories of state space search algorithms:

Uninformed: The algorithm does not have any prior information about the state distribution. The Breadth-First Search is an instance of this class.

Heuristic Search: The algorithm has encoded information about the solution distribution defined by a heuristic function. The A* search algorithm is an instance of this class. The A* is a path finding algorithm and its defined in terms of weighted graphs. It has a running time of O(bd).

Heuristic algorithms

are method for problem-solving using approximations to find suboptimal solutions, under some predefined degree of freedom. There are many heuristics designed to address the TSP as a combinatorial optimization such as genetic algorithms, simulated annealing, tabu search, ant colony optimization, swarm intelligence and cross-entropy method. There are two class of heuristics: Constructive Methods and Iterative Improvement.

Constructive heuristics

Starts with an empty solution and expands the current know partial solution at each execution time unit until the target complete solution is found. In the Nearest neighbor (NN) algorithm, the salesman starts at a random city and at each execution time it visits the nearest city (performing a local move) until all nodes have been visited. The method can be optimized by pre-processing (or filtering) the possible candidate solutions to clusters of best-quality arrangements of node distributions in a 2D graph. This optimization method works as a prefix code property. The method has a worst-case performance of O(N2).

Nearest Neighbor (NN) and Nearest Insertion (NI)

From Asani, Okeyinka and Adebiyi [4] the Convex-hull and Nearest Neighbor heuristics can be combined. Their results where compared with two benchmark algorithms Nearest Neighbor (NN) and Nearest Insertion (NI). Their experimental results show their approach produces better quality in terms of computation speed and shortest distance.

The Christofides and Serdyukov algorithm

Based in graph theory and combines a minimum spanning tree with a minimum-weight perfect matching where the distances between nodes in a super-graph is symmetric and follow the triangle inequality and thus form a metric space. The solution Christofides algorithm has the best worst-case scenario currently know with a quality tour of at most 1.5 the optimal string. The computational complexity is O(n3).

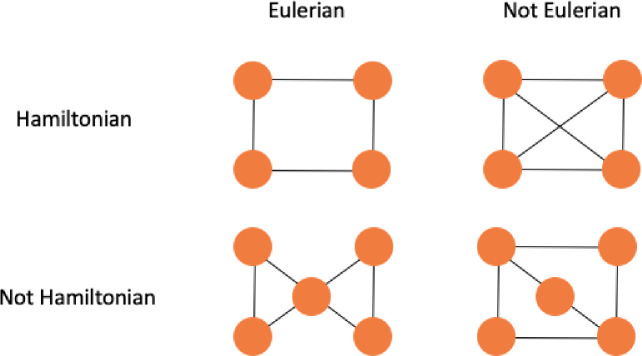

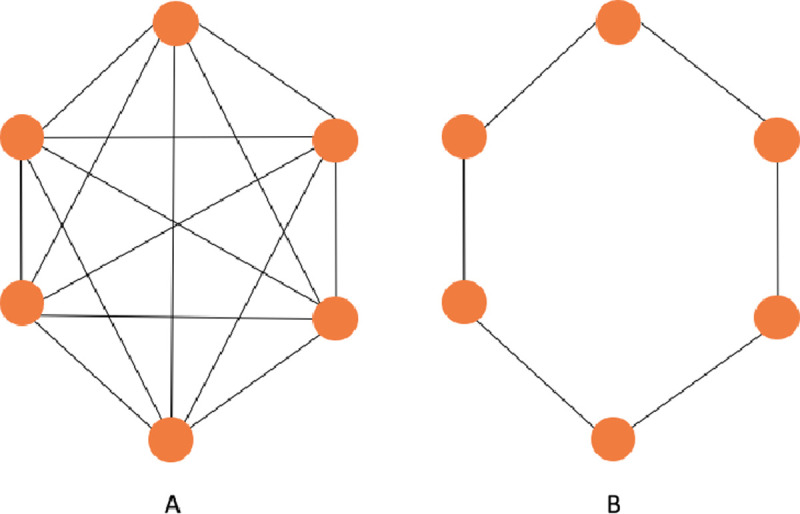

Given a Eulerian path we can find a Eulerian tour in O(n) time. The method finds a minimum spanning tree and duplicate all edges to create a Eulerian Graph. A graph G = (V(G), E(G)) is Eulerian if is both connected and has a closed trail and thus represents a tour with no repeated edges, containing all edges of the graph. In graph theory a Eulerian path is a string in a finite graph that visits every edge exactly once (no repeated edges) and it allows the revisiting of nodes, then returning to the starting vertex. In Fig 6 we have a comparison between Hamiltonian and Eulerian graphs.

Example of Hamiltonian and Eulerian graphs.

The Christofides and Serdyukov algorithm can be described as:

Find the minimum spanning tree

Create a map for the problem with the set of nodes of odd order

Find a Eulerian tour path for the map

Convert to the TSP: If a node is visited twice create a shortcut from the node before the current and next node.

Iterative improvement

Algorithms such as the Pairwise exchange method implemented by the 2-opt algorithm remove two edges at each interaction and reconnected the edges by a new shorter path.

k-opt method

The 2-opt and 3-opt are a special case of the k-opt method. The method can be optimized by a preprocessing using the greedy algorithm. For a random input the average running time complexity is O (n log(n)).

2opt, k-opt

Croes proposed the 2-opt algorithm [5], a simple local-search heuristic, to solve the optimization problem for the TSP. It works by removing two edges from the tour and reconnects the two paths created. The new path is a valid tour since there is only one way to reconnect the paths. The algorithm continues removing and reconnecting until no further improvements can be found. k-opt implementations are instances of 2-opt function but with k > 2 and can lead to small improvements in solution quality. However, as k increases so does the time to complete execution.

In his work [6] proposed the Tabu Search method and it can be used to improve the performance of several local-search heuristics such as 2opt. As neighborhood searches algorithms like 2opt can sometimes converge to a local optimum, the Tabu search keeps a list of illegal moves to prevent solutions that provide negative gain to be chosen frequently. In 2opt the two edges removed are inserted in the Tabu list. If the same pair of edges are created again by the 2opt move, they are considered Tabu. The pair is kept in the list until its pruned or it improves the best tour. However, using Tabu searches increases computational complexity to O(n3), as additional computation is required to insert and evaluate the elements in the list.

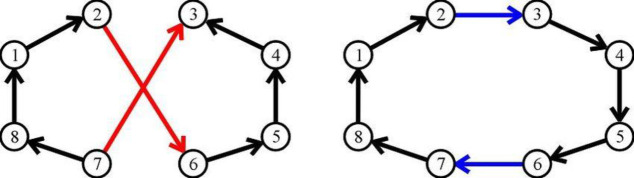

The Fig 7 show the 2-opt moves from [7].

Generating 2-opt moves.

[8] compared several heuristic strategies for the TSP problem such as Greedy, Insertion, SA, GA, etc. He investigated the performance tradeoff between solution quality and computational time. He classifies the heuristics in two class: Tour construction algorithms and Tour Improvement algorithms. All algorithms in the first group stops when a solution is found such as brute-force and Greedy Algorithm. In the second group, after a solution is found by some heuristics, it tries to improve that solution (up to a certain computation and/or time constraints) such as implemented by 2opt, Genetic Algorithm and Simulated Annealing. He concluded by showing that the computational time required is proportional to the desired solution quality.

Simulated Annealing (SA)

Simulated Annealing are heuristics with explicit rules to avoid local minimal. It can be described as a local random search that temporarily accepts moves with negative gain (i.e. were produced by solutions with worst quality than current). These methods simulate the behavior of the cooling process of metals into a minimum energy crystalline structure.

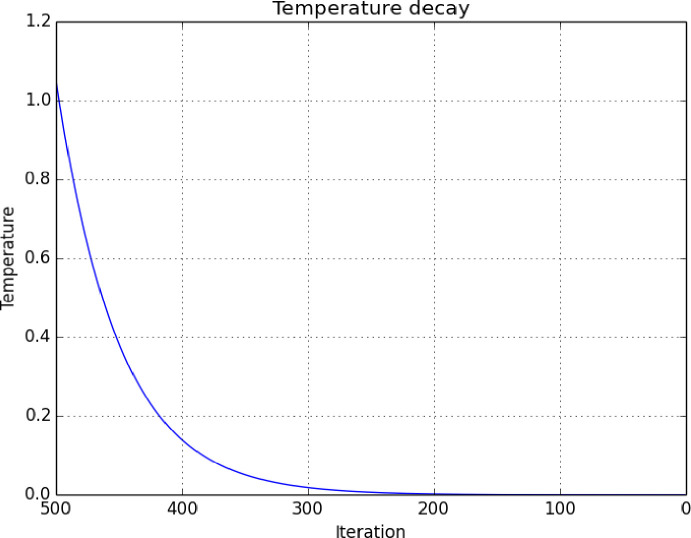

This concept is analogous to the search of global maximum and minimum. The probability of accepting a solution is set by a dependable probability function of a temperature parameter variable t. As the temperature decreases over time the probability changes accordingly. Fig 8 demonstrates the simulated decay in the temperature function over the number of interactions in an algorithm.

Temperature decay relative to the iterations of the SA algorithm.

The acceptance probability is defined as p(x) = 1 if f (y) ≤ f (x) and when otherwise

[9] research combines the Simulated Annealing method with the Gene Expression Programing to improve solution diversification and state search. Their results show a better performance that other methods such as ant-colony, naive SA, naive GA, etc.

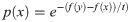

The SA algorithm specifies the neighborhood structure and the cooling function.

Fig 9 from [9] represents the SA algorithm flowchart.

Simulated annealing algorithm flowchart.

[10] research explores the optimization technique in Simulated Annealing and the solution impact by different temperature schedule designs. It concludes that the quality of finite time SA implementations is a result of relaxations dynamics similar to the analogy with the vitrification process of amorphous materials (non-crystalline) or disordered systems (high entropy).

Their results can be generalized in terms of entropy distributions. By the thermodynamics analogy, we know the position of particles in higher temperatures have a higher degree of uncertainty and thus there is more entropy as each particle is bouncing randomly. As the temperature decreases the particles forms bounds between them and the overall state distribution forms a glassy or crystal structure. As there is less uncertainty about the average state value for each particle there is less entropy and thus more information about the optimal state distribution is known.

[11] work provide a numerical analysis of the simulated annealing applied to the TSP. The cost distribution is compared to the control parameter of the cooling method. It concludes that the average-case performance can be defined by assuming the deviation between the final total cost and the optimal solution is distributed by a gamma distribution. This behavior is also observed in our research and this model is explained by the Kolmogorov Complexity for the Bernoulli string that represents a random solution candidate. The entropy for this distribution can be calculated and is the maximum entropy probability distribution. This is the sufficient statistics needed to represent the state set.

Metropolis algorithm and heuristic optimizations

Let f(X) be a function with output proportional to a given target distribution function r. The function r is the proposal density. At each iteration of the algorithm it attempts to move around the sample space. For each move it decides sometimes to accept a given random solution or stay in place. The probability of the solution of the new proposed candidate is with respect to the current know best solution. If the proposed solution is more probable than the know existing point, we automatically accept the new move. Else if the new proposed solution is less probable, we will sometimes reject the move and the more the decrease the probability, less likely we will accept the new move. Most of the values returned will be around the P(X) but eventually solutions with lower probability will be accepted. This characterizes can be interpreted as a generalization of the methods proposed by Simulated Annealing and Genetic Algorithms.

Other heuristics such as 2-opt, 3-opt, inverse, swap methods can be used to generate candidate solutions. Several researches such as [8] have been made to study the performance of different SA operators to solve the TSP problem [12]. proposed a list-based SA algorithm using a list-based cooling method to dynamically adjust the temperature decreasing rate. This adaptive approach is more robust to changes in the input parameters. Their research provide an improved Simulated Annealing method that uses a dynamic list to simplify the parameter settings. This method is used to control the cooling rate for the decrease temperature used by the Metropolis Rule. The list is updated iteratively following the solution space topology. This cooling schedule can be defined as a special geometric cooling method with variable coefficients. This characteristic gives the algorithm more resistance to different input parameters values while producing good sub-optimal results.

In his work [13] proposed a biological inspired bee system to optimize the routing in Railway systems. They conclude that the average solution results are better or equivalent than the traditional SA and GA methods.

The quality of the solution can be improved by allowing more time for the algorithm to run. [14] observed that the performance of 2-opt and 3-opt algorithms can be improved by keeping an ordered list of the closest neighbors for each city-node and thus reducing the amount of solutions to search but requiring more memory to keep the list of states.

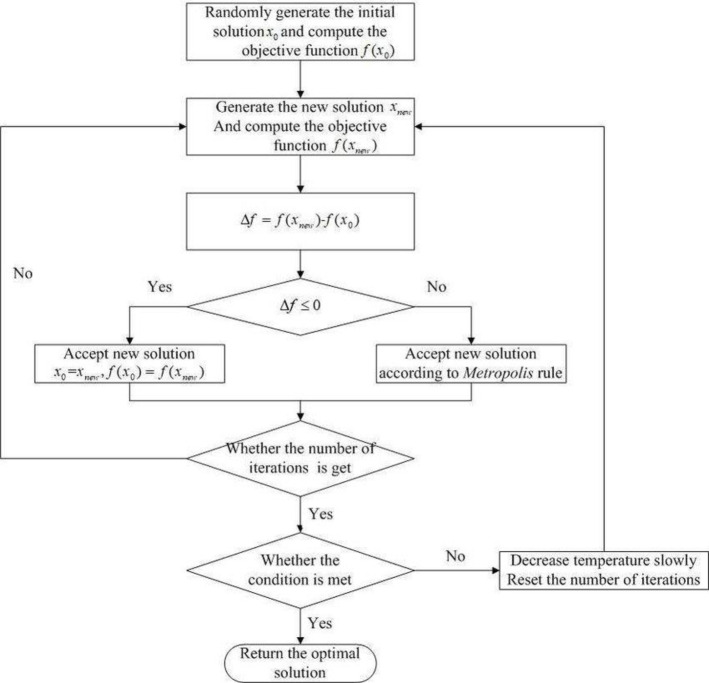

Genetic Algorithm (GA)

Genetic Algorithms was first introduced by [15] based on natural selection theory, as a stochastic optimization method in random searches for good (near-optimal) solutions. This approach is analogous to the "survival of the fittest" principle presented by Darwin. This means that individuals that are fitter to the environment are more likely to survive and pass their genetic information features to the next generation.

In TSP the chromosome that models a solution is represented by a "path" in the graph between cities. GA has three basic operations: Selection, Crossover and Mutation. In the Selection method the candidate individuals are chosen for the production of the next generation by following some fittest function In the TSP This function can be defined as the length (weight) of the candidate solutions tour. In Fig 10 we have a representation of genes and chromosomes.

Chromosome for a sample of individual candidate solutions.

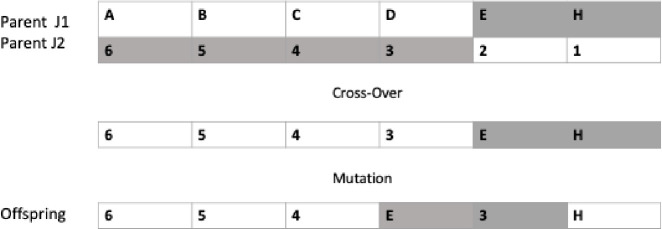

In Fig 11 is demonstrated an example of two parents under the Mutation and Crossover operators to generate a new offspring.

Offspring representation for the genetic algorithm mutation and crossover operators.

Next those individuals are chosen to mate (reproduction) to produce the new offspring. Individuals that produce better solutions are more fit and therefore have more chances of having offspring. However, individuals that produces worst solutions should not be discarded since they have a probability to improve solution in the future. In other words, the heuristic accepts solutions with negative gain hoping that eventually it may lead to a better solution.

Several researches have studied the performance trade off of selection strategy and how the input parameters affect the quality of solution and the computational time [16]. in his work explores different selection strategies to solve the TSP and compare the performances quality and the number of generations required. It concludes that tournament selection is more appropriate for small instance problems and rank-based roulette wheel can be used to solve large size problems.

[17] compared the quality of the solution and the convergence time on many selection methods such as proportional, tournament and ranking. They conclude that ranking and tournament have produced better results that proportional selection, under certain conditions to convergence. In his work [18] explored proportional roulette wheel and tournament method. He concluded tournament selection is more efficient than proportional roulette selection. Their work presents a simple genetic algorithm that combines roulette wheel and tournament selection. Their results suggest that this approach converges faster than roulette wheel selection.

This conclusion is related to the findings in our research and can be explained by Information Theory. The tournament selections mechanism helps to avoid the algorithm to waste execution iterations with solutions that have more noise (i.e. local minimum candidates–suboptimal solutions) and also by providing more useful information by giving a chance for all candidate to eventually produce optimal solutions, through the roulette wheel method.

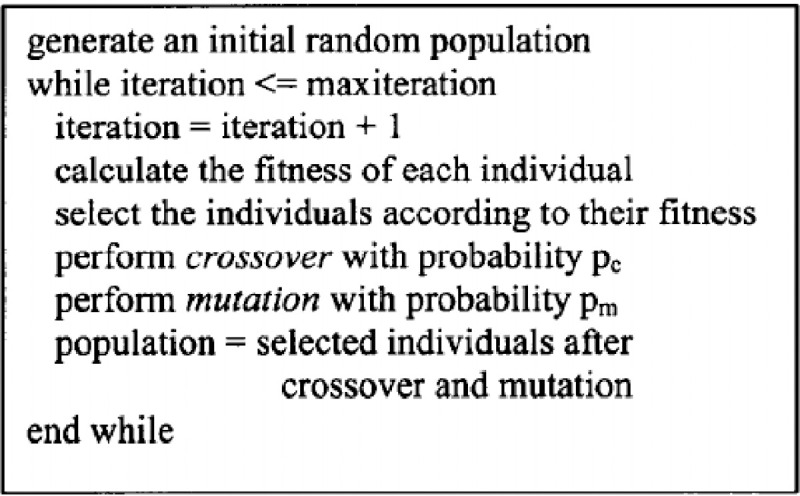

The Fig 12 contains the pseudo-code for a Genetic Algorithm from [19].

Basic genetic algorithm.

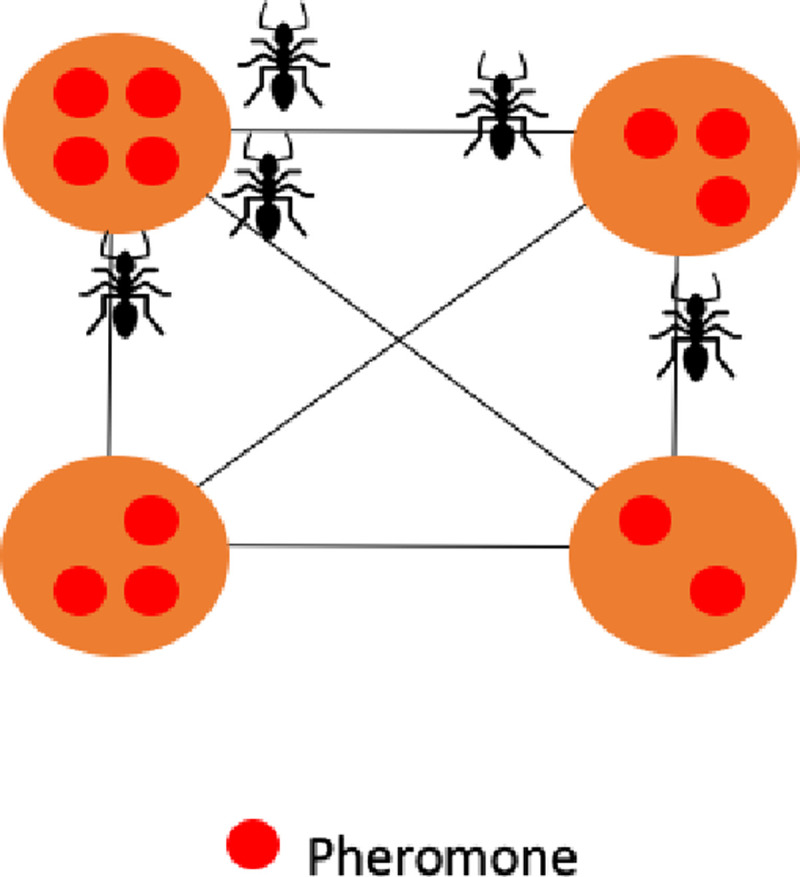

Ant Colony (AC) optimization

Ant colony (AC) optimization is a method that models the behavior of ants to find the shortest route in the nest. The choice for a given path by each ant is defined by the distribution of pheromones left by other ants when in transit.

The method is described as:

Initialize: Create initial distribution of pheromone in the region

For i from (0, n)

For each ant

Evaluate the objective function Update know best solution tour Update pheromone intensity distribution Simulate decay pheromone intensity

Stop Criterion Achieved?

Yes: Local minimum found Continue search until maximum iteration n is reached.

In the work “Ant supervised by PSO and 2-Opt algorithm, AS-PSO-2Opt, applied to Traveling Salesman Problem” from Kefi, Rokbani, Krömer and Alimi [20] there is a optimization of the 2-opt method using a post-processing for the solution paths to help avoid local minimum. Their results perform better than other benchmark algorithms such as Genetic Algorithm and Neural Networks.

In Fig 13 we have a representation of ant nest and pheromone distribution across a 2D region.

Ant colony pheromone representation.

Cross-entropy (CE) method

Is a combinatorial optimization method with noise. The method approximates the optimal utility function estimator with two targets:

Create a sample from a probability density function.

Minimize the cross-entropy between the distribution and the density function to improve the candidate solution quality for the next iteration.

The CE algorithm can be described as:

Init: Set parameter μ as average and σ as the standard deviation

For i in (0, N)

Create random sample S with size n using a normal distribution N (μ, σ) with parameters μ and σ Evaluate the Noise (entropy) distribution for the sample S Select the best Z% candidates of the solution sample to form a new subset T ⊆ S Update μ and σ with the probability distribution function of T, assuming a normal distribution

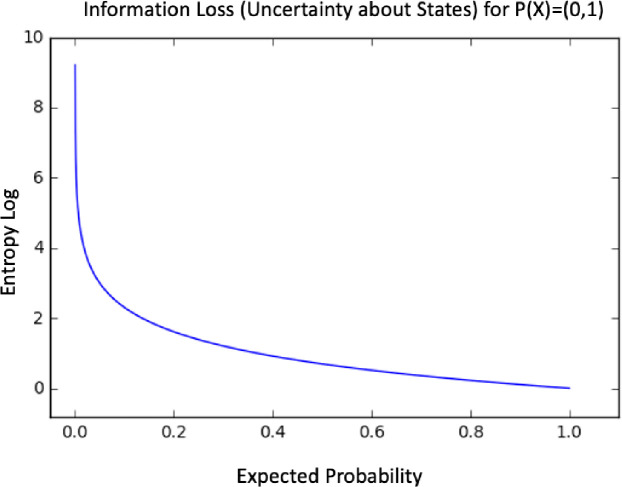

The algorithm works by reducing the entropy using a maximum like hood estimator P(X). In Fig 14 we have a representation for the entropy function P(X).

Entropy decrease process.

In “Solve Constrained Minimum Spanning Tree By cross-entropy (CE) method” [21] propose a parallel, randomized method to find a spanning tree using the lowest total cost relative to the cost weight under constrained weight boundaries.

Special cases are a class of algorithms that restricts the limits in the problem to find the optimal solution inside a give boundary. A metric TSP defines the distance between nodes under the triangle inequality and replaces the Real value Euclidian for the Manhattan distance. The Euclidian TSP is a special case for the metric TSP using integer numbers for the Euclidian distance.

The Euclidian TSP has a Euclidian minimum spanning tree associated with the minimum spanning tree of the graph and has the expected running time complexity O(n log n).

Proposed method

Background theory review

Information Theory (IT)

Quantifies the amount of information in a noisy communication channel and is measured in bits of entropy. IT is based in probability theory and statistical distributions. Entropy quantifies the amount of uncertainty in a random Bernoulli variable created by a Bernoulli process thus information can be interpreted as a reduction in the overall uncertainty about a set of finite states. Mutual information is a measure of common information between two random variables and it can be used to maximize the amount of information shared between encoded (sent) and decoded (received) signals. In Table 3 we have the relationship between Information and Entropy. As we increase our knowledge about the states following a probabilistic function distribution, we reduce entropy, as there is less uncertainty about possible state outcomes.

| Information | Entropy | Word | P (X = 0) | P (X = 1) | E(X) |

|---|---|---|---|---|---|

| High | Low | 0000 | 1 | 0 | 1*1*1*1 = 1 |

| Medium | Medium | 0001 | 0.75 | 0.25 | 0.75*0.75*0.75*0.25 = 0.105 |

| Low | High | 0011 | 0.5 | 0.5 | 0.5*0.5*0.5*0.5 = 0.0625 |

Information theory as an approximation method

Information Theory has applications in a range of fields and is used as a mathematical framework for encoding and decoding of information such as in adaptive systems, artificial intelligence, complex systems, network theory, coding theory, etc. IT quantifies the number of bits required to describe a given a set of states using a statistical distribution function for the input data.

Entropy of a random sequence

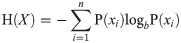

Entropy is a measure of uncertainty of a random variable. It is the average rate at which information is produced by a stochastic process [22]. defined the entropy H as a discrete random variable X with possible values as outcomes draw from a probability density function P(X). Fig 15 demonstrate the variation in entropy H(X) vs a Bernoulli distribution. In Eq 4 the entropy function is defined as:

Entropy H(X) vs Probality Pr(X = 1).

Random variables and utility function

Let X be an independent random variable with alphabet L: {001, 010, 100–}. A utility function g is used to model worth or value and is defined by g: {X ⊂ ℝ} and it represents a preference of relation between states. The utility function Y = g(X) of a random variable X express the preference of a given order of possible values of X. This order can be a logical evaluation of the value against a given threshold or constant parameter. The g(X) is defined by a normal distribution with given mean and variance under some degrees of freedom (i.e. confidence level).

As an example if g(X1 = 001 = 1) = c1 and g(X2 = 010 = 2) = c2 are the costs of two routes between a set of nodes in a super-graph G*, we can use this function to determine the arithmetical and logical relationship between them and decide if c1 is worst, better, less, greater or equal to c2. Therefore g(X1) < g(X2). The probability density function pdf(Y) can be used to calculate the entropy of the distribution of the cost values. An exponential utility is a special case used to model when uncertainty (or risks) in the outcome between binary states and in this case the expected utility function is maximized depending on the degree of risk preference.

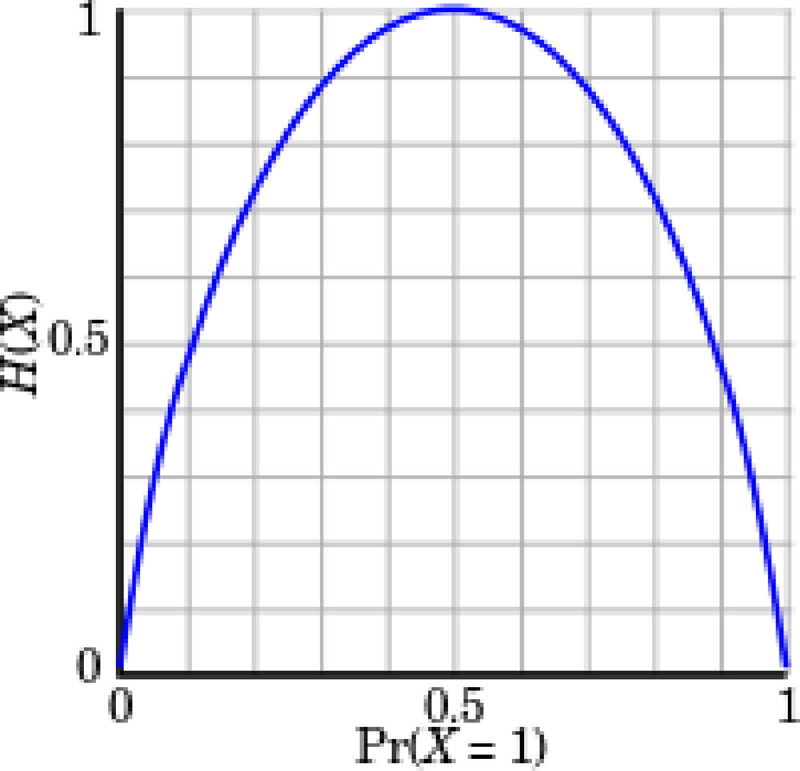

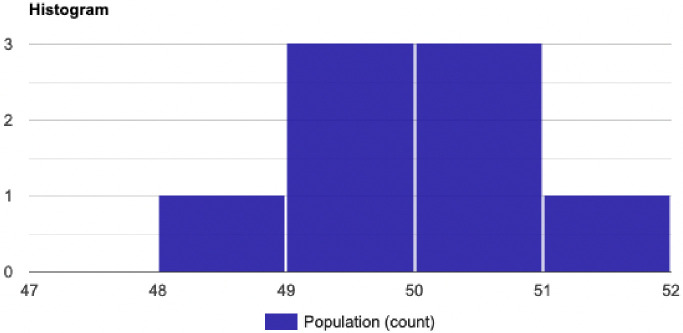

Fig 17 demonstrate an example of a normal distribution for cost function g(X) = {50, 51, 49, 49.3, 50.5, 50.3, 49.1, 48} and the probability P(X<48). Fig 16 shows the histogram for g(X). Table 4 demonstrate the calculation for the mean, standard deviation and variance for g(X), thus we have:

The probability density function for cost function g(X).

The normal distribution with mean 49.65 and standard deviation 0.91.

Then P (X<48; X < min g(X)) = 0.0349.

| Parameters | Output |

|---|---|

| Standard Deviation, σ | 0.91241437954473 |

| Count, N | 8 |

| Sum, Σx | 397.2 |

| Mean, μ | 49.65 |

| Variance, σ2 | 0.8325 |

Kolmogorov complexity

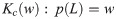

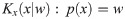

The Kolmogorov complexity [23] of a string w from language L denoted by

Complexity of a string and shortest description length

Let U be a Universal computer (i.e. Universal Turing Machine). If description d(x) is the minimal string to encode x. Kolmogorov complexity Kc(x) of a string x of a computer U is

Measuring the randomness of a string

Let Kc (x|y): The conditional Kolmogorov complexity of Xn given Y. Consider for example we want to find the binary string with higher complexity between three variables X1(010101010101010), X2(0111011000101110) and Y (01110110001011). In Table 5 we have the representation and minimal encoding using Xn and Y. Therefore, we can see X1 and X2 can be encoded as combinations of Y and thus Kc(X1|Y) > Kc (X2| Y). This relationship is defined as

| Variable | Binary Sequence | Minimal String Representation Schema |

|---|---|---|

| Y | 01110110001011 | Substring |

| X1 | 010101010101010 | 01 (#7 pairs of bits) + 0 (#1 single bit) |

| X2 | 011101100010110 | Y + 0 |

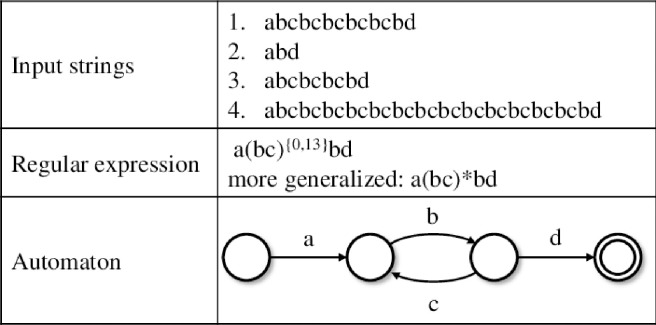

In Fig 18 from [24] we can see a comparison between a series of strings and the correspondent automata state machine and the regular expression patterns (i.e. regex schema).

Examples of representations of a given input string set using regex and an automaton.

The expected value of the Kolmogorov complexity of a random sequence is close to the Shannon entropy. From [25] this relationship between complexity and entropy can be described as a stochastic process drawn to a i.i.d on variable X following a probability mass function pdf(x). The symbol x in variable X is defined by a finite alphabet L. This expectation is

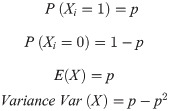

Kelly criterion and the uncertainty in random outcomes

The Kelly strategy is a function for optimal size of an allocation in a channel. It calculates the percentage of a resource that should be allocated for a given random process. It was created by John Kelly [26] to measure signal noise in a network. The bit can be interpreted as the amount of entropy in an expected event with two possible binary state outcome and even odds. This model maximizes the expectation of the logarithm of total resource value rather than the expected improvement for the utility function from each trial, in each clock unit iteration in a Turing Machine.

The Kelly criterion has applications in gambling and investments in the securities market [27]. In those special cases, the resource (communication) channel is the gambler’s financial capital wealth and the fraction is the optimal bet size. The gambler wants to reduce the risk of ruin and maximize the growth rate of his capital. This value is found by maximizing the expected value of logarithm of wealth which is equal to maximize the expected geometric growth rate.

Similarly, the log-normal Salesman’s can improve his strategy in the long run by quantifying the total of available inside information in the channel (or a tape in the Turing machine) and maximizing the expected value of the logarithm of the value function (defined by Traveled Euclidean distance) for each execution clock. Using this approach, he can reduce his uncertainty (entropy H(X)) while optimizing his rate of distance reduction (and solution quality improvement) at each execution time.

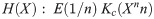

The Fig 19 demonstrate the Kelly criterion value over the Expected Growth Rate.

Maximization of entropy in random events.

Kelly uncertainty distribution

Let E(Y) be the expected value of random variable Y, H(Y) be the measured entropy for the pdf(Y) distribution and K*(E(Y),H(Y)) = f* be the maximization of the expected value of the logarithm of the entropy of the utility function Y = g(X). This fraction is known as the Kelly criterion and can be understood as the level of uncertainty about a given data distribution of the random variable X relative to a probability density function pdf(Y) of a measured respective cost distribution found at the sample. It’s a measurement of the amount of useful encoded information.

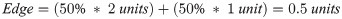

The value of f* is a fraction of the cost-value of g(X) on an outcome that occurs with probability p and odds b. Let the probability of finding a value which improves g(X) be p and in this case the resulting improvement is equal to 1 cost-unit plus the fraction f, (1+f). The probability of decreasing quality for Y is (1-p).

The maximization of the expected value f* is defined by the Kelly criterion formula in Eq 12

For example, consider a program with a 60% chance of improving the utility function g(X) thus p = 0.6 (success) and q = 0.4 (failure). Consider the program has a 1-to-1 odds of finding a sequence which improves g(X) and thus b = 1(1 quality-unit increase divided by 1 quality-unit decrease). For these parameters the program has a 20%(f* = 0.20) of certainty that the outcomes produce values that improve the expected value of g(X) over many trials.

Consider another sample case for a fair coin with probability of success (winning) P (1) = 0.5(50%) and failure(losing) P (0) = 0.5(50%). Table 6 demonstrate the amount improved (+) and worsen (-) for each scenario:

| Probability | Output | Value |

|---|---|---|

| Success (1) | Gain (True) | + 2 units |

| Failure (0) | Lose (False) | - 1 unit |

The Total Expected Outcome, Edge, is defined by:

Proposed algorithm and results

Quantitative algorithmic information Theory

The algorithm is designed to find the near optimal route in a cluster of machine-nodes before returning to the original point. This problem is a variation of TSP. Tour improvement heuristics algorithms such as 2opt and Simulated Annealing (SA) are used as a benchmark for the proposed Quantitative Algorithm (QA). 3 test cases are used to analyses the solutions generated by each algorithm. The 2opt algorithms produces solutions with smaller total distance but required more time units as the number of nodes increase. SA and QA have a maximum number of allowed interactions, but QA produces better solution quality than SA for the same time period.

The test samples are grouped in 10, 30 and 50 nodes. Each point represents a machine in a data center (i.e. computing and network provider) that can deploy a given service S. The distance cost in this case is the illustrative round-trip network and processing delay. This weight is the length of time to send a signal s*(t0) plus the time to reply acknowledging of that the signal was received with another message s*ack (t1).

To avoid bias and miss interpretation in the research, the first tour loaded in the computer memory is randomly flushed using a statistical function in Python programming language. The function swaps all elements (using a normal distribution) of the initial tour list, created after reading the list of input nodes.

The solutions found from SA and QA heuristics algorithms were analyzed for accuracy and reliability of the output. We have compared the required time and solution quality between the methods. Each algorithm was measured with a trial with sample length N = 60 for 3 test cases.

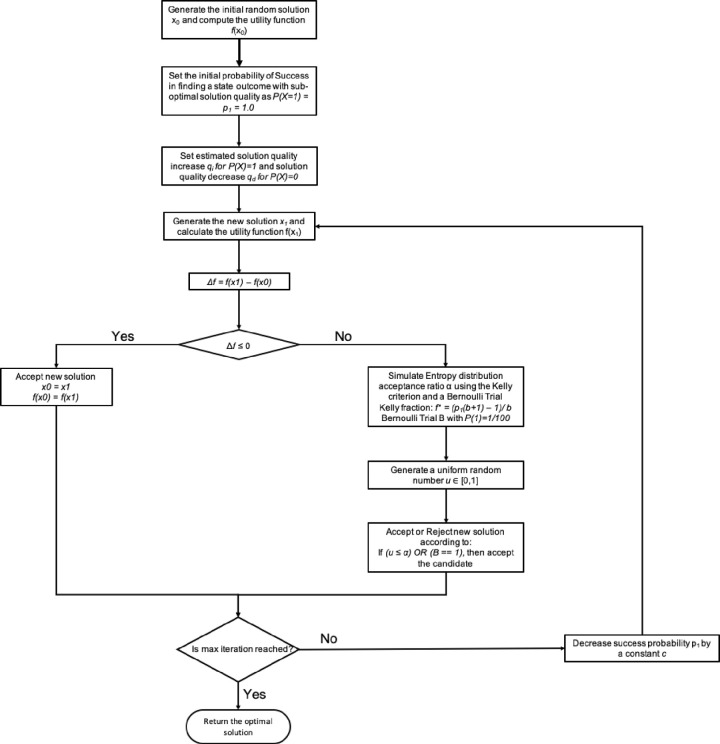

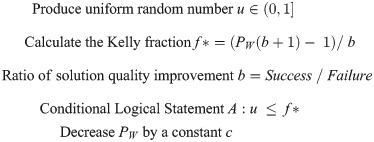

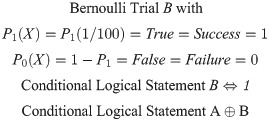

Quantitative heuristic

In Fig 20 we have the flowchart design for the Quantitative TSP Algorithm (QA). The constraints for the Kelly criterion and the Bernoulli process are presented in Figs 21 and 23. The pseudo-code for the proposed Quantitative Algorithm (QA) is describe in the Table 7.

Flowchart for the Quantitative TSP algorithm based in information theory.

| Quantitative Algorithm for TSP 1. Generate the initial random solution x0 and compute the utility function f(x0) 2. Set the initial probability of Success in finding a state outcome with sub-optimal solution quality as 1. P(X = 1) = p1 = 1.0 2. and the Failure probability defined as 1. P(X = 0) = q = p0 = 1—p1 3. Set estimated solution quality increase qi for P(X) = 1 4. Set estimated solution quality decrease qd for P(X) = 0 5. Set the net fractional odds ratio as b = qi / qd 6. For i in range (0, M) 1. Generate the new solution x1 and calculate the utility function f(x1) 2. Δf = f(x1)–f(x0) 3. If Δf ≤ 0 then 1. Accept new solution 2. x0 = x1 3. f(x0) = f(x1) 4. Else 1. Simulate Entropy distribution acceptance ratio α using the Kelly criterion and a Bernoulli Trial 1. Kelly fraction α: f* = (p1(b+1)– 1)/ b 2. Bernoulli Trial B with P(1) = 1/100 to work as a random binary switch 2. Generate a uniform random number u ∈ [0,1] 3. Accept or Reject new solution according to: 1. If (u ≤ α) OR (B = = 1), then accept the candidate 1. Set x0 = x1 2. f(x0) = f(x1) 2. Else then reject the candidate solution 3. Decrease success probability p1 by a constant c 1. p1 = p1–c |

Algorithmic information model

The two major components are the simulated Kelly fraction f* (describing the overall uncertainty spread) and the Bernoulli process distribution of the underlining random event between states (estimated as the mean and standard variance for the weight function for each solution). The combination of those factors will be evaluated to decide the start of a neighborhood search (following a Probability density function) when the new alternative solution has a negative gain (i.e. new proposed solution is worse than current best-known encoded candidate).

Solutions to the TSP routing problem are explored by algorithms such as 2opt, Simulated Annealing (SA), Greedy and Genetic Algorithm (GA). In this paper we proposed a Quantitative Algorithm (QA) that does not rely on naturally inspired schemas but rather provides a statistical interpretation as a distribution of signals by a stochastic log normal process.

This stochastic process is defined as an ordered list of random variables {Xn} for a given trial of length N. N is a set of non-negative integers and Xn is defined as a target measurement for a specific instance of time.

The utility function is used to find the near optimal route that have the minimum traveling distance to multiple target node destination while returning to the starting node at the end. There are 2 constraints to be considered in the model presented in this paper: Simulated Entropy Uncertainty and Bernoulli Process.

Method Constraint 1: Entropy simulation and uncertainty measurement

Simulated Uncertainty. The first constraint is limited by the entropy. The input parameters for the Kelly function f* are the Wining probability PW and the expected net-odds b for the Bernoulli trial B. The value of PW is decreased by a fixed constant rate of 0.0001 at each interaction. The value b is measured as the ratio of average improvements of the positive interactions divided by the average reduction of the negative interactions. The result of this function is the percentage of the useful side information available in a noisy channel.

In Table 8 we have an example for the Kelly criterion calculation.

| PW (Win/Cost Decrease) | PL (Lose/Cost Increase) | Expected (Averaged) Cost Gain (Quality Improvement) | Expected (Averaged) Cost Loss (Quality Worsening) | Success/Failure Ratio b | (Output) Kelly Percentage f* |

|---|---|---|---|---|---|

| 90 | 10 | +100 | -100 | +100/|-100| = 1 | 0.8 |

| 50 | 50 | +100 | -100 | +100/|-100| = 1 | 0 |

| 10 | 90 | +100 | -100 | +100/|-100| = 1 | -0.8 |

The decision criterion 1 is implemented as an evaluation of the IF logical statement for the Simulated Kelly Criterion and the Uniform Random Number output value.

Logical Statement for Method Constraint 1

For each interaction in the program

In Fig 21 we have a circuit representation of the first constraint and the representation for a sequential circuit with input and output values with a clock.

Decision Criterion 1 is implemented as an evaluation of the IF logical statement for the Simulated Kelly Criterion and the Uniform Random Number output value.

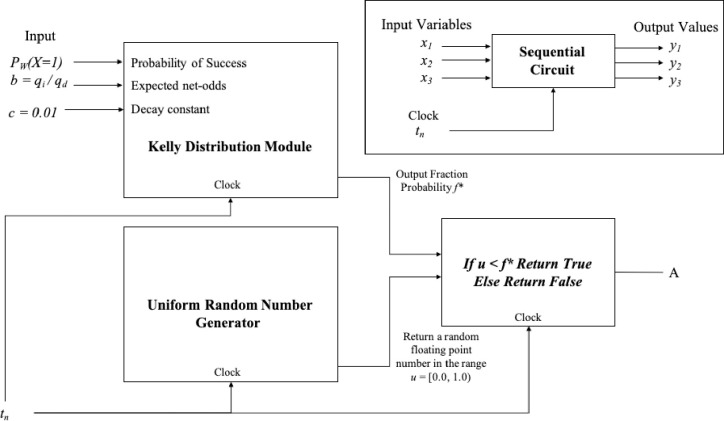

Method Constraint 2: Discrete computation distribution of a random binary variable

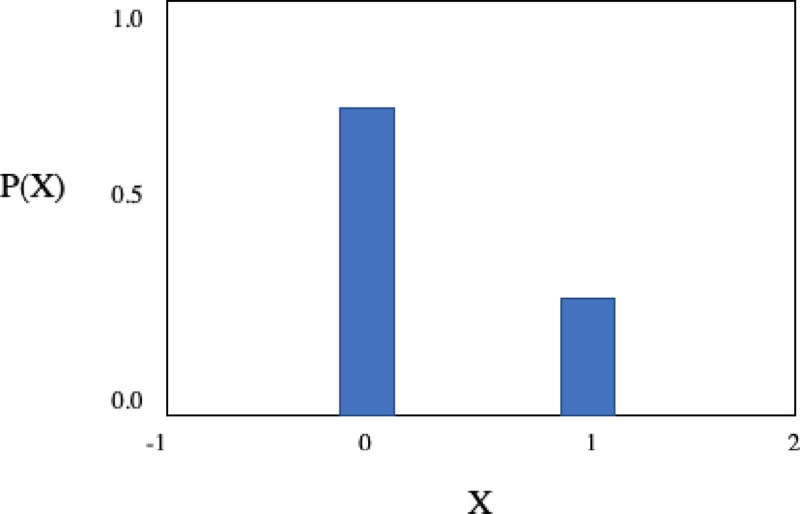

Bernoulli Process. The second constraint is defined by a Bernoulli process as a finite sequence of independent and random Bernoulli variables. This module will return as output the value “True” at 1% of the time for 100 interactions (N = 100) and it will accept unlikely (risky) solutions with negative gain to eventually provide improvements bets for the solution quality, under some degree of freedom. The process is defined as a trial with two binary states either “True/Success” (1) or “False/Failure” (0) with domain p ∈ [0,1]

In Fig 22 we can see a Bernoulli distribution with P (0) = 80% probability of output a Failure state

P(X) for the Bernoulli distribution with P(1) = 0.2 and P(0) = 1—P(1) = 0.8.

Examples of Bernoulli trials are show in Table 9.

| Event | Outcome |

|---|---|

| Play a game | Win/Lose |

| Coin toss | Head/Tail |

| Processing a request | On time/Late |

| Defect in equipment’s | Good/Defect |

| Buy-Sell an asset | Profit/Deficit |

| Optimizing traveling cost | Reduction/Increase |

The decision criterion 2 is implemented as a OR gate using the input Bernoulli Distribution Trial B and the output A of the IF statement from the Simulated Kelly Criterion and the Uniform Random Number output value.

Logical Statement for Method Constraint 2

For each interaction in the program

Decision Criterion 2 is implemented as a OR gate using the input bernoulli distribution Trial B and The output A of the IF statement from the Simulated Kelly Criterion and the uniform random number output value.

Algorithm analysis

This paper tries to address a few algorithm`s design questions to solve the TSP:

What is the shortest code that produces the optimal solution for the problem?

Objective: What is the minimal binary encoding, the self-information or the basic quantity required to represent the Bernoulli process

What is the path with less risk “of ruin” for the Salesman while also maximizing the solution quality?

Objective: Find the shortest Euclidian distance with less uncertainty

How to minimize running time requirements?

Objective: Optimize computational complexity

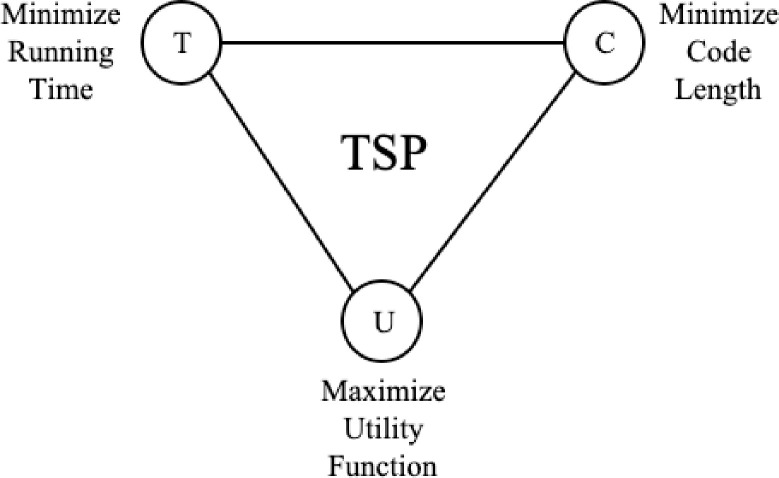

Fig 24 illustrate the TSP goal to optimize solution quality and the factors involved: Minimize Running Time; Maximize Utility Function and Minimize Code Length

TSP target objectives.

Shortest distance and shortest encoding

The computational complexity problem in Graph Theory such as the TSP and NP-Hard Problems is reduced to a combinatorial problem, using an entropy function optimization method from a Bernoulli variable defined by a Log-Normal distribution.

The method is based on the amount of self-information available in a random variable and this is the minimum encoding required to represent the problem in a Turing Machine, while producing as output the near-optimal solution found before halting. By the Kolmogorov Complexity this is the optimal program with the minimum amount of encoded information.

Mathematical definition

Assuming the Euclidian distance between nodes is the utility function for the problem. The proposed algorithm instead of trying to find the best solution directedly, using an arbitrary stochastic process, and interactively improving the string path, alternatively, the QA algorithm assumes the symbols from output Y is produced by processing the input from Bernoulli variable X, that is defined by a log-normal distribution and variables X and Y share Mutual Information. This is the amount of information X has about Y and is equal to the information Y know about X.

The lemmas for the proposed model are defined in Table 10.

| 1. The Bernoulli process B(p) is used to generate strings with length n and a pattern-code schema encoded as a Hamilton graph with probability p 2 The strings S are a Bernoulli sequence B formed with a random variable X from a finite alphabet L 3. The utility function U(S=B(X)) = Y is defined as the Euclidian Distance between nodes in a 2D plane 4. Bernoulli variables X and Y have Mutual Information 5. The Entropy Function for H(X), H(Y) and H(X|Y) can be calculated 6. E(X) is the Expected Value for X with mean and standard deviation σ1 7. E(Y) is the Expected Value for Y with mean  8. f*(Y) is the optimal encoding defined by the Total Expected Value (“Edge”) divided by the Expected Solution Quality Increase if Successful (“Odds”), for Sequence Y a. f*(Y) = Edge/Odds |

Logarithm utility model

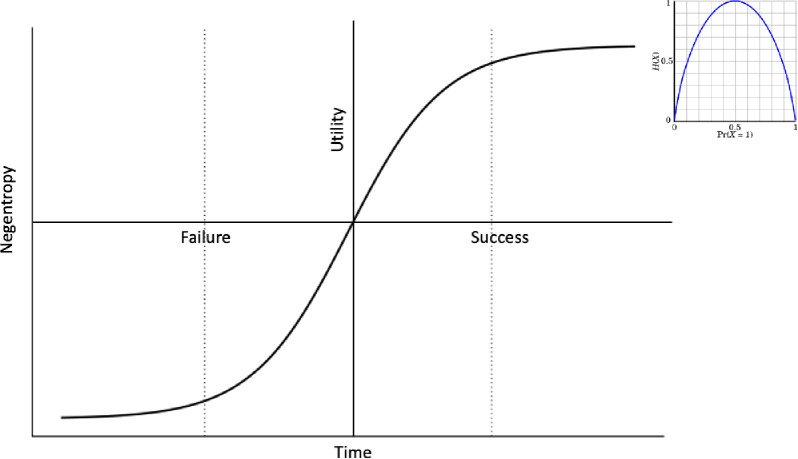

The method provides an alternative narrative for the Traveling Salesman Problem as it asks, “what is the best path between cities that simultaneously minimizes cost losses in the long run while also provide the best rate of improvement for each interaction”. The salesman wants to avoid solutions with local minima. There are several possible paths the salesman can choose, and he must guess which choice will lead to the best route over a set of candidate solutions. The uncertainty between states outcomes indicates the level of entropy (i.e. more entropy means less knowledge about the state values distribution). The reverse of entropy is the negentropy, which is defined as a temporary state condition in which a certain state distribution is more probable and more organized and thus there is less uncertainty about the state distribution for a given time frame. The utility function is demonstrated in Fig 25.

Utility function.

This approach is a model for risk measurement over many possible outcomes and is based in the work of John Kelly, Edward O. Thorp, von Neumann and Claude Shannon in logarithmic information utility, with applications in the financial markets and game theory such as the optimization in wealth growth in the long run and diversification in investment strategies in portfolio management.

Random path construction

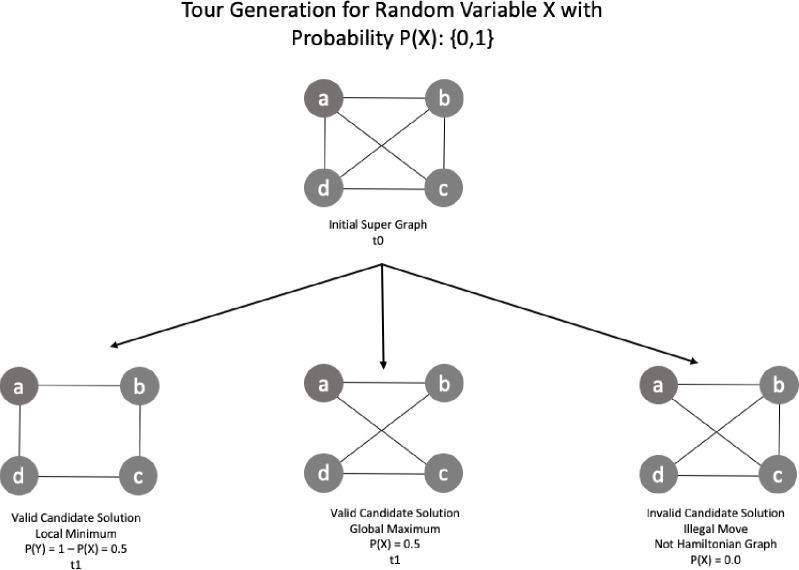

In Fig 26 we have a representation of the possible path choices to produce a candidate solution tour for TSP. The example has an alphabet L with 4 elements (n = 4). At each interaction the Salesman’s needs to choose the next node in the route. In the begging at T = t0, starting in the arbitrary node L: {a}, it has several possibilities for the next move with L’: {b,c,d}. Each element (or symbol) has a 1/3 probability in t0. In the second iteration t1, the probability for the remaining possible symbol increase to 0.5, however the probability for the nodes already chosen drops to zero, and the possible choice outcome for the next state move is reduced to L’: {c,d}.

![Tour construction for a weighted graph with a probability function p ∈ [0,1].](/dataresources/secured/content-1765739701756-33578643-e990-416b-a955-4a9bf198ffbe/assets/pone.0242285.g026.jpg)

Tour construction for a weighted graph with a probability function p ∈ [0,1].

From initial solution in time T = t0 there are a few valid sequences that can be chosen. There is no universal function to guide the Salesman with certainty on what is the best choice. This statement is supported by the Kolmogorov complexity of the string. The Salesman must then outguess this problem using the available side-information.

The side-information can be defined by the probability density function of the Euclidian distance distribution, formed by a set of valid candidate solution strings. The condition for a candidate to be valid is to be a Hamiltonian graph. Invalid solutions have zero probability of being chosen. In Fig 27 we have a set of candidate solutions with examples of valid and invalid sequences.

Candidate solution production and path decision.

This feature reduces the search space to only Hamiltonian circuits. From observation, we know that there is a greater probability that edges that crosses generally produce a total path distance larger than those nodes that do not have edges that overlap. The Fig 28 demonstrate a crossing of edges in a graph.

Edge crossing between nodes.

Algorithms such as 2opt takes advantage of this cluster aggrupation of edges and uses a strategy to remove the crossing between a group of nodes until no further improvement is found.

Solution quality and time to convergence

The method proposed is not an exact method but is guaranteed to produce the best quality in the long run, eventually, with probability 1 and smaller time to convergence, in average. This is a method that almost surely converges to a better solution quality if compared to any other heuristics when approaching the limit, as the number of algorithm iteration goes to infinity, and without needing complex string and array manipulations. Therefore, the method has a better computational cost optimization in average.

The proposed algorithm also brings more simplicity of implementation with lower encoding overhead, when compared to other algorithms such as Genetic Algorithm, Neural Networks and Ant Colony.

Computational requirements and cost effectiveness

The time constraining in QA heuristic is not a problem because the only factor to decide the running time is the degree of freedom allowed to the program. If the algorithm is expected to be more precise and with less errors, the time parameters can be adjusted with time complexity O(n) and with each interaction the entropy about the solution states is reduced, as the knowledge about the best solution increases (i.e. Smaller Euclidian distance). Therefore, the error rate can be adjusted to any level desired.

Relation with other heuristic methods

This is similar to the approach proposed by the Cross-Entropy Method, but the Quantitative Algorithm method does not resample or update the probability density function at each interaction, but instead embraces randomness and accepts candidate solution according to a simulated decreasing entropy function, with a binary random switch attached.

This mechanism is similar to the Manhattan Rule used by the Simulated Annealing algorithm. The proposed method use the simulated Kelly function ratio value α = f* to compare against a random control value u, than the output is evaluated again using a binary operation switch B which returns True or False, following a dependable probability function, that is decremented by a constant rate c from P(X) = 100 until P(X) = 1/100, at each interaction tN. This schema is used to provide alternatives paths and solve the hill-climbing limitation that is also present in many solvers of the TSP.

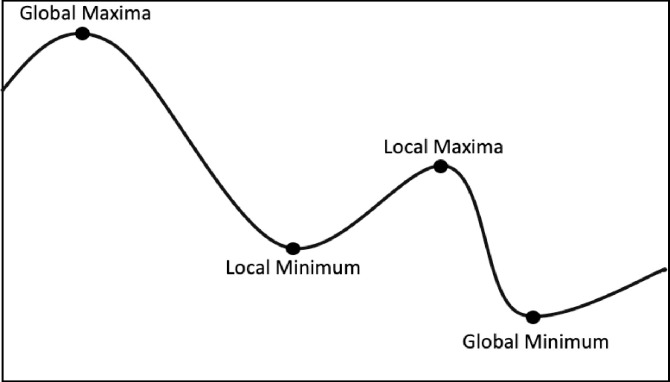

In Fig 29 we have a demonstration for the hill-climbing with examples of Global and Local points with the respective Maximum and Minimum positions.

Hill-Climbing problem.

Running costs

The running cost for the Quantitative Algorithm is the defined by the computational effort to generate a random candidate tour string and to evaluated with the current registered best-known solution, encoded by a Hamiltonian graph pattern, in O(N) time.

For each iteration, it has the computing cost to calculate the simulated entropy heuristic function and compare against the control values. These values can be calculated in constant time T(n). The memory required is defined by an array M of candidate solutions elements with size O(M) = 2. The array is a buffer to store the best candidate solution and the alternative solution.

Disadvantages and Limitations

Because of this design, the running time to converge is worst for the QA algorithm when compared to other methods, such as 2opt and greedy, at small instances of the problem with less than a few nodes (n<15). If compared to heuristics such as Simulated Annealing, Genetic Algorithm or Ant Colony the execution time is similar but requires less time in average. This behavior is observed in our computer simulation.

This is explained by the overhead in calculating the probabilistic temperature-function in Simulated Annealing (or the mutate-reproduce-selection operations in Genetic Algorithms) that are used as the acceptance criteria for the random candidate solutions and therefore the proposed entropy derived function in QA has a smaller running time overhead.

The running time cost for the proposed algorithm scales linearly to input with length n and the solution can be found in polynomial time in any Turing Machine. In Table 11 we have a comparison between classes of computational time complexity.

| Runtime Complexity | Computational Class | Time to Convergence (1/8) | Solution (Search)Space Volume | Example Algorithm |

|---|---|---|---|---|

| O(1) | Constant | 0000000000 | 0000000000 | |

| O(log n) | Logarithmic | 0000000001 | 0000000001 | Binary Search |

| O(n) | Linear | 0000000011 | 0000000011 | Linear Search |

| O(n log n) | Linearithmic time | 0000000111 | 0000000111 | k-opt method |

| O(n2) | Quadratic | 0000001111 | 0000001111 | Nearest neighbor (NN) |

| O(n3) | Cubic | 0000011111 | 0000011111 | Christofides and Serdyukov |

| O(nk) | Polynomial | 0000111111 | 0000111111 | A* |

| O(2n) | Exponential | 0001111111 | 0001111111 | |

| O(n!) | Factorial | 0011111111 | 0011111111 | Brute-Force |

| O(inf) | Infinite Time | 0111111111 | 0111111111 |

The proposed method has limitations. It needs a larger running time to converge for small instances if compared to heuristics such as 2opt and Greedy Algorithm, as it must run until the predefined max number of interactions parameter value is reached.

We have also observed that the local search methods such as 2opt, converge with less running time when the input is pre-sorted and thus help avoiding the algorithm to being lock down in local minima solutions. However, this implies that a predefined prefix schema must be directly encoded in the input string values, with the goal to improve running time performance. The pre-processing of the input string works as a prefix set for filtering “above average” solutions. This is explained by the Kolmogorov randomness of a string.

Alternatively, the proposed method does not need such implementation bias. This behavior can be explained by Algorithm Information Theory. The 2opt method for example is sensitive to the initial solution set sequence and if provided with an initial state with more noise than the running time also increases.

This means there are more elements to verify in the search space and thus more time is needed until converge. In the other hand, if there is less noise in the initial state, than the sub-optimal end state is found quickly. In comparison the proposed model is resistant to variations in the initial path sequence positions.

Computational results

The research evaluates the performance of the proposed algorithm through a series of test cases and statistical analysis. The new method is tested against the traditional method Simulated Annealing (Benchmark). Each test case was run for a trial with population length of N = 60. In the statistical analysis section, the t-test was used to compare the means between the sample groups. The null hypothesis is that there is no difference between the means of the two populations.

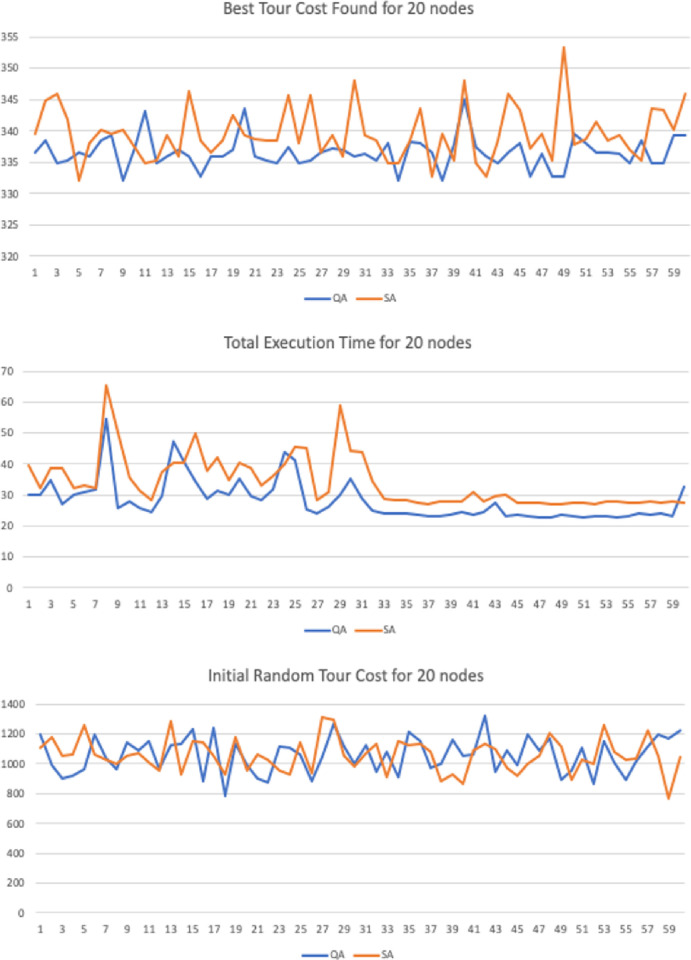

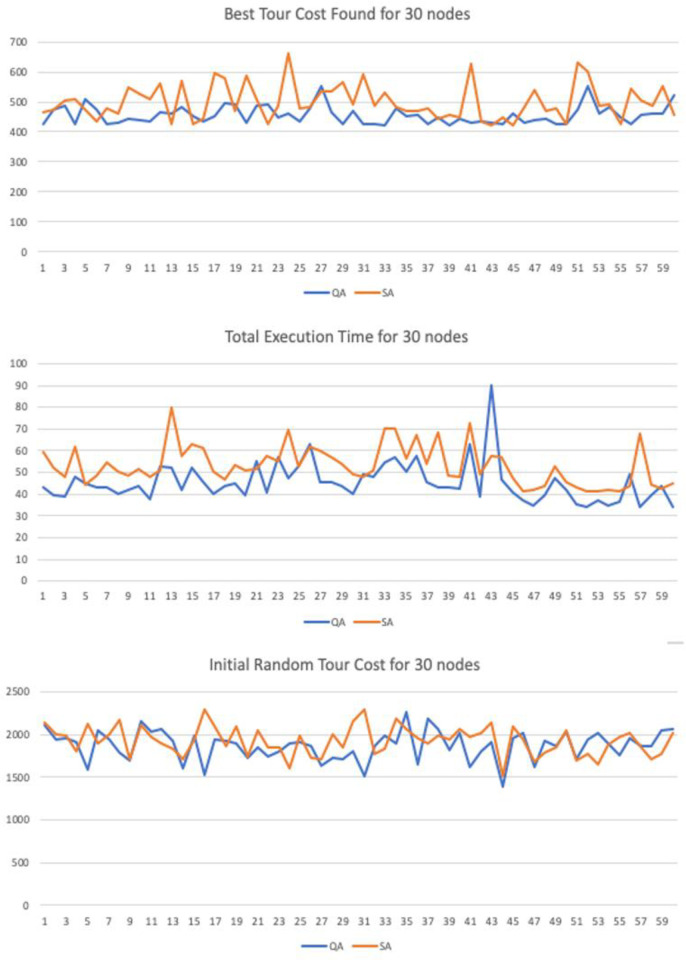

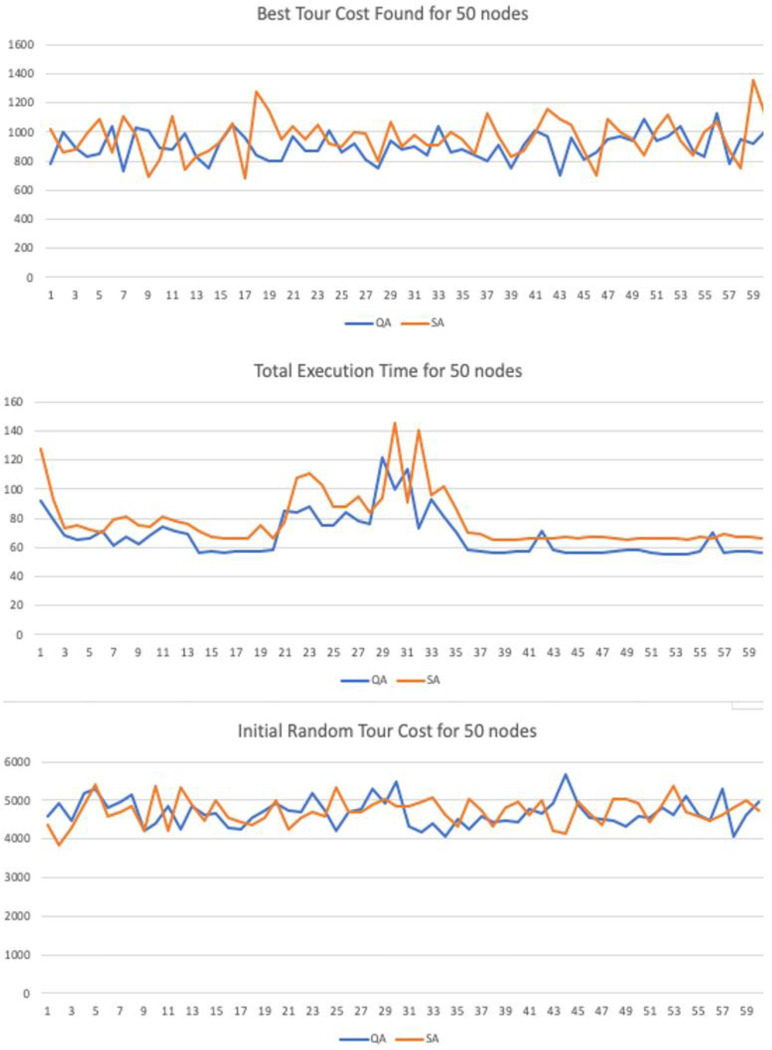

The input parameters where tested with different values in order to verify if the method was sensitive to changes in the controlling parameters. In the numerical simulation we have implemented 3 variations for graphs with 20, 30 and 50 nodes. Each 2D graph was used as input for the Quantitative Algorithm (QA) and the benchmark heuristic algorithm Simulated Annealing (SA). The maximum number of interactions was set to be the same with 40,000 interactions.

Similarly, to heuristics such as GA, SA and AC the method runs until it reaches a maximum number of iterations. We have set this parameter equality between the proposed and benchmark method to a fixed value of i = 40,000 iterations. The constant decay rate for the simulated entropy probability was set to c = 0.0001, the starting probability was set to p = 0.999 and variable net-odds b was defined by a random uniform value between (0.01, 2]. These parameters can be adjusted to improve the quality according to the number of input nodes, but the simulation results support the thesis the proposed method is resistant to variations in the input parameters under a given degree of freedom.

The sample trial length was also set equality to both samples with N = 60. We have also performed the Shapiro-Wilk test for normality to guarantee the input sequences were not skewed or distorted to any side. The T-Test was used to than verify if the results where statically significant in regards of the final best cost distance and the running time variables, with a confidence level of 95%.

We have also taken additional precautious to compare the performance in the numerical simulation. To achieve this all starting paths are randomly flushed—with a function in Python programing language—before execution begin, in order to guarantee the fairness when running and comparing all algorithms.

Computational statistical analysis

To demonstrate the significance and accuracy of the results we have performed a series of statistical tests to guarantee they are fair. For the numerical simulations we have created 3 test cases with 2D graphs n = {20, 30 50} nodes. Each trial has a sample with length N = 60. There are three variables recorded:

Initial Random Tour Cost: Starting total Euclidian cost for the initial path

Best Tour Cost Found: Distance for the best candidate solution found

Total Execution Time: Running Time until convergence.

The method has a few requirements:

The cost distance X can be calculated by a logarithmic utility function U

X is a random variable defined by U(X) = Y

Variables X and Y have Mutual Information

Variable X is encoded by a log-normal distribution

A path is a random sequence produced by a Bernoulli process B with probability p

The paths are Hamiltonian

The estimated value for Y is the expected solution quality

We will use a subset with N = 10 from trial with n = 50 nodes and length N = 60 to demonstrate the simulation results in Table 12.

| 50-node | ||||||

|---|---|---|---|---|---|---|

| Trial N = 60 | Initial Random Tour Cost | Best Tour Cost Found | Total Execution Time | |||

| 0 | QA | SA | QA | SA | QA | SA |

| 1 | 4584.994704 | 4350.530615 | 783.6135781 | 1013.45381 | 91.94147284 | 127.121441 |

| 2 | 4931.04388 | 3829.72755 | 1002.266343 | 856.714194 | 79.49352819 | 93.1328427 |

| 3 | 4459.545641 | 4285.493079 | 892.4679561 | 878.335327 | 67.90769484 | 73.4395111 |

| 4 | 5189.508014 | 4873.122362 | 825.1186081 | 986.814761 | 65.57152077 | 74.8066559 |

| 5 | 5303.265261 | 5389.224823 | 846.448402 | 1085.77975 | 65.92321619 | 72.4844698 |

| 6 | 4792.633045 | 4595.016823 | 1036.163983 | 855.195737 | 71.40535116 | 70.3046693 |

| 7 | 4951.587728 | 4711.457297 | 735.82339 | 1110.36984 | 61.43196151 | 78.8009844 |

| 8 | 5127.650419 | 4851.865628 | 1028.714485 | 974.535522 | 67.16026587 | 80.7510912 |

| 9 | 4213.123791 | 4227.131367 | 1006.163651 | 693.015657 | 61.80173641 | 75.0614487 |

| 10 | 4388.805181 | 5361.415343 | 887.0345646 | 813.130685 | 68.36587932 | 73.5915055 |

Fair run and descriptive statistics

We have implemented the proposed quantitative algorithm (QA) and the Simulated Annealing (SA) heuristic in a set of trials with n = {20, 30, 50} nodes and a sample with length N = 60. To guarantee the results are fair we have analyzed the distribution with the Shapiro-Wilk test to demonstrate the sample is normally distributed. The QA and SA tests for n = 50 nodes is described below:

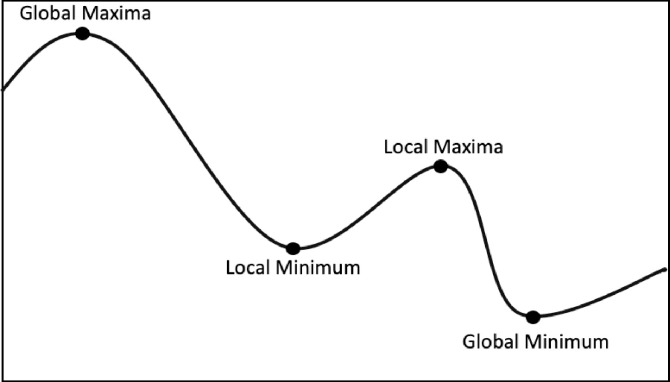

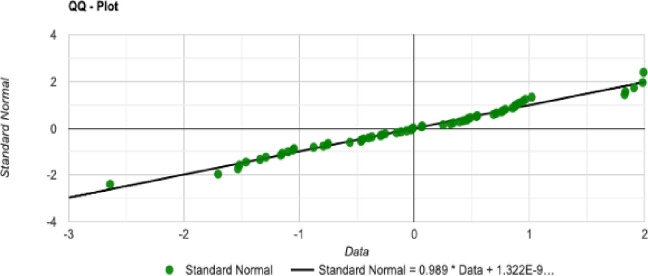

Results from QA simulation: Initial random tour cost variable

The statistical description for the QA method distribution with n = 50 nodes and length N = 60 is described as follow

Nodes in the graph (n): 50

Sample size (N): 60

Average (

Median: 4633.623941

Sample Standard Deviation (σ): 348.057997

Sum of Squares: 7147517.782

b: 2640.163152

Skewness: 0.543901

Skewness Shape: Potentially Symmetrical (pval = 0.078)

Excess kurtosis: 0.152926

Tails Shape: Potentially Mesokurtic, normal like tails (pval = 0.802)

P-value: 0.260661

Outliers: 5651.239742

The normal distribution with average

QA sample: Normal distribution for n = 50 and N = 60.

Histogram for the initial tour cost in the QA method.

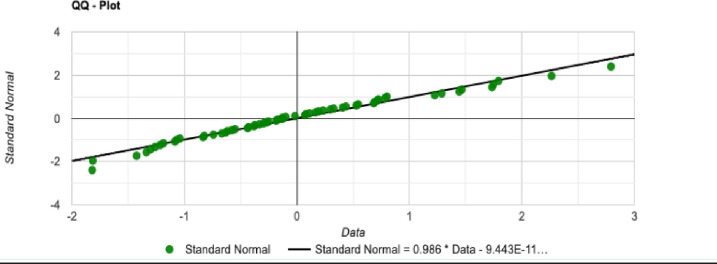

QQ-Plot for the initial tour cost in the QA method.

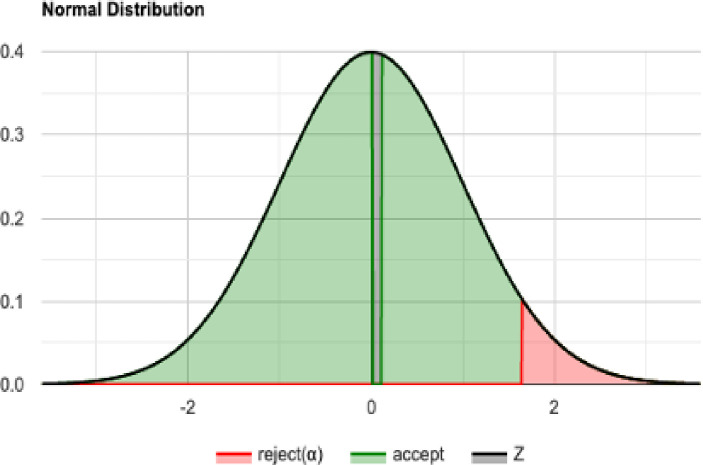

Shapiro-Wilk test, using a right-tailed normal distribution

H0 hypothesis

Since p-value > α, we accept the H0. It is assumed that the data is normally distributed. In other words, the difference between the data sample and the normal distribution is not big enough to be statistically significant.

P-value

p-value is 0.260661, hence, if we would reject H0, the chance of type1 error (rejecting a correct H0) would be too high: 0.2607 (26.07%). The larger the p-value, the more it supports H0

The statistics

W is 0.975228. It is in the 95% critical value accepted range: [0.9605: 1.0000]

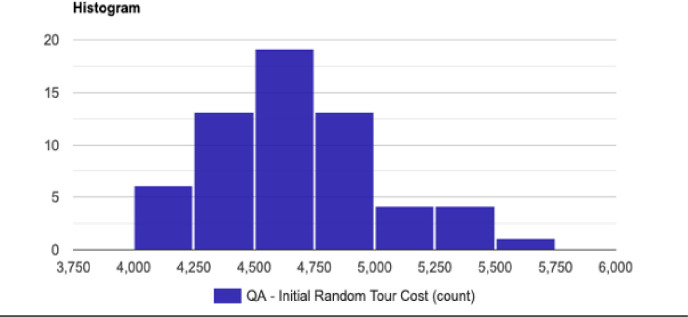

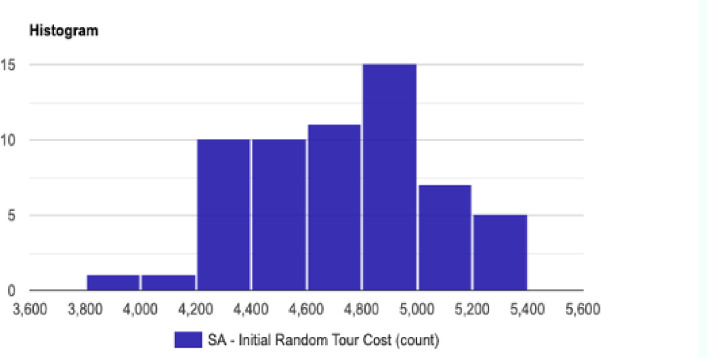

Results from SA simulation: Initial random tour cost variable

The statistical description for the SA method distribution with n = 50 nodes and length N = 60 is described as follow

Nodes in the graph (n): 50

Sample size (N): 60

Average (

Median: 4713.7218465000005

Sample Standard Deviation (σ): 336.776436

Sum of Squares: 6691683.709

b: 2562.233720

Skewness: -0.0726740

Skewness Shape: Potentially Symmetrical (pval = 1.186)

Excess kurtosis: -0.128238

Tails Shape: Potentially Mesokurtic, normal like tails (pval = 1.167)

P-value: 0.475660

Outliers:

The normal distribution with average

SA Sample: Normal distribution for n = 50 and N = 60.

Histogram for the initial tour cost in the SA method.

QQ-Plot for the initial tour cost in the SA method.

Shapiro-Wilk test, using a right-tailed normal distribution

H0 hypothesis

Since p-value > α, we accept the H0. It is assumed that the data is normally distributed. In other words, the difference between the data sample and the normal distribution is not big enough to be statistically significant.

P-value

p-value is 0.475660, hence, if we would reject H0, the chance of type1 error (rejecting a correct H0) would be too high: 0.4757 (47.57%). The larger the p-value, the more it supports H0

The statistics

W is 0.981075. It is in the 95% critical value accepted range: [0.9605: 1.0000]

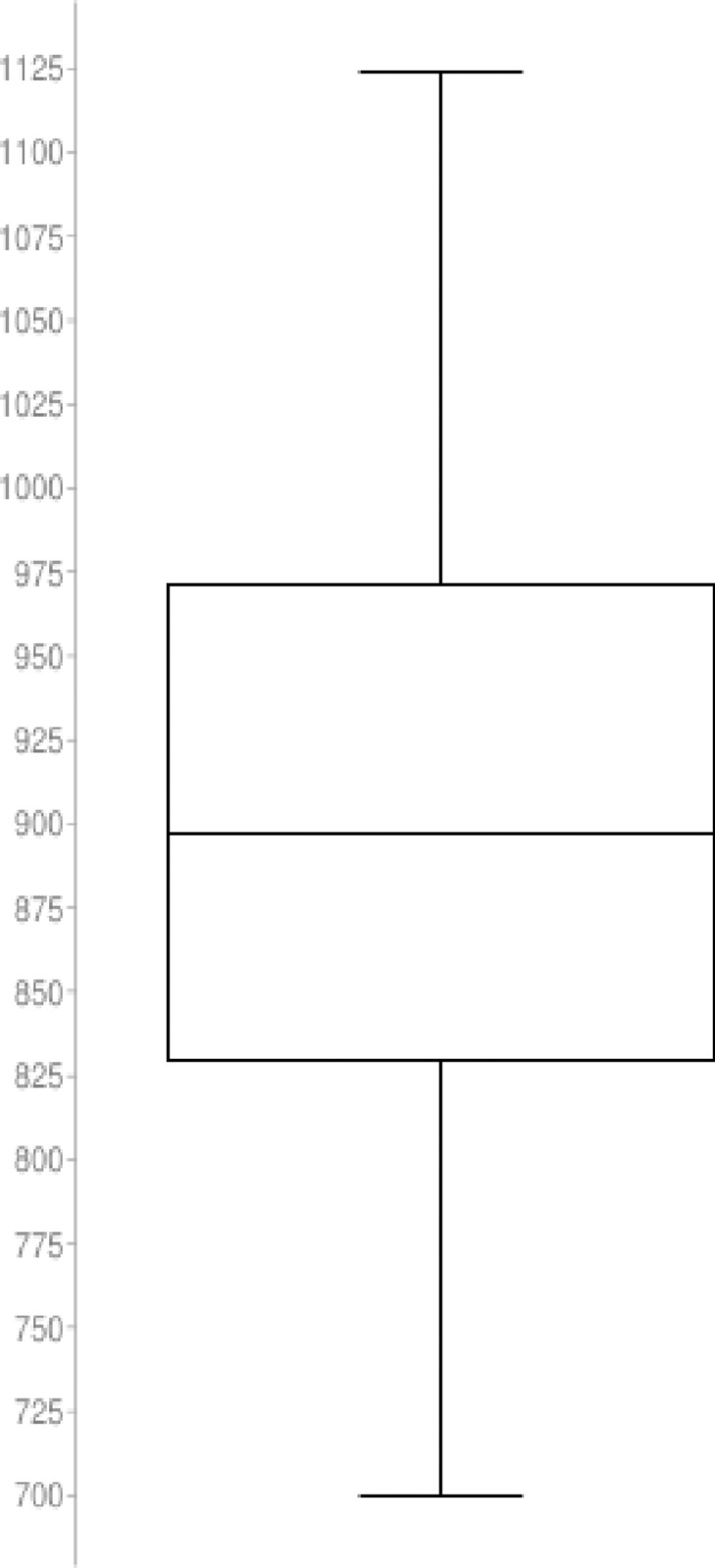

Next we have created the boxplot graph to represent the median, average and the quartiles for QA and SA sample distributions.

Results from QA simulation: Best tour cost found

The statistical description for the QA method distribution with n = 50 nodes and length N = 60 is described as follow

Sample size: 60

Median: 897.3196982

Minimum: 700.0706523

Maximum: 1123.628464

First quartile: 829.287859975

Third quartile: 971.0301233

Interquartile Range: 141.742263325

Outliers: none

The boxplot for the QA distribution is illustrated in Fig 36

Boxplot for the QA simulation.

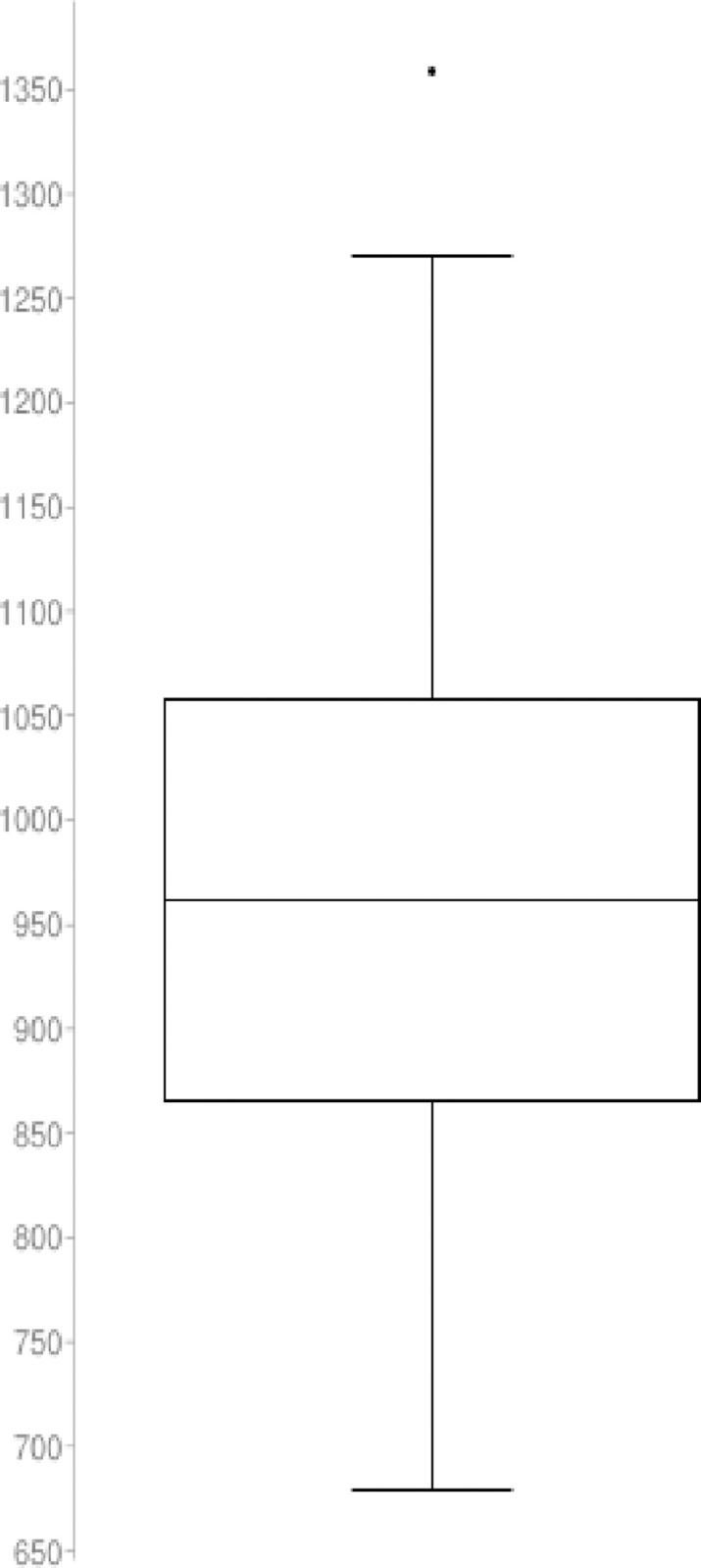

Results from SA simulation: Best tour cost found

The statistical description for the SA method distribution with n = 50 nodes and length N = 60 is described as follow

Sample size: 60

Median: 961.0134322

Minimum: 679.4900154

Maximum: 1358.45442

First quartile: 865.4905371

Third quartile: 1057.63755725

Interquartile Range: 192.14702015

Outlier: 1358.45442

The boxplot for the SA distribution is illustrated in Fig 37

Boxplot for the SA simulation.

Test cases and computer simulation

The test cases are a set with 20, 30 and 50 nodes. The network latency between each node is bounded by the geographic distances between the machines, made available by the computing service provider. It’s the time required to send a message over a noisy communication channel. The proposed algorithm was used to find the optimal network route to deploy a process in a cluster of machines. In Tables 13–15 we present the Euclidian coordinates used to generate the candidate solutions and the cost matrix. We have provided a sample to demonstrate the results between QA and SA from the 180 trials (N = 60 for each sample). The data shows the first initial tour and the optimal solution found for each algorithm (the example data was extracted from the results produced by the simulations).

List of nodes with length N = 20 with coordinates (X, Y) in a 2D plan.

| Node | X | Y |

|---|---|---|

| 1 | 288 | 149 |

| 2 | 288 | 129 |

| 3 | 270 | 133 |

| 4 | 256 | 141 |

| 5 | 256 | 157 |

| 6 | 246 | 157 |

| 7 | 236 | 169 |

| 8 | 228 | 169 |

| 9 | 228 | 161 |

| 10 | 220 | 169 |

| 11 | 212 | 169 |

| 12 | 204 | 169 |

| 13 | 196 | 169 |

| 14 | 188 | 169 |

| 15 | 196 | 161 |

| 16 | 188 | 145 |

| 17 | 172 | 145 |

| 18 | 164 | 145 |

| 19 | 156 | 145 |

| 20 | 148 | 145 |

The QA simulation results are described with four variables

First solution path: ['1', '20', '8', '7', '17', '13', '2', '3', '16', '9', '15', '11', '14', '10', '6', '19', '4', '5', '18', '12']

Total distance cost from the first(starting) solution: 1145.7617186058662

Best solution Path: ['6', '5', '1', '2', '3', '4', '9', '15', '16', '17', '18', '19', '20', '14', '13', '12', '11', '10', '8', '7']

Total distance cost for the best solution found: 332.1144088148687

List of nodes with length N = 30 with coordinates (X, Y) in a 2D plan

| Node | X | Y |

|---|---|---|

| 1 | 288 | 149 |

| 2 | 288 | 129 |

| 3 | 270 | 133 |

| 4 | 256 | 141 |

| 5 | 256 | 157 |

| 6 | 246 | 157 |

| 7 | 236 | 169 |

| 8 | 228 | 169 |

| 9 | 228 | 161 |

| 10 | 220 | 169 |

| 11 | 212 | 169 |

| 12 | 204 | 169 |

| 13 | 196 | 169 |

| 14 | 188 | 169 |

| 15 | 196 | 161 |

| 16 | 188 | 145 |

| 17 | 172 | 145 |

| 18 | 164 | 145 |

| 19 | 156 | 145 |

| 20 | 148 | 145 |

| 21 | 140 | 145 |

| 22 | 148 | 169 |

| 23 | 164 | 169 |

| 24 | 172 | 169 |

| 25 | 156 | 169 |

| 26 | 140 | 169 |

| 27 | 132 | 169 |

| 28 | 124 | 169 |

| 29 | 116 | 161 |

| 30 | 104 | 153 |

The QA simulation results are described with four variables

First solution path: ['20', '24', '23', '9', '2', '6', '21', '15', '27', '1', '11', '14', '29', '25', '5', '28', '13', '7', '18', '22', '26', '4', '19', '12', '3', '17', '10', '30', '8', '16']

Total distance cost from the first(starting) solution: 2114.8616643887417

Best solution Path: ['4', '9', '12', '13', '14', '24', '23', '25', '22', '26', '27', '28', '29', '30', '21', '20', '19', '18', '17', '16', '15', '11', '10', '8', '7', '6', '5', '1', '2', '3']

Total distance cost for the best solution found: 423.26765018746386

List of nodes with length N = 50 with coordinates (X, Y) in a 2D plan

| Node | X | Y |

|---|---|---|

| 1 | 288 | 149 |

| 2 | 288 | 129 |

| 3 | 270 | 133 |

| 4 | 256 | 141 |

| 5 | 256 | 157 |

| 6 | 246 | 157 |

| 7 | 236 | 169 |

| 8 | 228 | 169 |

| 9 | 228 | 161 |

| 10 | 220 | 169 |

| 11 | 212 | 169 |

| 12 | 204 | 169 |