Kinetic Ising models are powerful tools for studying the non-equilibrium dynamics of complex systems. As their behavior is not tractable for large networks, many mean-field methods have been proposed for their analysis, each based on unique assumptions about the system’s temporal evolution. This disparity of approaches makes it challenging to systematically advance mean-field methods beyond previous contributions. Here, we propose a unifying framework for mean-field theories of asymmetric kinetic Ising systems from an information geometry perspective. The framework is built on Plefka expansions of a system around a simplified model obtained by an orthogonal projection to a sub-manifold of tractable probability distributions. This view not only unifies previous methods but also allows us to develop novel methods that, in contrast with traditional approaches, preserve the system’s correlations. We show that these new methods can outperform previous ones in predicting and assessing network properties near maximally fluctuating regimes.

Many mean-field theories are proposed for studying the non-equilibrium dynamics of complex systems, each based on specific assumptions about the system’s temporal evolution. Here, Aguilera et al. propose a unified framework for mean-field theories of asymmetric kinetic Ising systems to study non-equilibrium dynamics.

Advances in high-throughput data acquisition technologies for very large biological and social systems are providing unprecedented possibilities to investigate their complex, non-equilibrium dynamics. For example, optical recordings from genetically modified neural populations make it possible to simultaneously monitor activities of the whole neural network of behaving C. elegans1 and zebrafish2, as well as thousands of neurons in the mouse visual cortex3. Such networks generally exhibit out-of-equilibrium dynamics4, and are often found to self-organize near critical regimes at which their fluctuations are maximized5,6. Evolution of such systems cannot be faithfully captured by methods assuming an asymptotic equilibrium state. Therefore, in general, there is a pressing demand for mathematical tools to study the dynamics of large-scale, non-equilibrium complex systems and to analyze high-dimensional datasets recorded from them.

The kinetic Ising model with asymmetric couplings is a prototypical model for studying such non-equilibrium dynamics in biological7,8 and social systems9. It is described as a discrete-time Markov chain of interacting binary units, resembling the nonlinear dynamics of recurrently connected neurons. The model exhibits non-equilibrium behavior when couplings are asymmetric or when model parameters are subject to rapid changes, ruling out quasi-static processes. These conditions induce a time reversal asymmetry in dynamical trajectories, leading to positive entropy production (the second law of thermodynamics) as revealed by the fluctuation theorem10–15 (see refs. 16,17 for reviews). This time-asymmetry is characteristic of non-equilibrium systems as it can only be displayed by systems in which energy dissipation takes place18. In the case of symmetric connections and static parameters, the model converges to an equilibrium stationary state. Consequently, it is a generalization of its equilibrium counterpart known as the (equilibrium) Ising model19.

The forward Ising problem refers to calculating statistical properties of the model, such as mean activation rates (mean magnetizations of spins) and correlations, given the parameters of the model. In contrast, inference of the model parameters from data is called the inverse Ising problem20. In this regard, kinetic Ising models21,22 and their equilibrium counterparts23–25 have become popular tools for modeling and analyzing biological and social systems. In addition, they capture memory retrieval dynamics in classical associative networks. Namely, they are equivalent to the Boltzmann machine, extensively used in machine learning applications20. Unfortunately, exact solutions of the forward and inverse problems often become computationally too expensive due to the combinatorial explosion of possible patterns in large, recurrent networks or the high volume of data, and applications of exact or sampling-based methods are limited in practice to around a hundred of neurons5,25,26. In consequence, analytical approximation methods are necessary for analysing large systems. In this endeavour, mean-field methods have emerged as powerful tools to track down otherwise intractable statistical quantities.

The standard mean-field approximations to study equilibrium Ising models are the classical naive mean-field (nMF) and the more accurate Thouless-Anderson-Palmer (TAP) approximations27. These methods have also been employed to solve the inverse Ising problem28–31. Plefka demonstrated that the nMF and TAP approximations for the equilibrium model can be derived using the power series expansion of the free energy around a model of independent spins, a method which is now referred to as the Plefka expansion32. This expansion up to the first and second orders leads to the nMF and TAP mean-field approximations respectively. The Plefka expansion was later formalized by Tanaka and others in the framework of information geometry33–37.

In non-equilibrium networks, however, the free energy is not directly defined, and thus it is not obvious how to apply the Plefka expansion. Kappen and Spanjers38 proposed an information geometric approach to mean-field solutions of the asymmetric Ising model with asynchronous dynamics. They showed that their second-order approximation for an asymmetric model in the stationary state is equivalent to the TAP approximation for equilibrium models. Later, Roudi and Hertz derived TAP equations for nonstationary states using a Legendre transformation of the generating functional of the set of trajectories of the model39. Another study by Roudi and Hertz extended mean-field equations to provide expressions for the nonstationary delayed correlations assuming the presence of equal-time correlations at the previous step40. Yet another interesting method proposed by Mézard and Sakellariou approximates the local fields by a Gaussian distribution according to the central limit theorem, yielding more accurate results for fully asymmetric networks41. This method was later extended to include correlations at the previous time step, improving the results for symmetric couplings42. More recently, Bachschmid-Romano et al. extended the path-integral methods in ref. 39 with Gaussian effective fields43, not only recovering ref. 41 for fully asymmetric networks but also proposing a method that better approximates mean rate dynamics by conserving autocorrelations of units. Although many choices of mean-field methods are available, the diversity of methods and assumptions makes it challenging to advance systematically over previous contributions.

Here, we propose a unified approach for mean-field approximations of the Ising model. While our method is applicable to symmetric and equilibrium models, we focus for generality on asymmetric kinetic Ising models. Our approach is defined as a family of Plefka expansions in an information geometric space. This approach allows us to unify and relate existing mean-field methods and to provide expressions for other statistics of the systems such as pairwise correlations. Furthermore, our approach can be extended beyond classical mean-field assumptions to propose novel approximations. Here, we introduce an approximation based on a pairwise model that better captures network correlations, and we show that it outperforms existing approximations of kinetic Ising models near a point of maximum fluctuations. We also provide a data-driven method to reconstruct and test if a system is near a phase transition by combining the forward and inverse Ising problems, and demonstrate that the proposed pairwise model more accurately estimates the system’s fluctuations and its sensitivity to parameter changes. These results confirm that our unified framework is a useful tool to develop methods to analyze large-scale, non-equilibrium biological and social dynamics operating near critical regimes. In addition, since the methods are directly applicable to Boltzmann machine learning, the geometrical framework introduced here is relevant in machine learning applications.

The paper is organized as follows. First, we introduce the kinetic Ising model and its statistical properties of interest. Second, we introduce our framework for the Plefka approximation methods from a geometric perspective. To explain how it works, we derive the classical naive and TAP mean-field approximations under the proposed framework. Third, we show that our approach can unify other known mean-field approximation methods. We then propose a novel pairwise approximation under this framework. Finally, we compare different mean-field approximations in solving the forward and inverse Ising problems, as well as in performing the data-driven assessment of the system’s sensitivity. The last section is devoted to discussion.

The kinetic Ising model is the least structured statistical model containing delayed pairwise interactions between its binary components (i.e., a maximum caliber model44). The system consists of N interacting binary variables (down or up of Ising spins or inactive or active of neural units) si,t ∈ { − 1, + 1}, i = 1, 2, . . , N, evolving in discrete-time steps t with parallel dynamics. Given the configuration of spins at t − 1, st−1 = {s1,t−1, s2,t−1, …, sN,t−1}, spins st at time t are conditionally independent random variables, updated as a discrete-time Markov chain, following

The parameters H = {Hi} and J = {Jij} represent local external fields at each spin and couplings between pairs of spins respectively. When the couplings are asymmetric (i.e., Jij ≠ Jji), the system is away from equilibrium because the process is irreversible with respect to time. Given the probability mass function of the previous state P(st−1), the distribution of the current state is:

In this article, we use variants of the Plefka expansion to calculate some statistical properties of the system. Namely, we investigate the average activation rates mt, correlations between pairs of units (covariance function) Ct, and delayed correlations Dt given by

Note that mt and Dt are sufficient statistics of the kinetic Ising model. Therefore, we will use them in solving the inverse Ising problem (see Methods). We additionally consider the equal-time correlations Ct as they are commonly used to describe neural systems, and are investigated by some of the mean-field approximations in the literature40. Calculation of these expectation values is analytically intractable and computationally very expensive for large networks, due to the combinatorial explosion of the number of possible states. To reduce this computational cost, we approximate the marginal probability distributions (Eq. (3)) by the Plefka expansion method that utilizes an alternative, tractable distribution.

Information geometry37,45,46 provides clear geometrical understanding of information-theoretic measures and probabilistic models15,47,48. Using the language of information geometry, we introduce our method for mean-field approximations of kinetic Ising systems.

Let

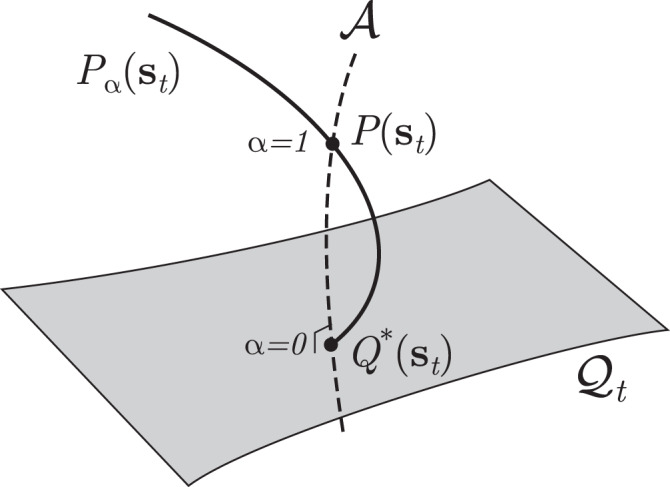

A geometric view of the approximations based on Plefka expansions.

The point P(st) is the marginal distribution of a kinetic Ising model at time t. The submanifold

The simplest submanifold

Our first goal is to find the average activation rates of the target distribution P(st∣H, J). It turns out that they can be obtained from the independent model Q(st∣Θt) that minimizes the following Kullback-Leibler (KL) divergence from P(st):

The independent model

From an information geometric point of view, given mt (or

Although the m-projection provides the exact and unique average activation rates, its calculation in practice requires the complete distribution P(st). In the Plefka expansion, we relax the constraints of the m-projection, and introduce another set of more tractable distributions that passes only through P(st∣H, J) and

The Plefka expansions of these statistics are defined as the Taylor series expansion of these functions around α = 0. In the case of the mean activation rate, the expansion up to the nth-order leads to:

What is the difference between this approach and other mean-field methods? Conventionally, naive mean-field approximations are obtained by minimizing D(Q∣∣P) as opposed to D(P∣∣Q) (Eq. (8))36,49. This approach is typically used in variational inference to construct a tractable approximate posterior in machine learning problems. Following the Bogolyubov inequality, minimizing this divergence is equivalent to minimizing the variational free energy. Geometrically, it comprises an e-projection of P(st∣H, J) to the submanifold

In the subsequent sections we show that different approximations of the marginal distribution P(st) in Eq. (3) can be constructed by replacing P(si,τ∣sτ−1) with Pα(si,τ∣sτ−1) for different pairs i, τ (here we will explore the cases of τ = t and τ = t − 1). More generally, we show in Supplementary Note 1 that this framework can be extended to a marginal path of arbitrary length k, P(st−k+1, …, st). In addition, we are not restricted to manifolds of independent models. The independent model is adopted as a reference model to approximate the average activation rate, but one can also more accurately approximate correlations using this method. In this vein, we can extend our framework to use reference manifolds

Before elaborating different mean-field approximations, we demonstrate our method by deriving the known results of the classical nMF and TAP approximations for the kinetic Ising model38,39. In order to derive these classical mean-field equations, we make a Plefka expansion around the points

Following Eq. (13), for the first order approximation we have

We apply the same expansion to approximate the correlations, expanding Cik,t(α) and Dil,t(α) around α = 0 up to the first order using

To obtain the second-order approximation, we need to solve

Having

In these approximations, Eqs. (16) and (20) of activation rates mt correspond to the classical nMF and TAP equations of the kinetic Ising model38,39. The mean-field equations for the equal-time and delayed correlations (Eqs. (17), (18), (21), and (22)) are novel contributions from applying the Plefka expansion to correlations.

Using the equations above, we can compute the approximate statistical properties of the system at t (mt, Ct, Dt) from mt−1. Therefore, the system evolution is described by recursively computing mt from an initial state m0 (for both transient and stationary dynamics), although approximation errors accumulate over the iterations. After we introduce a unified view of mean-field approximations in the subsequent sections, we will numerically examine approximation errors of these various methods in predicting statistical structure of the system.

In the previous section, we described a Plefka expansion that uses a model containing independent units at time t − 1 and t to construct a marginal probability distribution

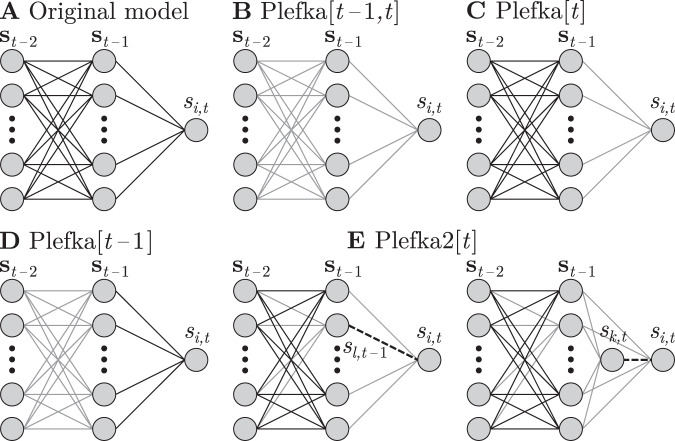

In the following sections, we show that various approximation methods, including those mentioned above, can be unified as Plefka expansions. Each method of the approximation corresponds to a specific choice of the submanifold

Unified mean-field framework.

Original model (A) and family of generalized Plefka expansions (B–E). Gray lines represent connections that are proportional to α and thus removed in the approximated model to perform the Plefka expansions, while solid black lines are conserved and dashed lines are free parameters. Plefka[t − 1, t] (B) retrieves the classical naive and TAP mean-field equations38,39. Plefka[t] (C) results in a novel method which preserves correlations of the system at t − 1, incorporating equations similar to ref. 40. Plefka[t − 1] (D) assumes independent activity at t-1, and in its first order approximation reproduces the results in ref. 41. Plefka2[t] (E) represents a novel pairwise approximation which performs better in approximating correlations.

For the Plefka[t − 1, t] approximation, explained above, the system becomes independent for α = 0 at t as well as t − 1. This leads to approximations of mt, Ct, Dt being specified by mt−1, while being independent of Ct−1 and Dt−1. In ref. 40, the authors describe a mean-field approximation by performing new expansion over the classical nMF and TAP equations that takes into account previous correlations Ct−1. Here, our framework allows us to obtain similar results by considering only a Plefka expansion over manifold

Note that Eqs. (24) and (25) are the same as the nMF Plefka[t − 1, t] equations. Equation (26) includes Ct−1, being exactly the same result obtained in ref. 40, Eq. (4). The second-order approximations leads to:

All update rules include the effect of Ct−1. We can see that if we use the covariance matrix of the independent model at t − 1, we recover the results of the Plefka[t − 1, t] approximation in the previous section. In contrast with ref. 40, we provide a novel approximation method that depends on previous correlations using a single expansion (instead of two subsequent expansions), and additionally present approximated equal-time correlations.

In ref. 41, a mean-field method is proposed by approximating the effective field ht as the sum of a large number of independent spins, approximated by a Gaussian distribution, yielding exact results for fully asymmetric networks in the thermodynamic limit. In our framework, we describe this approximation as an expansion around the projection point from P(st−1) to the submanifold

By defining

The proposed framework is also a powerful tool to develop novel Plefka expansions. To make the expansions more accurately approximate target statistics, we can consider a reference manifold composed of multiple time steps while maintaining some of the parameters in the system (see Supplementary Note 1). Motivated by this idea, here we propose new methods that directly approximate pairwise activities of the units by choosing a reference manifold that preserves a coupling term.

Let us first consider the joint probability of any arbitrary pair of units at time t − 1 and t to compute the delayed correlations (Fig. 2E, left). Namely, we consider the joint probability of spins si,t and sl,t−1:

As in the cases above, we can calculate the equations for the first and second-order approximations (see Supplementary Note 5). Here, for the second-order approximation (which is more accurate than the first order) we have that:

These results are related to previous work43 that included autocorrelations as one of the constraints to derive the Plefka approximation. Instead, here we provide a Plefka approximation that includes delayed correlations between any pair of units.

To compute the above approximations, we need to know Ct−1 and Ct−2. Here, we provide similar pairwise Plefka approximations for the pairwise distribution at time t, P(si,t, sk,t). Since si,t, sk,t are conditionally independent, we can construct a model in which first sk,t is computed from st−1, and then si,t is computed conditioned on sk,t, st−1 (Fig. 2E, right):

Here θi,t is a function of sk,t that accounts for equal-time correlations between si,t and sk,t. Computed similarly to delayed correlations, the second-order approximation yields (see Supplementary Note 5):

Using these equations, approximate equal-time correlations are given as

In the subsequent sections, we compare the family of Plefka approximation methods described above by testing their performance in the forward and inverse Ising problems. More specifically, we compare the second-order approximations of Plefka[t − 1, t] and Plefka[t], the first order approximation of Plefka[t − 1], and the second-order pairwise approximation of Plefka2[t]. We define an Ising model as an asymmetric version of the kinetic Sherrington-Kirkpatrick (SK) model, setting its parameters around the equivalent of a ferromagnetic phase transition in the equilibrium SK model. External fields Hi are sampled from independent uniform distributions

Generally, mean-field methods are suitable for approximating properties of systems with small fluctuations. However, there is evidence that many biological systems operate in critical, highly fluctuating regimes5,6. In order to examine different approximations in such a biologically plausible yet challenging situation, we select the model parameters around a phase transition point displaying large fluctuations.

To find such conditions, we employed path-integral methods to solve the asymmetric SK model (Supplementary Note 6). We find that the stationary solution of the asymmetric model displays for our choice of parameters a non-equilibrium analogue of a critical point for a ferromagnetic phase transition, which takes place at βc ≈ 1.1108 in thermodynamic limit (see Supplementary Note 6, Supplementary Fig. 1). The uniformly distributed bias terms H shift the phase transition point from β = 1 obtained at H = 0. By simulation of the finite size systems, we confirmed that the maximum fluctuations in the model are found near the theoretical βc, which shows maximal covariance values (see Supplementary Note 6, Supplementary Fig. 2).

Fluctuations of a system are generally expected to be maximized at a critical phase transition19. In addition, entropy production (a signature of time irreversibility) has been suggested as an indicator of phase transitions. For example, it presents a peak at the transition point of a continuous phase transition in a non-equilibrium Curie-Weiss Ising model with oscillatory field50 and some instances of mean-field majority vote models51,52. We found that the entropy production of the kinetic Ising system is also maximized around βc (discussed later, see also Methods for its derivation).

We examine the performance of the different Plefka expansions in predicting the evolution of an asymmetric SK model of size N = 512 with random H and J. To study the nonstationary transient dynamics of the model, we start from s0 = 1 (all elements set to 1 at t = 0) and recursively update its state for T = 128 steps. We repeated this stochastic simulation for R = 106 trials for 21 values of β in the range [0.7βc, 1.3βc] (except for the reconstruction of the phase transition where we used R = 105 and 201 values of β in the same range). Using the R samples, we computed the statistical moments and cumulants of the system, mt, Ct, and Dt at each time step. We then computed their averages over the system units, i.e.,

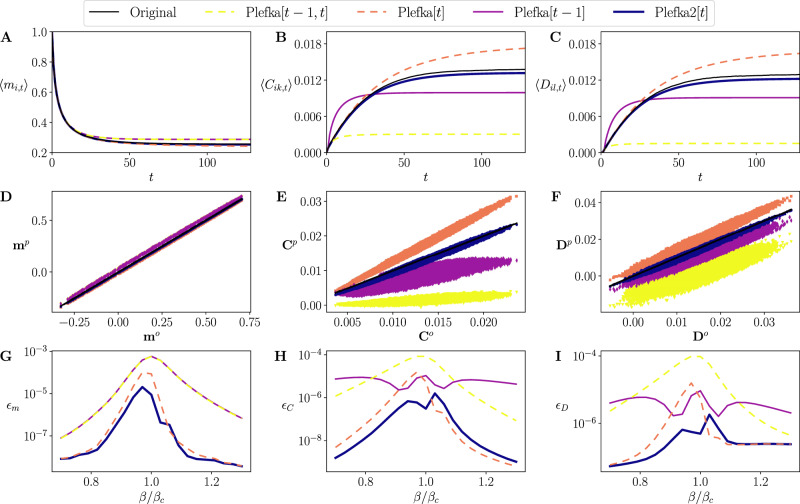

The black solid lines in Fig. 3A–C display nonstationary dynamics of these averaged statistics from t = 0, …, 128, simulated by the original model at

Forward Ising problem.

Top: Evolution of average activation rates (magnetizations) (A), equal-time correlations (B), and delayed correlations (C) found by different mean-field methods for β = βc. Middle: Comparison of the activation rates (D), equal-time correlations (E), and delayed correlations (F) found by the different Plefka approximations (ordinate, p superscript) with the original values (abscissa, o superscript) for β = βc and t = 128. Black lines represent the identity line. Bottom: Average squared error of the magnetizations

Performance of the methods in predicting individual activation rates and correlations are displayed in Fig. 3D–F by comparing vectors mt, Ct and Dt at the last time step (t = 128) of the original model (o superscript) and those of the Plefka approximations (p superscript). For activation rates mt, the proposed Plefka2[t] and Plefka[t] perform slightly better than the others (see also Fig. 3A). While being overestimated by Plefka[t], underestimated moderately by Plefka[t − 1] and significantly by Plefka[t − 1, t], equal-time and time-delayed correlations Ct, Dt are best predicted by Plefka2[t] (Fig. 3E, F).

The above results are obtained at the critical β = βc, intuitively the most challenging point for mean-field approximations. In order to further show that our novel approximation Plefka2[t] systematically outperforms the others in a wider parameter range, we repeated the analysis for different inverse temperatures β (the same random parameters are applied for all β). Fig. 3G, H, I, respectively, show the averaged squared errors (averaged over time and units) of the activation rates ϵm, equal-time correlations ϵC and delayed correlations ϵD between the original model and approximations, averaged over units and time for 21 values of β in the range [0.7βc, 1.3βc]. Fig. 3G–I shows that Plefka2[t] outperforms the other methods in computing mt, Ct, Dt (with the exception of a certain region of β > βc in which Plefka[t] is slightly better), yielding consistently a low error bound for all values of β. Errors of these approximations are smaller when the system is away from βc.

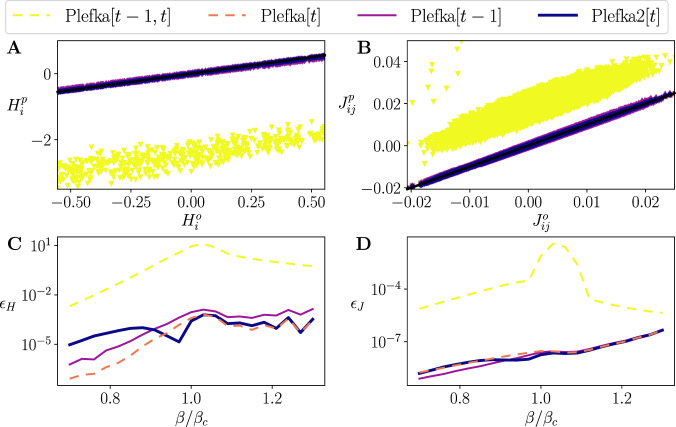

We apply the approximation methods to the inverse Ising problem by using the data generated above for the trajectory of T = 128 steps and R = 106 trials to infer the parameters of the model, H and J. The model parameters are estimated by the Boltzmann learning method under the maximum likelihood principle: H and J are updated to minimize the differences between the average rates mt or delayed correlations Dt of the original data and the model approximations, which can significantly reduce computational time (see Methods). While Boltzmann learning requires to compute the likelihood of every point in a trajectory and every trial (RT calculations) each iteration, we can estimate the gradient at each iteration in a one-shot computation by applying the Plefka approximations (Methods). At β = βc (Fig. 4A, B), we observe that the classical Plefka[t − 1, t] approximation adds significant offset values to the fields H and couplings J. In contrast, Plefka[t], Plefka[t − 1] and Plefka2[t] are all precise in estimating the values of H and J.

Inverse Ising problem.

Top: Inferred external fields (A) and couplings (B) found by different mean-field models plotted versus the real ones for β = βc. Black lines represent the identity line. Bottom: Average squared error of inferred external fields

Fig. 4C, D shows the mean squared error ϵH, ϵJ for bias terms and couplings between the original model and the inferred values for different β. In this case, errors are large in the estimation of J for Plefka[t − 1, t]. In comparison, Plefka[t], Plefka[t − 1] and Plefka2[t] work equally well even in the high fluctuation regime (β ≈ βc). Since the inverse Ising problem is solved by applying approximation one single time step (per iteration), it is not as challenging as the forward problem that can accumulate errors by recursively applying the approximations. Therefore, different approximations other than the classical mean-field Plefka[t − 1, t] perform equally well in this case.

We have shown how different methods perform in computing the behavior of the system (forward problem) and inferring the parameters of a given network from its activation data (inverse problem). Combining the two, we can ask how well the methods explored here can reconstruct the behavior of a system from data, potentially exploring behaviors under different conditions than the recorded data.

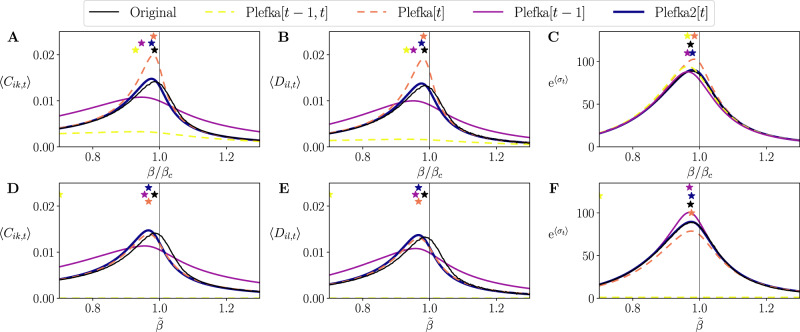

First, in Fig. 5A–C we examine how the different approximation methods approximate fluctuations (equal-time and time-delayed covariances) and the entropy production (see Methods) at t = 128 after solving the forward problem by recursively applying the approximations for the 128 steps. As we mentioned above, the asymmetric SK model explored here presents maximum fluctuations and maximum entropy production around β = βc (Supplementary Note 6, Supplementary Fig. 2). However, we see that Plefka[t − 1, t] and Plefka[t − 1] cannot reproduce the behavior of correlations Ct and Dt of the original SK model around the transition point. Plefka[t] and Plefka2[t] show much better performance in capturing the behavior of Ct and Dt in the phase transition, although Plefka[t] overestimates both correlations. Additionally, all the methods capture the phase transition in entropy production, though Plefka[t] overestimates its value around βc and Plefka2[t] is more precise than the other methods.

Reconstructing phase transition of kinetic Ising systems.

Top: Average of the Ising model’s equal-time correlations (A), delayed correlations (B), and entropy production (shown as an exponential for better presentation of its maximum) (C), at the last step t = 128 found by different mean-field methods for β = βc. Bottom (D–F): The same as above using the reconstructed network H, J by solving the inverse Ising problem at β = βc and multiplying a fictitious inverse temperature

Next, we combine the forward and inverse Ising problem and try to reproduce the transition in the asymmetric SK model in the models inferred from the data. We first take the values of H, J from solving the inverse problem from the data sampled at β = βc, and next we solve again the forward problem with those estimated parameters rescaled by a new inverse temperature

We have proposed a framework that unifies different mean-field approximations of the evolving statistical properties of non-equilibrium Ising models. This allows us to derive approximations premised on specific assumptions about the correlation structure of the system previously proposed in the literature. Furthermore, using our framework we derive a new approximation (Plefka2[t]) using atypical assumptions for mean-field methods, i.e., the maintenance of pairwise correlations in the system. This new pairwise approximation outperforms existing ones for approximating the behavior of an asymmetric SK model near the non-equilibrium equivalent of a ferromagnetic phase transition (see Supplementary Note 6), where classical mean-field approximations face problems. This shows that the proposed methods are useful tools to analyze large-scale, non-equilibrium dynamics near critical regimes expected for biological and social systems. However, we note that low-temperature spin phases (e.g., the spin-glass phase in symmetric models) also impose limitations on mean-field approximations32,41, which could be further explored with methods like the ones presented here.

The generality of this framework allows us to picture other approximations with atypical assumptions. For example, the Sessak-Monasson expansion53 for an equilibrium Ising model assumes a linear relation between α and spin correlations. An equivalent equilibrium expansion could use an effective field h(α) nonlinearly dependent on α, satisfying linear Ct(α) = αCt or Dt(α) = αDt relations. As another extension, Plefka2[t] could incorporate higher-order interactions. As Eqs. (43) and (52) are each equivalent to two mean-field approximations with sl,t−1 = ± 1 respectively, a generalized PlefkaM[t] would involve 2M−1 equations, increasing accuracy but also computational costs. In general, reference models Q(st) set coupling parameters of the model to zero at some steps of its dynamics. Other parameters (e.g., fields) are either free parameters fitted as m-projection from P(st), or preserved to their original value (see Supplementary Note 7 for comparing free and fixed parameters of each model). Augmenting accuracy by increasing parameters often involves a computational cost. As a practical guideline for using each method, Supplementary Note 7 compares their precision and computation time in the forward and inverse problems (see also Supplementary Figs. 3 and 4).

Asides from its theoretical implications, our unified framework offers analysis tools for diverse data-driven research fields. In neuroscience, it has been popular to study the activity of ensembles of neurons by inferring an equilibrium Ising model with homogeneous (fixed) parameters23 or inhomogeneous (time-dependent) parameters25,54 from empirical data. Extended analyses based on the equilibrium model have reported that neurons operate near a critical regime5,6. However, studies of non-equilibrium dynamics in neural spike trains are scarce7,26,55, partly due to the lack of systematic methods for analysing large-scale non-equilibrium data from neurons exhibiting large fluctuations. The proposed pairwise model Plefka2[t] is suitable for simulating such network activities, being more accurate than previous methods in predicting the network evolution at criticality (Fig. 3) and in testing if the system is near the maximally fluctuating regime (Fig. 5). In particular, application of our methods for computing entropy production in non-equilibrium systems could provide tools for characterizing the non-equilibrium dynamics of neural systems56.

In summary, a unified framework of mean-field theories offers a systematic way to construct suitable mean-field methods in accordance with the statistical properties of the systems researchers wish to uncover. This is expected to foster a variety of tools to analyze large-scale non-equilibrium systems in physical, biological, and social systems.

Let

The entropy production is defined as the KL divergence between the forward and backward path, quantifying the irreversibility of the system17,55,57:

The online version contains supplementary material available at 10.1038/s41467-021-20890-5.

We thank Yasser Roudi and Masanao Igarashi for valuable comments and discussions on this manuscript. This work was supported in part by the Cooperative Intelligence Joint Research between Kyoto University and Honda Research Institute Japan, MEXT/JSPS KAKENHI Grant Number JP 20K11709, and the grant of Joint Research by the National Institutes of Natural Sciences (NINS Program No. 01112005). M.A. was funded by the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 892715 and the University of the Basque Country UPV/EHU post-doctoral training program grant ESPDOC17/17, and supported in part by the Basque Government project IT 1228-19 and project Outonomy PID2019-104576GB-I00 by the Spanish Ministry of Science and Innovation.

M.A., S.A.M., and H.S. designed and reviewed research; M.A. contributed analytical and numerical results; M.A., S.A.M., and H.S. wrote the paper.

The datasets generated and analysed in this study are available under CC BY license at Zenodo https://zenodo.org/record/431898358 (10.5281/zenodo.4318983).

The source code for implementing the methods and results in this work is available under GPL license at GitHub https://github.com/MiguelAguilera/kinetic-Plefka-expansions59 (10.5281/zenodo.4357634).

The authors declare no competing interests.

1.

2.

3.

4.

5.

6.

7.

8.

9.

10.

11.

12.

13.

14.

15.

16.

17.

18.

19.

20.

21.

22.

23.

24.

25.

26.

27.

28.

29.

30.

31.

32.

33.

34.

35.

36.

37.

38.

39.

40.

41.

42.

43.

44.

45.

46.

47.

48.

49.

50.

51.

52.

53.

54.

55.

56.

57.

58.

59.